Comprehensive Guide to Artificial Intelligence with Python (Translate.p3)

- Tram Ho

Source: Edureka

In part 2, we explored four topics:

- Machine Learning Basics

- Types Of Machine Learning

- Types Of Problems Solved By Using Machine Learning

- Machine Learning Process

Continuing in this section, we will explore the following topics:

- Machine Learning With Python

- Limitations Of Machine Learning

- Why Deep Learning?

- How Deep Learning Works?

- What Is Deep Learning?

- Deep Learning Use Case

- Perceptrons

- Multilayer Perceptrons

9. Machine Learning With Python

In this section, we will implement Machine Learning using Python. Let's get started.

Problem Statement: Build a Machine Learning model that predicts whether it will rain tomorrow by studying past data.

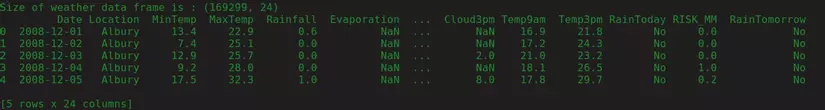

Data Set Description: This dataset contains about 145k observations about daily weather conditions as observed from many weather stations in Australia. The dataset has about 24 features and we will use 23 features (Predictor variables) to predict the target variable, which is “RainTomorrow”.

Download weatherAUS dataset: here.

This target variable (RainTomorrow) will store two values:

- Yes: Indicates that it will rain tomorrow

- No: Indicates that it will not rain tomorrow

Therefore, this is clearly a classification problem. The Machine Learning model will classify the output into two classes, Yes or No.

Logic: Building Classification models to predict whether it will rain tomorrow or not based on weather conditions.

Now that the goal is clear, let's put our brains to work and start coding.

Step 1: Import the required libraries

```python
# AI/main.py
# For linear algebra
import numpy as np
# For data processing
import pandas as pd
```

Step 2: Load the data set

```python
# AI/main.py
# import ...
# Load the data set
df = pd.read_csv('./weatherAUS.csv')
# Display the shape of the data set
print('Size of weather data frame is :', df.shape)
# Display the first five rows
print(df[0:5])
```

Running the command python main.py shows the result:

Step 3: Data preprocessing

```python
# AI/main.py
# Checking for null values: count() returns the number of non-null entries per column
print(df.count().sort_values())
```

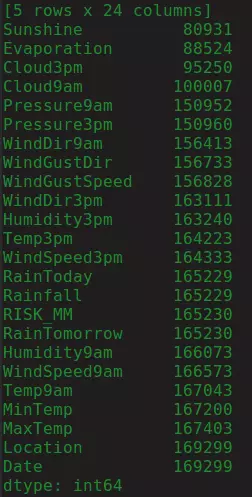

And the result is:

Note the output: it shows that the first four columns have more than 40% null values, so it is best to drop these columns.
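The share of missing values per column can also be computed directly instead of being read off the counts. Below is a minimal sketch on a small made-up data frame; only the column names come from the data set, the values and the 40% threshold variable are illustrative assumptions.

```python
import numpy as np
import pandas as pd

# Toy data frame; the values are made up for illustration
toy = pd.DataFrame({
    'Sunshine': [np.nan, np.nan, 7.1, np.nan, 9.3],
    'Rainfall': [0.0, 1.2, np.nan, 0.0, 3.4],
    'MinTemp': [13.4, 7.4, 12.9, 9.2, 17.5],
})

# isnull() marks missing cells; mean() turns the marks into a fraction per column
null_fraction = toy.isnull().mean().sort_values(ascending=False)
print(null_fraction)

# Columns whose missing share exceeds the chosen threshold (40% here)
mostly_missing = null_fraction[null_fraction > 0.4].index.tolist()
print(mostly_missing)
```

On the real data frame, the same two lines applied to df would list the candidate columns to drop.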

During data preprocessing, it is always necessary to eliminate insignificant variables. Unnecessary data only adds to the computation. Therefore, we will remove the 'Location' and 'Date' variables because they are not significant in predicting the weather.

We will also remove the variable 'RISK_MM' because we want to predict 'RainTomorrow', and RISK_MM (the amount of rainfall the next day) would leak information about the answer to our model.
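To see why a column like RISK_MM is dangerous, consider the sketch below. It uses made-up rainfall amounts and assumes, for illustration only, that the target is derived from next-day rainfall with a 1 mm threshold; the threshold and all variable names here are assumptions, not taken from the data set documentation.

```python
import numpy as np

rng = np.random.default_rng(0)

# Made-up next-day rainfall amounts in mm (stands in for RISK_MM)
risk_mm = rng.exponential(scale=2.0, size=1000)

# ASSUMPTION: the target is derived from next-day rainfall with a 1 mm threshold
rain_tomorrow = (risk_mm >= 1.0).astype(int)

# A "model" that only looks at the leaked column recovers the target exactly
leaky_prediction = (risk_mm >= 1.0).astype(int)
leaky_accuracy = (leaky_prediction == rain_tomorrow).mean()
print('Accuracy using only the leaked column:', leaky_accuracy)
```

A perfect score obtained this way says nothing about real forecasting ability, because next-day rainfall is not known at prediction time.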

```python
# AI/main.py
...
# Drop the sparse, categorical, leaking and insignificant columns
df = df.drop(columns=['WindDir3pm', 'WindDir9am', 'WindGustDir', 'Sunshine',
                      'Evaporation', 'Cloud3pm', 'Cloud9am', 'Location',
                      'RISK_MM', 'Date'])
print(df.shape)
# Output: (169299, 17)
```

Next, we will remove every row that contains a null value from our data frame.

```python
# AI/main.py
...
# Removing null values
df = df.dropna(how='any')
print(df.shape)
# Output: (130840, 17)
```

After eliminating null values, we must also check our data set for outliers. An outlier is a data point that differs significantly from the other observations. Outliers often occur due to errors made during data collection.
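One common way to flag such points is the z-score, the distance of a value from the column mean measured in standard deviations. The following is a minimal sketch on made-up temperatures (the readings and the cutoff of 3 are illustrative assumptions):

```python
import numpy as np
import pandas as pd

# Made-up temperatures with one obviously wrong reading (95.0)
toy = pd.DataFrame({'MaxTemp': [22.0, 23.5, 21.8, 24.1, 22.9, 23.3,
                                22.4, 23.8, 21.5, 24.6, 22.1, 95.0]})

# z-score: distance from the column mean in units of standard deviation
z = np.abs((toy - toy.mean()) / toy.std(ddof=0))

# Keep only rows where every column is within 3 standard deviations
filtered = toy[(z < 3).all(axis=1)]
print(filtered.shape)
```

Only the row with the implausible reading is dropped; the other eleven rows pass the filter.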

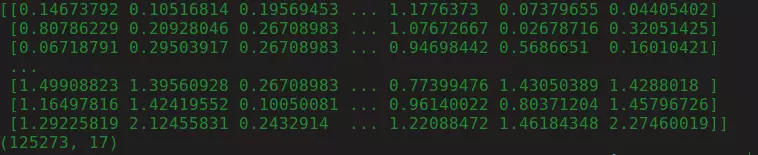

In the code below, we will remove the outliers:

```python
# AI/main.py
# import ...
from scipy import stats
...
# Absolute z-score of every numeric column
z = np.abs(stats.zscore(df.select_dtypes(include=np.number)))
print(z)
# Keep only the rows in which all numeric values lie within 3 standard deviations
df = df[(z < 3).all(axis=1)]
print(df.shape)
```

Recorded results:

Next, we will replace Yes and No with 1 and 0.

```python
# AI/main.py
...
# Change Yes and No to 1 and 0 respectively for the RainToday and RainTomorrow variables
# (assigning the result back avoids relying on in-place changes to a column selection)
df['RainToday'] = df['RainToday'].map({'No': 0, 'Yes': 1})
df['RainTomorrow'] = df['RainTomorrow'].map({'No': 0, 'Yes': 1})
```

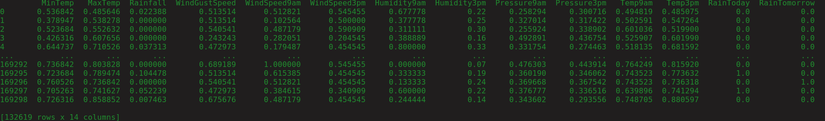

Now it is time to normalize the data so that features with large value ranges do not bias the predictions. To do this, we can use the MinMaxScaler class included in the sklearn library.
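For intuition, the rule that min-max scaling applies to each column is (x - min) / (max - min), which maps the smallest value to 0 and the largest to 1. Here is a minimal sketch with plain NumPy on made-up humidity readings (the numbers are illustrative and not taken from the data set):

```python
import numpy as np

# Made-up humidity readings in percent
humidity = np.array([22.0, 35.0, 48.0, 61.0, 100.0])

# Min-max normalization: x_scaled = (x - min) / (max - min)
scaled = (humidity - humidity.min()) / (humidity.max() - humidity.min())
print(scaled)
```

MinMaxScaler with its default settings applies this same rule column by column.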

```python
# AI/main.py
# import ...
from sklearn import preprocessing

scaler = preprocessing.MinMaxScaler()
scaler.fit(df)
# Rebuild the data frame so that the index and column names are preserved
df = pd.DataFrame(scaler.transform(df), index=df.index, columns=df.columns)
# Display a few rows of the scaled data
print(df.iloc[4:10])
```

The result will be:

Step 4: Exploratory Data Analysis (EDA)

Now that we have pre-processed the data set, it's time to analyze it and identify the significant variables that will help us predict the outcome. To do this, we will use the SelectKBest class found in the sklearn library:

```python
# AI/main.py
# import ...
from sklearn.feature_selection import SelectKBest, chi2

# Every column except the target is a candidate predictor
X = df.loc[:, df.columns != 'RainTomorrow']
y = df[['RainTomorrow']]
# Keep the 3 features with the highest chi-squared score
selector = SelectKBest(chi2, k=3)
selector.fit(X, y)
X_new = selector.transform(X)
print(X.columns[selector.get_support(indices=True)])
```

The output gives us the three most important predictor variables:

- Rainfall

- Humidity3pm

- RainToday

Index(['Rainfall', 'Humidity3pm', 'RainToday'], dtype='object')
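To look behind the selection, the fitted selector also exposes one chi-squared score per column. The sketch below uses synthetic data with hypothetical column names ('signal' and 'noise') to show how an informative feature scores against an unrelated one. Note that chi2 requires non-negative feature values, which min-max scaled data satisfies.

```python
import numpy as np
import pandas as pd
from sklearn.feature_selection import SelectKBest, chi2

rng = np.random.default_rng(0)
n = 200

# Made-up binary target and two non-negative features in [0, 1]
target = np.repeat([0, 1], n // 2)
features = pd.DataFrame({
    # 'signal' is high when the target is 1, so it carries information
    'signal': 0.8 * target + 0.2 * rng.random(n),
    # 'noise' is unrelated to the target
    'noise': rng.random(n),
})

selector = SelectKBest(chi2, k=1).fit(features, target)

# scores_ holds one chi-squared statistic per column; higher means more relevant
scores = pd.Series(selector.scores_, index=features.columns)
print(scores.sort_values(ascending=False))
selected = list(features.columns[selector.get_support()])
print(selected)
```

Printing the scores for the weather data in the same way would show how far apart the top three variables are from the rest.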

The main purpose of this demo is to help you understand how Machine Learning works, so to simplify the calculations, we will use only one of these significant variables as the input.

```python
# AI/main.py
...
# The important features are put in a data frame
df = df[['Humidity3pm', 'Rainfall', 'RainToday', 'RainTomorrow']]
# To simplify computations we will use only one feature (Humidity3pm) to build the model
X = df[['Humidity3pm']]
y = df[['RainTomorrow']]
```

In the code above, X and y denote the input and the output, respectively.

Step 5: Build a Machine Learning Model

In this step, we will build the Machine Learning model using a training data set and evaluate the effectiveness of the model using the testing data set.
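The split into training and testing sets is random, so two runs (or two models that each split the data again) generally see different rows. The following sketch on made-up data shows how a split can be made reproducible; random_state and stratify are optional parameters of train_test_split that the tutorial code does not use, and the toy arrays are assumptions for illustration.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Made-up data: 100 samples, one feature, 20% positive labels
X_toy = np.arange(100, dtype=float).reshape(-1, 1)
y_toy = np.array([1] * 20 + [0] * 80)

# random_state fixes the shuffle; stratify keeps the class ratio in both parts
X_train, X_test, y_train, y_test = train_test_split(
    X_toy, y_toy, test_size=0.25, random_state=0, stratify=y_toy)

print(X_train.shape, X_test.shape)
print(y_train.mean(), y_test.mean())
```

Splitting once and reusing the same four arrays for every classifier makes the accuracy scores directly comparable.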

We will build classification models using the following algorithms:

- Logistic Regression

- Random Forest

- Decision Tree

- Support Vector Machine

Below is the code snippet for each of these classification models:

Logistic Regression

```python
# AI/main.py
...
# Logistic Regression
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score
import time

# Calculating the accuracy and the time taken by the classifier
t0 = time.time()
# Data splitting
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25)
clf_logreg = LogisticRegression(random_state=0)
# Building the model using the training data set
# (values.ravel() turns the one-column data frame into the 1-D array that fit expects)
clf_logreg.fit(X_train, y_train.values.ravel())
# Evaluating the model using the testing data set
y_pred = clf_logreg.predict(X_test)
score = accuracy_score(y_test, y_pred)
# Printing the accuracy and the time taken by the classifier
print('Accuracy using Logistic Regression:', score)  # 0.8395113859146434
print('Time taken using Logistic Regression:', time.time() - t0)  # 0.10015130043029785
```

Random Forest Classifier

```python
# AI/main.py
...
# Random Forest Classifier
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

# Calculating the accuracy and the time taken by the classifier
t0 = time.time()
# Data splitting
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25)
clf_rf = RandomForestClassifier(n_estimators=100, max_depth=4, random_state=0)
# Building the model using the training data set
# (values.ravel() turns the one-column data frame into the 1-D array that fit expects)
clf_rf.fit(X_train, y_train.values.ravel())
# Evaluating the model using the testing data set
y_pred = clf_rf.predict(X_test)
score = accuracy_score(y_test, y_pred)
# Printing the accuracy and the time taken by the classifier
print('Accuracy using Random Forest Classifier:', score)  # 0.8424973608807118
print('Time taken using Random Forest Classifier:', time.time() - t0)  # 1.558568000793457
```

Decision Tree Classifier

```python
# AI/main.py
...
# Decision Tree Classifier
from sklearn.tree import DecisionTreeClassifier
from sklearn.model_selection import train_test_split

# Calculating the accuracy and the time taken by the classifier
t0 = time.time()
# Data splitting
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25)
clf_dt = DecisionTreeClassifier(random_state=0)
# Building the model using the training data set
# (values.ravel() turns the one-column data frame into the 1-D array that fit expects)
clf_dt.fit(X_train, y_train.values.ravel())
# Evaluating the model using the testing data set
y_pred = clf_dt.predict(X_test)
score = accuracy_score(y_test, y_pred)
# Printing the accuracy and the time taken by the classifier
print('Accuracy using Decision Tree Classifier:', score)  # 0.8382144472930176
print('Time taken using Decision Tree Classifier:', time.time() - t0)  # 0.033601999282836914
```

Support Vector Machine

```python
# AI/main.py
...
# Support Vector Machine
from sklearn import svm
from sklearn.model_selection import train_test_split

# Calculating the accuracy and the time taken by the classifier
t0 = time.time()
# Data splitting
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25)
clf_svc = svm.SVC(kernel='linear')
# Building the model using the training data set
# (values.ravel() turns the one-column data frame into the 1-D array that fit expects)
clf_svc.fit(X_train, y_train.values.ravel())
# Evaluating the model using the testing data set
y_pred = clf_svc.predict(X_test)
score = accuracy_score(y_test, y_pred)
# Printing the accuracy and the time taken by the classifier
print('Accuracy using Support Vector Machine:', score)  # 0.804614688583924
print('Time taken using Support Vector Machine:', time.time() - t0)  # 49.53200387954712
```

All classification models give us an accuracy score of about 83-84%, except the Support Vector Machine, which scores about 80% and takes far longer to train. Considering the size of our data set, the accuracy is pretty good.
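When judging an accuracy score, it can help to compare it with a trivial baseline that always predicts the most frequent class. Below is a minimal sketch on made-up labels; the 20/80 class ratio is an illustrative assumption, not a figure measured on the weather data.

```python
import numpy as np

# Made-up labels: 1 = rain tomorrow, 0 = no rain (the 20/80 ratio is illustrative)
y_true = np.array([1] * 20 + [0] * 80)

# Baseline "model": always predict the most frequent class
majority_class = np.bincount(y_true).argmax()
baseline_pred = np.full_like(y_true, majority_class)
baseline_accuracy = (baseline_pred == y_true).mean()
print('Majority-class baseline accuracy:', baseline_accuracy)
```

A classifier is only useful to the extent that it beats this number on the same test set.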

To learn more about Machine Learning, read these blogs:

- What is Machine Learning? Machine Learning For Beginners

- Machine Learning Tutorial for Beginners

- Latest Machine Learning Projects to Try in 2019

10. Limitations Of Machine Learning

The following are the limitations of Machine Learning:

- Machine Learning is not capable of handling and processing high-dimensional data.

- It cannot be used effectively in image recognition and object detection because these tasks involve high-dimensional data.

- Another major challenge in Machine Learning is telling the machine the important features to look for to accurately predict results. This process is called feature extraction. Feature extraction is a manual process in Machine Learning.

The above limitations can be solved by using Deep Learning.

11. Why Deep Learning?

- Deep Learning is one of the few ways to overcome the challenges of feature extraction. This is because Deep Learning models can learn to focus on the right features by themselves, requiring minimal human intervention.

- Deep Learning is mainly used to deal with high-dimensional data. It is based on the concept of Neural Networks and is often used in object detection and image processing.

Now let us understand how Deep Learning works.

12. How Deep Learning Works?

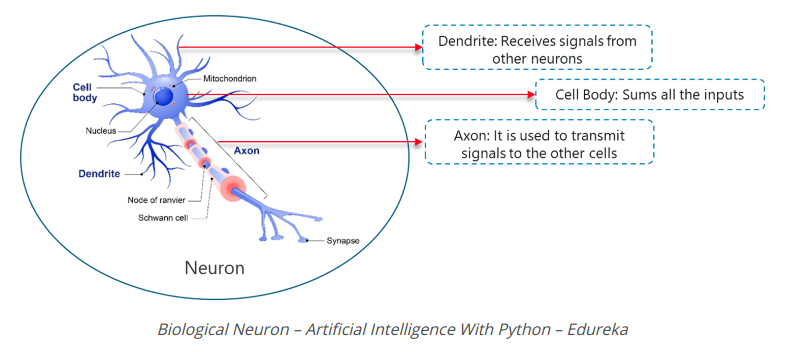

Deep Learning mimics the basic component of the human brain, the brain cell or neuron. The artificial neuron was developed with the biological neuron as its inspiration.

Deep Learning is based on the function of a biological neuron, so let’s understand how we mimic this function in artificial neurons (also known as perceptrons):

- In a biological neuron, dendrites receive the inputs. These inputs are summed in the cell body and transmitted to the next neuron through the axon.

- Similar to biological neurons, a perceptron receives multiple inputs, applies different transformations and functions, and provides an output.

- The human brain consists of many connected neurons, which form a neural network. Similarly, by combining multiple perceptrons, we get what is known as a Deep Neural Network.

Now let us understand exactly what Deep Learning is.

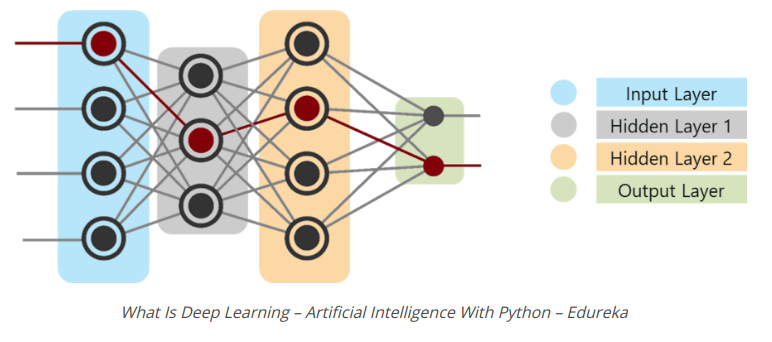

13. What Is Deep Learning?

Deep Learning is a collection of statistical machine learning techniques used to learn feature hierarchies based on the concept of artificial neural networks.

A Deep Neural Network consists of the following layers:

- The Input Layer

- The Hidden Layer

- The Output Layer

In the picture above:

- The first layer is the input layer, which accepts all inputs.

- The final layer is the output layer that provides the desired output.

- All layers in between these layers are called hidden layers.

- There can be any number of hidden layers. The number of hidden layers and the number of perceptrons in each layer depend entirely on the use case you are trying to solve.

Deep Learning is used in computationally intensive use cases such as face verification, self-driving cars, etc. Let us understand the importance of Deep Learning by looking at a real-world use case.
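The layer structure described above can be sketched in a few lines of plain Python. This is only an illustrative sketch, not code from the original article: the layer sizes, the sigmoid activation, the random weights, and the helper names `make_layer` and `forward` are all assumptions chosen for the example.

```python
import math
import random


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def make_layer(n_inputs, n_neurons, rng):
    """Create one fully connected layer with random weights and zero biases."""
    return [
        {"weights": [rng.uniform(-1.0, 1.0) for _ in range(n_inputs)], "bias": 0.0}
        for _ in range(n_neurons)
    ]


def forward(network, inputs):
    """Pass the inputs through every layer in turn and return the final activations."""
    activations = list(inputs)
    for layer in network:
        activations = [
            sigmoid(sum(w * a for w, a in zip(neuron["weights"], activations)) + neuron["bias"])
            for neuron in layer
        ]
    return activations


# Hypothetical sizes: 3 inputs, two hidden layers of 4 neurons each, 1 output.
layer_sizes = [3, 4, 4, 1]
rng = random.Random(42)
network = [make_layer(n_in, n_out, rng) for n_in, n_out in zip(layer_sizes, layer_sizes[1:])]

output = forward(network, [0.5, -1.2, 3.0])
print(output)  # a list with one value between 0 and 1
```

Changing `layer_sizes` is all it takes to add or remove hidden layers, which mirrors the point that the depth and width depend on the use case.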

14. Deep Learning Use Case

Consider how PayPal uses Deep Learning to identify possible fraudulent activities. PayPal has processed more than $235 billion in payments from four billion transactions by more than 170 million customers.

PayPal used Machine Learning and Deep Learning algorithms to mine data from customers’ purchase histories, in addition to reviewing patterns of likely fraud stored in its databases, to predict whether a transaction is fraudulent or not.

The company has relied on Deep Learning & Machine Learning technology for about 10 years. Initially, the fraud monitoring team used simple linear models. But over the years, the company turned to a more advanced Machine Learning technology called Deep Learning.

Ke Wang, who works in fraud management and data science at PayPal, says:

What we enjoy from more modern, advanced machine learning is its ability to consume a lot more data, handle layers and layers of abstraction and be able to ‘see’ things that a simpler technology would not be able to see, even human beings might not be able to see.

A simple linear model is capable of consuming about 20 variables. However, with Deep Learning technology, one can run thousands of data points.

“There’s a magnitude of difference — you’ll be able to analyze a lot more information and identify patterns that are a lot more sophisticated”

Therefore, by implementing Deep Learning technology, PayPal can finally analyze millions of transactions to identify any fraudulent activity.

To better understand Deep Learning, let us look at how a perceptron works.

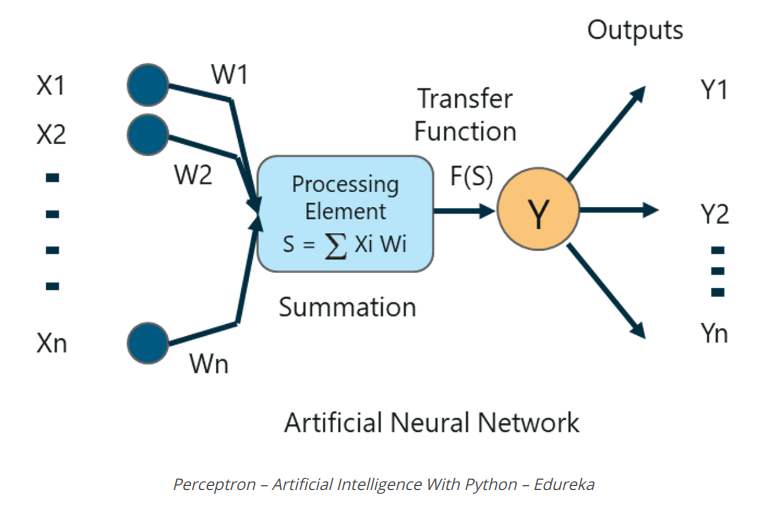

15. What Is A Perceptron?

A perceptron is a single-layer neural network used to classify linearly separable data. A perceptron has 4 important components:

- Input

- Weights and Bias

- Summation Function

- Activation or transformation Function

The basic logic behind a perceptron is as follows:

The inputs (x) received from the input layer are multiplied by their assigned weights (w). The products are then added together to form the weighted sum. The weighted sum is then passed to an Activation Function, which maps it to the corresponding output.
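As a rough illustration of this logic, the sketch below computes the output of a single perceptron. The step activation (output 1 when the weighted sum is positive, otherwise 0), the function name `perceptron_output`, and the example weights and bias are assumptions made for the example, not values from the original article.

```python
def perceptron_output(inputs, weights, bias):
    """Return 1 if the weighted sum plus bias is positive, otherwise 0."""
    # Multiply each input by its weight and add the products together.
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    # Step activation: map the weighted sum to a binary output.
    return 1 if weighted_sum > 0 else 0


# Hypothetical weights and bias, chosen by hand for illustration.
weights = [0.6, 0.4]
bias = -0.5

print(perceptron_output([1, 0], weights, bias))  # 0.6 - 0.5 > 0, so 1
print(perceptron_output([0, 1], weights, bias))  # 0.4 - 0.5 < 0, so 0
```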

Weights and Bias In Deep Learning

Why do we have to assign weights to each input?

When an input variable is fed into the network, a randomly chosen value is assigned as the weight of that input. The weight of each input indicates how important that input is in predicting the outcome.

On the other hand, the bias parameter allows you to shift the activation function curve so that the correct output is achieved.

Summation Function

Once the inputs have been assigned weights, each input is multiplied by its corresponding weight. Adding all these products gives us the weighted sum. This is done by the summation function.

Activation Function

The main purpose of the activation function is to map the weighted sum to the output. Functions such as tanh, ReLU, and sigmoid are examples of activation functions.
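For reference, here is a minimal sketch of three commonly used activation functions (sigmoid, tanh, and ReLU) written with only the Python standard library. The sample inputs are arbitrary and serve only to show how each function maps a weighted sum to an output.

```python
import math


def sigmoid(z):
    """Map any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def tanh(z):
    """Map any real number into the range (-1, 1)."""
    return math.tanh(z)


def relu(z):
    """Pass positive values through unchanged and clip negatives to 0."""
    return max(0.0, z)


# Apply each function to a few sample weighted sums.
for z in (-2.0, 0.0, 2.0):
    print(z, sigmoid(z), tanh(z), relu(z))
```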

To learn more about Perceptron’s functions, you can take a look at the Deep Learning blog: Perceptron Learning Algorithm.

Now let us understand the concept of Multilayer Perceptrons.

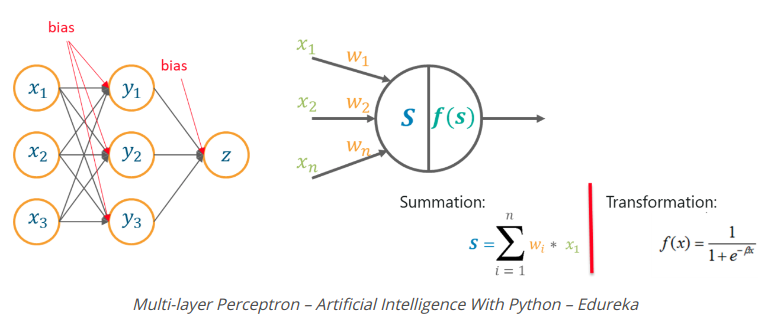

16. Multilayer Perceptrons

Why Is A Multilayer Perceptron Used?

A single-layer perceptron does not have the ability to handle high-dimensional data, and it cannot be used to classify data that is not linearly separable.

Therefore, complex issues involving a large number of parameters can be solved using Multilayer Perceptrons.
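The limitation of a single perceptron can be seen on a toy example. The sketch below trains one perceptron with the classic perceptron learning rule (a standard technique, not something spelled out in the original article) on the AND function, which is linearly separable, and on the XOR function, which is not. The learning rate, epoch count, and helper names are assumptions for illustration.

```python
def predict(weights, bias, x):
    """Step-activated perceptron: 1 if the weighted sum is positive, else 0."""
    weighted_sum = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if weighted_sum > 0 else 0


def train_perceptron(data, lr=1.0, epochs=50):
    """Perceptron learning rule: nudge the weights by the prediction error."""
    weights = [0.0] * len(data[0][0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in data:
            error = y - predict(weights, bias, x)
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


def accuracy(data, weights, bias):
    """Fraction of samples the perceptron classifies correctly."""
    correct = sum(predict(weights, bias, x) == y for x, y in data)
    return correct / len(data)


AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

and_acc = accuracy(AND_DATA, *train_perceptron(AND_DATA))
xor_acc = accuracy(XOR_DATA, *train_perceptron(XOR_DATA))
print("AND accuracy:", and_acc)  # reaches 1.0
print("XOR accuracy:", xor_acc)  # stays below 1.0
```

No single straight line separates the XOR classes, so no choice of weights and bias can classify all four points correctly, however long the perceptron is trained.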

What Is A Multilayer Perceptron?

A Multilayer Perceptron is a classifier that contains one or more hidden layers and is based on the feedforward artificial neural network. In feedforward networks, each layer is fully connected to the next.

How Does A Multilayer Perceptron Work?

In a Multilayer Perceptron, the weights initially assigned to each input are updated to minimize the resulting error in the computed output.

This is done because we initially assigned random weight values to each input, which obviously does not give us the desired results, so the weights need to be updated until the output is correct.

The process of updating weights and training networks is called Backpropagation.

Backpropagation is the logic behind Multilayer Perceptrons. This method is used to update the weights so that the most important input variables receive the largest weights, thus reducing the error in the computed output.

So that’s the logic behind Artificial Neural Networks. If you want to learn more, read the Neural Network Tutorial – Multi-Layer Perceptron.
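To make the weight-update idea concrete, here is a minimal sketch of backpropagation for a Multilayer Perceptron with one hidden layer, written in plain Python. Everything specific in it is an assumption for illustration rather than part of the original article: the XOR toy data, sigmoid activations, squared-error loss, 4 hidden neurons, the learning rate, and the function name `train_mlp`.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_mlp(data, hidden=4, lr=0.5, epochs=3000, seed=0):
    """Train a one-hidden-layer network and return the mean loss per epoch."""
    rng = random.Random(seed)
    n_in = len(data[0][0])
    # Start from random weights, as described in the text.
    w_h = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(hidden)]
    b_h = [0.0] * hidden
    w_o = [rng.uniform(-1, 1) for _ in range(hidden)]
    b_o = 0.0
    losses = []
    for _ in range(epochs):
        total = 0.0
        for x, y in data:
            # Forward pass: inputs -> hidden layer -> output.
            h = [sigmoid(sum(w * xi for w, xi in zip(w_h[j], x)) + b_h[j])
                 for j in range(hidden)]
            out = sigmoid(sum(w * hj for w, hj in zip(w_o, h)) + b_o)
            total += 0.5 * (out - y) ** 2
            # Backward pass: propagate the error from the output to the hidden layer.
            d_out = (out - y) * out * (1 - out)
            d_h = [d_out * w_o[j] * h[j] * (1 - h[j]) for j in range(hidden)]
            # Gradient-descent update of every weight and bias.
            for j in range(hidden):
                w_o[j] -= lr * d_out * h[j]
                b_h[j] -= lr * d_h[j]
                for i in range(n_in):
                    w_h[j][i] -= lr * d_h[j] * x[i]
            b_o -= lr * d_out
        losses.append(total / len(data))
    return losses


XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
losses = train_mlp(XOR_DATA)
print("first epoch loss:", losses[0])
print("last epoch loss:", losses[-1])
```

The loss in the last epoch should be lower than in the first, which is the "reducing errors" effect that backpropagation is meant to achieve. How close it gets to zero depends on the random starting weights and the hyperparameters chosen.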

Source : Viblo