Understand your problem

The first and most important step is to understand what the application requires from audio. You need answers to the questions below to estimate the work accurately, and customers often will not know the specs until you ask.

The first question is one you can probably already answer: does the app play audio, record it, or both?

Applications that play audio

- Where does the application load audio from? (from iOS, from local app files, or from a server over the network)

- What is audio used for? (sound effect, background music, music)

- Does the application allow audio from other applications (such as when users turn on audio from iTunes, Zing Mp3, Spotify, Podcast, etc.) to be played together?

- Can users interact with the audio? If so, what can they do? (play / pause, next / back, change play speed, change volume, …)

- Should the headset buttons work, and which events need handling? (toggle play / pause, next / back, …)

- Should a media player appear on the lock screen? If so, what information does it show and which buttons can the user interact with?

- How should the app behave when headphones are plugged in or unplugged?

- What are the specific playback behaviors when headphones are plugged in or unplugged, or when playback is interrupted by other apps or incoming calls?

Applications that record audio

- Can the application cut audio or merge audio?

- Does the app need to draw the sound wave?

- If it does, how should the waveform animate? (This affects how often recorded data is released from the buffer, so it is important to clarify.)

- Is the data compressed after recording? If so, in what format?

- Does the app need to recover and continue recording after it is killed unexpectedly?

- What are the specific recording behaviors when headphones are plugged in or unplugged, or when recording is interrupted by other applications or incoming calls?

Generally speaking, recording is the harder of the two, and you need to understand more media concepts. If the requirements are complex, you may even have to go down to the Core Audio layer and work with its C libraries: understand and set up the input / output ports correctly, manage the buffers yourself, and so on. It is best to get back up to the AVFoundation layer as quickly as possible.

Special requirements in an application's specs will raise further problems. Treat the questions above as a reference list and expand it as your project needs.

Understand Audio interaction classes in AVFoundation

First, what is AVFoundation? It is unlikely that anyone planning to work with audio has never heard of it. Still, to understand it better, let's go over it again.

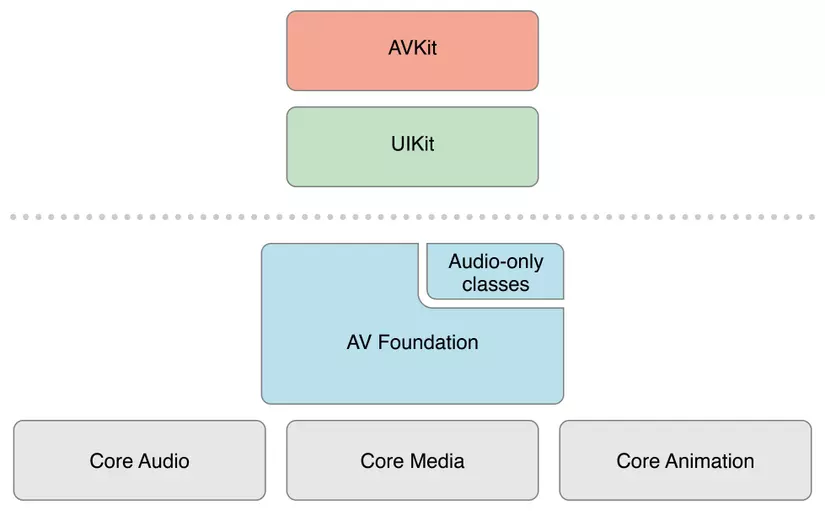

Once upon a time, before AVFoundation existed (and even after it did, while it still had bugs), developers who wanted to build apps that interact with audio had to work at the Core Audio layer, write C / C++, and deal with a lot of details, including setting up the hardware controls.

Apple then developed AVFoundation: it created shared classes, took over the messy parts that we used to implement over and over again with Core Audio, and exposed a convenient Objective-C interface.

The AVFoundation framework is, admittedly, very convenient. Playing or recording audio takes only a short piece of code that is clear and easy to understand.

So what does AVFoundation contain that makes it what it is?

AVAudioSession

If you don’t already know, each iOS application has a single audio session, accessible in code through AVAudioSession.sharedInstance().

The audio session is the intermediary between iOS and the application. Through it, the app tells the system how it intends to use audio, and we express that intent by configuring the session's properties.

The audio session has three basic functions:

- Setting the category: a category is a key that defines a type of audio behavior for our app. Details on the available categories are in Apple's documentation for AVAudioSession.

- Posting notifications about interruptions and route changes.

- Reading the hardware parameters.

The audio session is the first thing to consider and set up, because it determines the audio behavior of the whole application.
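As a rough illustration of those three functions, here is a minimal sketch of configuring the session for playback. The helper name configureAudioSessionForPlayback and its mixWithOthers parameter are made up for this example; the right category and options depend on your answers to the questions in the first section.

```swift
import AVFoundation

/// Hypothetical helper: configures and activates the shared audio session
/// for playback. The category and options are illustrative choices.
func configureAudioSessionForPlayback(mixWithOthers: Bool) throws {
    let session = AVAudioSession.sharedInstance()

    // The category tells iOS what kind of audio behavior the app wants.
    // `.mixWithOthers` lets audio from other apps keep playing alongside ours.
    let options: AVAudioSession.CategoryOptions = mixWithOthers ? [.mixWithOthers] : []
    try session.setCategory(.playback, mode: .default, options: options)

    // Activating the session applies the configuration.
    try session.setActive(true)

    // Hardware parameters can be read back from the session.
    print("Sample rate: \(session.sampleRate) Hz")
    print("Output channels: \(session.outputNumberOfChannels)")
}
```

An app would typically call something like this once, before creating any player, and handle the thrown error if the system refuses the configuration.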

AVAudioPlayer

As the name implies, this is an audio player. It is not quite as powerful as the name suggests, though, because it can only play audio from local storage or from an in-memory buffer. This means the file must already be on the device, or be downloaded first, before AVAudioPlayer can play it.

For the full API, refer to Apple’s docs: AVAudioPlayer

Note that, unlike AVPlayer, AVAudioPlayer does not automatically handle audio session interruptions or audio session route changes, so we need to handle them ourselves. See:
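A minimal sketch of local playback might look like the following. The SoundEffectPlayer class is a hypothetical wrapper, and it assumes the audio file is bundled with the app.

```swift
import AVFoundation

/// Hypothetical wrapper around AVAudioPlayer for a file in the app bundle.
final class SoundEffectPlayer {
    // Keep a strong reference; playback stops if the player is deallocated.
    private var player: AVAudioPlayer?

    /// True while the underlying player is playing.
    var isPlaying: Bool { player?.isPlaying ?? false }

    func play(resource name: String, withExtension ext: String) throws {
        // AVAudioPlayer needs a local file URL (or in-memory data).
        guard let url = Bundle.main.url(forResource: name, withExtension: ext) else {
            throw CocoaError(.fileNoSuchFile)
        }
        let player = try AVAudioPlayer(contentsOf: url)
        player.volume = 0.8      // linear gain, 0.0 ... 1.0
        player.prepareToPlay()   // preloads buffers to reduce start latency
        player.play()
        self.player = player
    }

    func stop() {
        player?.stop()
        player = nil
    }
}
```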

Responding to Audio Session Interruptions

Responding to Audio Session Route Changes

In fact, AVAudioPlayer also stops by itself when it encounters an interruption, but leaving interruptions to this passive mechanism creates a bug. I have worked with it myself and had to deal with this bug. We will talk about it more later!
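Handling both notifications yourself can be sketched as below. AudioSessionObserver and its onPause / onResume callbacks are hypothetical names; what the app actually does in each case depends on the behaviors agreed in the requirements.

```swift
import AVFoundation

/// Hypothetical observer that forwards session events to app-supplied callbacks.
final class AudioSessionObserver {
    private var tokens: [NSObjectProtocol] = []

    init(onPause: @escaping () -> Void, onResume: @escaping () -> Void) {
        let center = NotificationCenter.default
        let session = AVAudioSession.sharedInstance()

        // Interruptions: incoming calls, alarms, other apps taking over audio.
        tokens.append(center.addObserver(forName: AVAudioSession.interruptionNotification,
                                         object: session, queue: .main) { note in
            guard let raw = note.userInfo?[AVAudioSessionInterruptionTypeKey] as? UInt,
                  let type = AVAudioSession.InterruptionType(rawValue: raw) else { return }
            switch type {
            case .began:
                onPause()
            case .ended:
                // Resume only when the system says it is appropriate.
                let rawOptions = note.userInfo?[AVAudioSessionInterruptionOptionKey] as? UInt ?? 0
                if AVAudioSession.InterruptionOptions(rawValue: rawOptions).contains(.shouldResume) {
                    onResume()
                }
            @unknown default:
                break
            }
        })

        // Route changes: headphones plugged in or unplugged, Bluetooth, and so on.
        tokens.append(center.addObserver(forName: AVAudioSession.routeChangeNotification,
                                         object: session, queue: .main) { note in
            guard let raw = note.userInfo?[AVAudioSessionRouteChangeReasonKey] as? UInt,
                  let reason = AVAudioSession.RouteChangeReason(rawValue: raw) else { return }
            // The previous output (for example headphones) disappeared.
            if reason == .oldDeviceUnavailable { onPause() }
        })
    }

    deinit {
        for token in tokens {
            NotificationCenter.default.removeObserver(token)
        }
    }
}
```

Pausing when the old device becomes unavailable is the usual convention for unplugged headphones, but it is a product decision rather than a rule.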

AVPlayer

This is a very formidable player. Why is it called just a Player and not something like AudioPlayer?

Because it can play video too, ladies and gentlemen!

In other words, it is a versatile player that can play audio / video from local files and audio / video from an external network connection. It also provides a different playback mechanism from AVAudioPlayer, namely AVPlayerItem. Thanks to that, we can separate error-handling logic between the player and the item and cover some more complex cases.

AVPlayer automatically handles audio session interruptions and route changes. If you want to update the UI when AVPlayer reacts to an interruption, you only need to observe a few properties, such as timeControlStatus.

For the full API, see Apple's docs: AVPlayer
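Observing timeControlStatus can be sketched with key-value observing. StreamPlayer and the onStatusChange callback are hypothetical names for this example, and the URL is whatever local or remote media your app plays.

```swift
import AVFoundation

/// Hypothetical wrapper that plays a URL and reports playback state changes.
final class StreamPlayer {
    private let player = AVPlayer()
    private var statusObservation: NSKeyValueObservation?

    func play(url: URL, onStatusChange: @escaping (AVPlayer.TimeControlStatus) -> Void) {
        // The item carries the media; the player drives playback.
        let item = AVPlayerItem(url: url)
        player.replaceCurrentItem(with: item)

        // timeControlStatus is .paused, .waitingToPlayAtSpecifiedRate or .playing.
        statusObservation = player.observe(\.timeControlStatus,
                                           options: [.initial, .new]) { player, _ in
            // KVO may fire off the main thread; hop to main before touching UI.
            DispatchQueue.main.async {
                onStatusChange(player.timeControlStatus)
            }
        }
        player.play()
    }

    func pause() {
        player.pause()
    }
}
```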

And then the time will come when you wonder:

- AVPlayer is so dominant, so what do we still use AVAudioPlayer for?

- AVPlayer plays both local files and files from a server, so why not use just that one?

In fact, AVPlayer and AVAudioPlayer are aimed at different uses, and their class structures reflect this:

- AVAudioPlayer is simpler and more convenient. It has handy features such as isPlaying, volume, … some of which AVPlayer does not offer directly; they can be built on top of it, but some need quite lengthy code.

- AVAudioPlayer is a leaner, easier-to-use audio version of AVPlayer, and to keep that simplicity it is limited to local files and buffers.

- If you stream files over a network connection, there are tons of cases to handle and things can no longer stay simple: you need AVPlayer.

- AVPlayer is the more complex audio player, and all of the extra features it provides exist to support building and processing streamed files over external connections.

And to answer the original question: yes, you can drop AVAudioPlayer completely and use only AVPlayer. However, that is a typical case of “using a buffalo knife to kill a chicken”. Your code becomes cumbersome and heavy, and the maintenance pain starts soon after.

AVAudioRecorder

Recording is a complex task and always has been. AVAudioRecorder was built to cut the boilerplate of setting up recording, so it does not support overwriting part of a recording, and writing audio-cutting code with AVAudioRecorder is a disaster.

Unfortunately, to deliver a decent, complete recording feature you will most often have to go down to the Core Audio layer and use Audio Queue Services or Audio Units.

For the basic features of AVAudioRecorder, refer to Apple’s docs: AVAudioRecorder
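For the simple case, a recording setup might look like this sketch. SimpleRecorder is a hypothetical class, the AAC settings are just one reasonable choice, and the app is assumed to have microphone permission (an NSMicrophoneUsageDescription entry in Info.plist and a granted request).

```swift
import AVFoundation

/// Hypothetical wrapper that records mono AAC audio to a file.
final class SimpleRecorder {
    private var recorder: AVAudioRecorder?

    func start(to fileURL: URL) throws {
        // Recording needs a category that enables input.
        let session = AVAudioSession.sharedInstance()
        try session.setCategory(.playAndRecord, mode: .default, options: [])
        try session.setActive(true)

        // Illustrative settings: compressed AAC, 44.1 kHz, one channel.
        let settings: [String: Any] = [
            AVFormatIDKey: Int(kAudioFormatMPEG4AAC),
            AVSampleRateKey: 44_100.0,
            AVNumberOfChannelsKey: 1,
            AVEncoderAudioQualityKey: AVAudioQuality.high.rawValue
        ]

        let recorder = try AVAudioRecorder(url: fileURL, settings: settings)
        recorder.isMeteringEnabled = true // allows reading levels for a simple meter
        recorder.record()
        self.recorder = recorder
    }

    func stop() {
        recorder?.stop()
        recorder = nil
    }
}
```

Note that this writes straight to the file: there is no hook for cutting or overwriting a region, which is the limitation described above.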

AVAudioEngine

This is a whole new horizon in the world of AVFoundation. Introduced in iOS 8, AVAudioEngine is an Objective-C API that mirrors the Audio Unit mechanism in Core Audio.

If you do not know how Audio Units work, think of AVAudioEngine as remix software. We can connect multiple nodes together, mix audio from one or more player nodes through the many effect nodes that ship with AVAudioEngine, then export a great remix.

AVAudioEngine is also well suited to recording, because recording with AVAudioEngine is not a closed box the way AVAudioRecorder is.

However, this is a new library, and in my experience it is full of bugs and sometimes crashes with very little provocation. Apple's docs do not give many detailed instructions on setting it up according to best practice; there are only some community contributions and a few tutorials.

Do you need more effects than just changing the play speed? Then perhaps you need to switch from AVAudioPlayer to AVAudioEngine. Are you looking to record with AVAudioEngine? Yes and no. Let's leave that for the next post!
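The node graph idea can be sketched with one player node and one effect node. EffectPlayer is a hypothetical class; it chains a player into an AVAudioUnitTimePitch effect and then into the engine's main mixer.

```swift
import AVFoundation

/// Hypothetical player: file -> player node -> time/pitch effect -> main mixer.
final class EffectPlayer {
    private let engine = AVAudioEngine()
    private let playerNode = AVAudioPlayerNode()
    private let timePitch = AVAudioUnitTimePitch()

    init() {
        // Nodes must be attached to the engine before they can be connected.
        engine.attach(playerNode)
        engine.attach(timePitch)
    }

    /// - Parameters:
    ///   - rate: playback speed, 1.0 is normal.
    ///   - pitchCents: pitch shift in cents, 0 is unchanged.
    func play(fileURL: URL, rate: Float, pitchCents: Float) throws {
        let file = try AVAudioFile(forReading: fileURL)
        let format = file.processingFormat

        // Wire the graph using the file's processing format.
        engine.connect(playerNode, to: timePitch, format: format)
        engine.connect(timePitch, to: engine.mainMixerNode, format: format)

        timePitch.rate = rate
        timePitch.pitch = pitchCents

        // Schedule the whole file to start as soon as the node plays.
        playerNode.scheduleFile(file, at: nil, completionHandler: nil)
        try engine.start()
        playerNode.play()
    }

    func stop() {
        playerNode.stop()
        engine.stop()
    }
}
```

More effect nodes can be inserted between the player and the mixer in the same way.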
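To show what "not closed" means in practice, here is a minimal sketch that taps the engine's input node and writes each buffer to a file. EngineRecorder is a hypothetical class, and the sketch assumes the audio session is already configured for recording and microphone permission has been granted.

```swift
import AVFoundation

/// Hypothetical recorder that receives raw PCM buffers from the microphone.
final class EngineRecorder {
    private let engine = AVAudioEngine()
    private var file: AVAudioFile?

    func start(to fileURL: URL) throws {
        let input = engine.inputNode
        let format = input.outputFormat(forBus: 0)

        // Write in the input's own format to avoid a conversion step.
        let file = try AVAudioFile(forWriting: fileURL, settings: format.settings)
        self.file = file

        // The tap hands us every captured buffer, so the app decides what to
        // do with it: write it, analyze it, or feed a waveform view.
        input.installTap(onBus: 0, bufferSize: 4096, format: format) { buffer, _ in
            try? file.write(from: buffer)
        }
        try engine.start()
    }

    func stop() {
        engine.inputNode.removeTap(onBus: 0)
        engine.stop()
        file = nil
    }
}
```

Because the app sees every buffer, features such as drawing the sound wave become possible, at the cost of managing more of the pipeline yourself.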

Conclusion

Part 1 ends here. In the next article I will walk through the implementation and share some advice from my own experience working with audio.