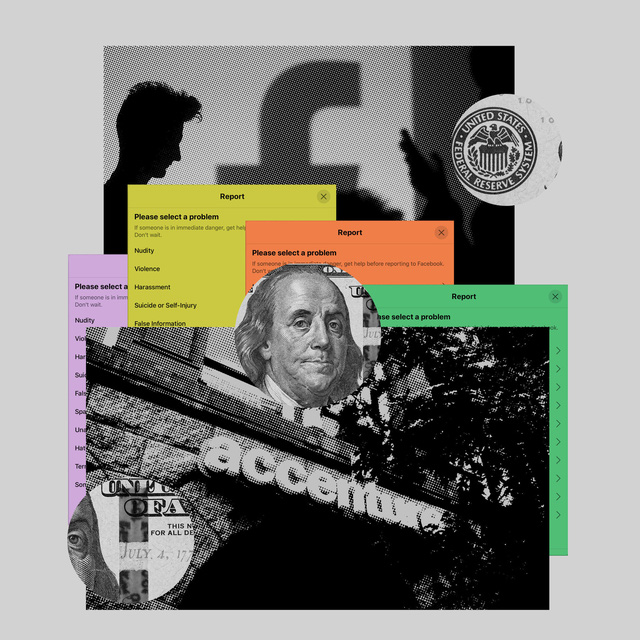

The company that quietly ‘cleans up trash’ on Facebook

- Tram Ho

Facebook and Accenture rarely talk about each other or even acknowledge each other as partners, but their secret relationship is central to efforts to keep the most harmful content out of the social network. For years, Facebook has been criticized for the hateful, violent content that pervades the platform. CEO Mark Zuckerberg repeatedly pledged to “clean up” Facebook with artificial intelligence and thousands of employees.

Behind the scenes, however, Facebook quietly pays others to take on most of the responsibility. Since 2012, the company has hired at least 10 different firms to do this work through a dense network of subcontractors. None is more important than Accenture. According to the New York Times, it is Facebook’s largest content moderation partner.

Early on, Facebook had agreed with Andrew M. Cuomo, then New York’s attorney general, to remove user-reported pornographic posts within 24 hours.

Facebook employees were quickly “flooded” by the huge workload. Chief operating officer Sheryl Sandberg and other leaders looked to other options, including outsourcing. It was cheaper than hiring staff directly and offered tax and legal benefits, as well as the flexibility to scale up and down in areas where the company had no office or lacked fluency in the local language.

In 2011, Facebook worked with oDesk, a service that hires freelancers, to review content. But in 2012, after the site Gawker reported that oDesk workers in Morocco and elsewhere were being paid as little as $1 an hour, Facebook looked for another partner.

Its next stop was Accenture. In 2010, Accenture had signed an accounting contract with Facebook. In 2012, the contract expanded to include content moderation, especially outside the US. That same year, Facebook sent people to Manila and Warsaw to train Accenture staff to filter posts. They learned how to use Facebook’s software and policies to leave posts up, remove them, or pass them on to others for review.

In 2015, Accenture created a group codenamed Honey Badger to serve Facebook exclusively. From 300 employees in 2015, the group grew to 3,000 in 2016, a mix of full-time and contract workers depending on location and duties. On the strength of its business with Facebook, the company signed other contracts with YouTube, Twitter, Pinterest and others. Facebook also gave Accenture additional tasks, such as checking for fake and duplicate user accounts and monitoring brand and celebrity accounts.

After US authorities discovered that Russia had used Facebook to spread information affecting the 2016 presidential election, the social network said it would increase the number of moderators by 3,000.

“If we want to build safe communities, we need to react quickly,” Zuckerberg wrote in 2017.

The following year, Facebook hired Arun Chandra, a former Hewlett Packard Enterprise executive, to take charge of relations with Accenture and other partners. His department reported to Sandberg. Facebook also expanded its roster of content moderation partners to include firms such as Cognizant and TaskUs.

Content moderation is challenging work, even though artificial intelligence now removes more than 90% of problematic posts on Facebook and Instagram. Moderators are scored on how accurately they review posts. If their error rate reaches about 5%, they can be fired.

At the end of each month, Accenture sends Facebook an invoice detailing the moderators’ working hours and the volume of content reviewed. Each US moderator brings in about $50 an hour for Accenture.

Psychological toll

Many Accenture employees began to question the impact of having to view so much hate speech. Accenture hired several psychological counselors to assist them.

Izabela Dziugiel, a psychologist who worked in the Warsaw office, says the employees there were not well prepared for content moderation. They had to process posts from the Middle East, including many photos and videos of the Syrian war. She quit her job in 2019.

In Dublin, a moderator left a suicide note on his desk in 2018. Fortunately, the employee was found safe.

Joshua Sklar, a moderator in Austin who quit his job in April, said he had to review between 500 and 700 posts per shift, including photos of people dying in car crashes and videos of animals being tortured. There was even a video of a man filming himself raping a little girl. “It’s disgusting,” Sklar wrote in a post.

Spencer Darr, who testified at a hearing in June, recounted the horror of the work. The job required him to make unimaginable decisions, such as whether to remove a video of a dog being skinned alive or merely mark it as offensive. “The job of a content moderator is impossible,” he said.

The partnership with Facebook also caused internal turmoil at Accenture. Following staff meetings and recommendations, Accenture made a number of changes. For example, in December 2019, the company notified moderators of the risks in a two-page document. The document stated that the work “could have a negative impact on your emotional or mental health”.

In October 2020, Accenture listed content moderation as a risk factor in its annual report for the first time, saying it could leave the company vulnerable to media scrutiny and legal trouble. However, Accenture still cannot walk away from Facebook, simply because Facebook is a “diamond customer”.

Source: Genk