Thanks to a new feature, OpenAI’s GPT-4 marks a turning point in the lives of blind people

- Tram Ho

Less than a month after its launch, GPT-4 has shown how powerful this AI model’s upgraded features can be. By leveraging GPT-4’s image recognition capabilities, a newly launched application can transform the daily lives of blind or visually impaired people.

Be My Eyes, a Danish startup, said it has applied the new AI model’s image recognition feature to assist blind and visually impaired people. Called “Virtual Volunteer”, the object-recognition app can answer questions about images sent to it.

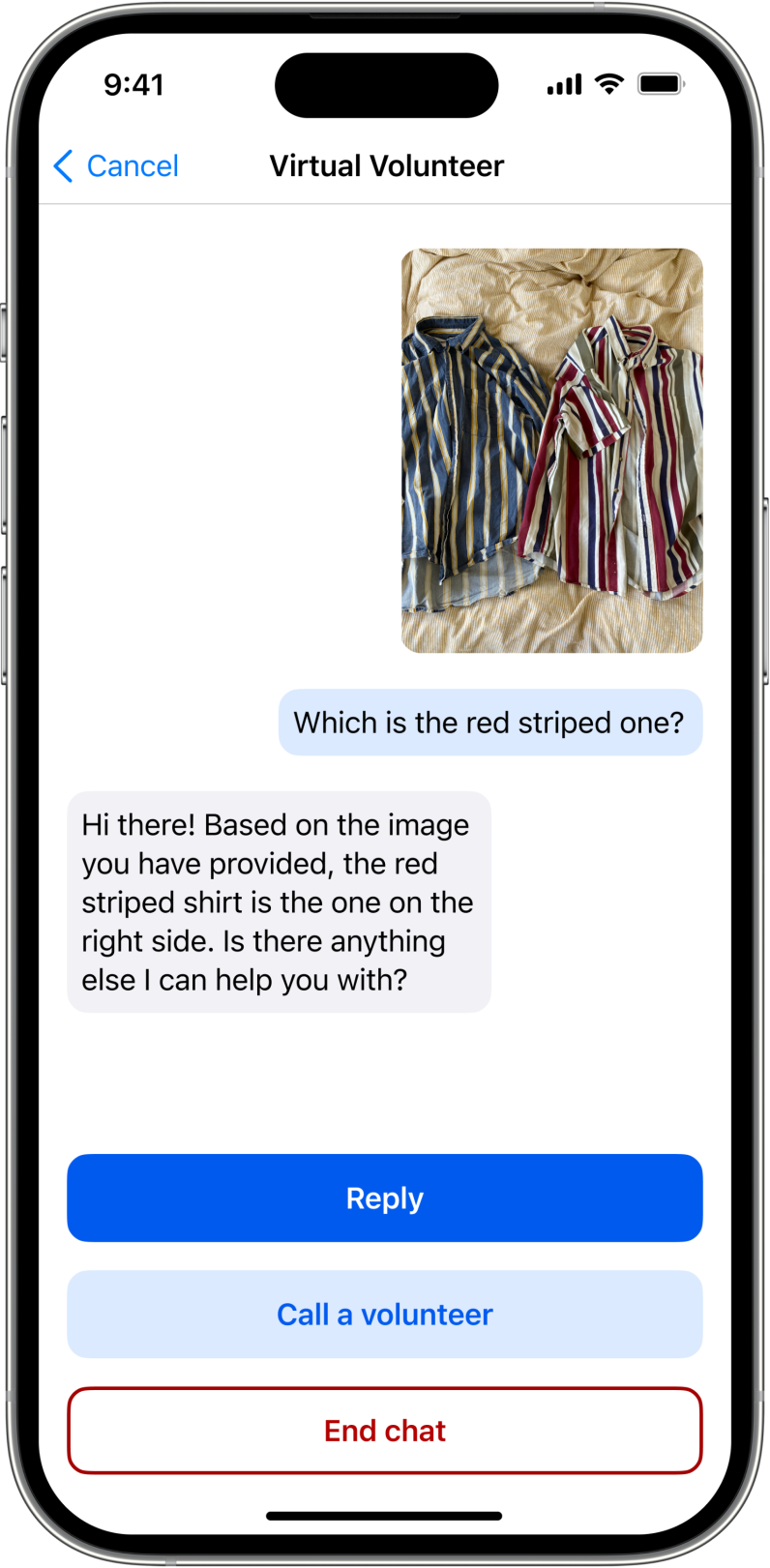

For example, imagine a hungry user. They simply take a picture of an ingredient and ask for recipes that use it. Or, to choose an outfit, a user can take a picture of their clothes and ask a question; the application identifies the items and provides the information requested.

Lucy Edwards is blind, but thanks to the Virtual Volunteer app with its built-in GPT-4 model, her life has completely changed.

If you want to go out to eat, just upload a picture of a map and the app will direct you to the restaurant. When you arrive, you can take a picture of the menu and hear the choices read out. And if you later want to burn off the calories at a gym, you can use your smartphone to find a suitable treadmill.

For sighted users, these tasks are easy, but for people who are blind or visually impaired, they are major obstacles in daily life. With the help of Virtual Volunteer, visually impaired users can overcome those challenges more easily.

The application is able to do this thanks to OpenAI’s new upgrade to GPT-4: the new AI model can analyze both image and text input. While this is not an entirely new feature, previous tools never convinced the startup that they could handle such images well.

Thanks to Virtual Volunteer, visually impaired users can choose a shirt color that suits their preferences. Photo: The Next Web

Mike Buckley, CEO of Be My Eyes, said: “Not only do the current tools on the market make too many errors and lack the ability to chat, they also aren’t equipped to address many of the community’s needs.”

“GPT-4’s image recognition capabilities are truly outstanding, and the chat and image analysis layers provided by OpenAI further increase its value and utility exponentially.”

Previously, Be My Eyes supported its users through the help of human volunteers. Now, OpenAI claims, the new GPT-4 feature can deliver equivalent results with the same level of context and understanding, providing faster, more efficient support without the need for a human volunteer. However, if the user doesn’t get the right answer or wants a human connection, the app can still connect them with a human volunteer.

While the initial results are promising, Buckley stressed that the free service will continue to be rolled out cautiously. Initial testers and the wider community will play a central role in shaping this process.

Reference: The Next Web

Source: Genk