As introduced, in this first part I would like to talk about the AI side of the system. This face recognition problem comes with a few requirements:

- Because a large number of people come in and out, there is no time to train a face recognition model for them.

- Each person's face data usually consists of only one or a few photos. Someone who visits only once has a single photo, and only people who come back many times will have more.

Therefore, my solution to this problem is to use a pretrained object detection model to locate faces. We then extract features from each face with a pretrained convolutional network. In simple terms, a person tells faces apart by looking for features such as a high nose or a mole somewhere; the computer does something similar, except that it works on the pixel values of the image. Finally, we use a search tree or search graph: for each new person, we add that person's feature vector to the graph so it can be searched later.
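The following is a minimal brute-force sketch of this enroll-then-search flow. It assumes the embeddings have already been computed. The class `BruteForceGallery` and its methods are hypothetical. A linear scan stands in for the search graph used later, and the 0.5 threshold is only illustrative.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length so distances are comparable across faces."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def squared_l2(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


class BruteForceGallery:
    """Hypothetical stand-in for the search graph: a flat list scanned linearly."""

    def __init__(self, threshold):
        self.threshold = threshold
        self.names = []
        self.vectors = []

    def enroll(self, name, embedding):
        # No training step: the embedding of a single photo is simply stored.
        self.names.append(name)
        self.vectors.append(l2_normalize(embedding))

    def identify(self, embedding):
        # Return the closest enrolled name, or None if nobody is close enough.
        if not self.vectors:
            return None
        query = l2_normalize(embedding)
        distances = [squared_l2(query, v) for v in self.vectors]
        best = min(range(len(distances)), key=distances.__getitem__)
        return self.names[best] if distances[best] < self.threshold else None


gallery = BruteForceGallery(threshold=0.5)
gallery.enroll("alice", [1.0, 0.0, 0.0])
gallery.enroll("bob", [0.0, 1.0, 0.0])
print(gallery.identify([0.9, 0.1, 0.0]))  # alice
print(gallery.identify([0.0, 0.0, 1.0]))  # None
```

A graph index such as hnswlib replaces the linear scan once the number of enrolled people grows.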

AI explanation section

Here, I would like to explain some of the AI theory I use. If you are only interested in the code, feel free to jump to the code section now; skipping this section will not affect the code.

Here, I use MTCNN. I know you'll be like: Ewww (insert meme here). Why not use FaceNet, CenterNet-ResNet50, or something more state-of-the-art? The reason is that when I looked for a pretrained library to build the system quickly, the PyTorch MTCNN repo was the first one that came up, and it conveniently supports pip install. CenterNet makes a mess of the Docker setup, and the FaceNet implementation I found uses MXNet.

Original paper: https://arxiv.org/pdf/1604.02878v1.pdf

MTCNN includes 3 networks:

- P-Net

- R-Net

- O-Net

The input image is resized to multiple sizes to form an image pyramid. Each level of the pyramid is then fed into P-Net:

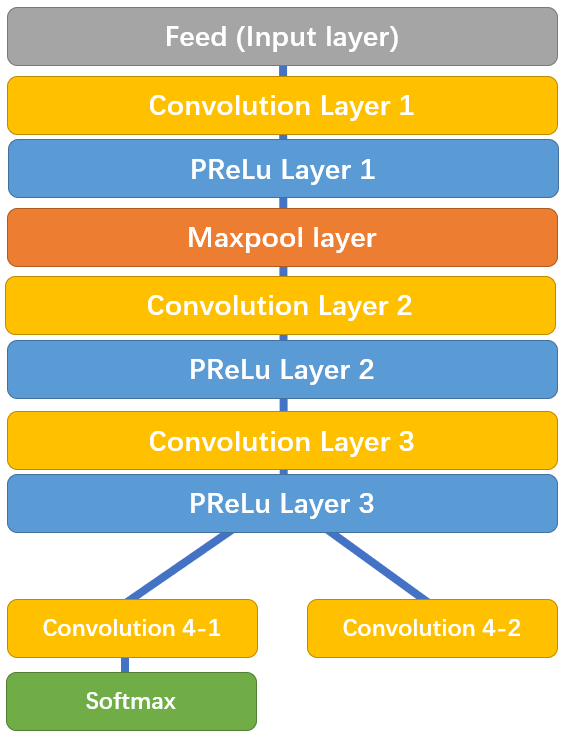

PNet architecture

As can be seen here, P-Net is an FCN (fully convolutional network). Its task is to propose image windows that may contain a face; it produces many candidates quickly, but not accurately. Its outputs are:

- Face classification, with shape (1x1x2).

- Bounding box regression, with shape (1x1x4).
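Because P-Net is fully convolutional, running it on a larger pyramid level gives a whole map of these 1x1 predictions. Each cell corresponds to one window of the input. The sketch below maps cells above a threshold back to boxes in the original image. It makes several assumptions:

- Each cell covers a 12x12 window.
- The stride is 2, coming from P-Net's single pooling layer.
- `prob_map` is a plain 2D list of face probabilities.
- `pnet_windows` is a hypothetical helper.

Reference implementations differ slightly in rounding.

```python
def pnet_windows(prob_map, scale, threshold, stride=2, cell_size=12):
    """Map positions in a P-Net face-probability map back to original-image boxes.

    prob_map: 2D list [row][col] of face probabilities for one pyramid level.
    scale: factor by which the original image was resized for this level.
    Returns (x1, y1, x2, y2, score) tuples in original-image pixels.
    """
    boxes = []
    for row, line in enumerate(prob_map):
        for col, score in enumerate(line):
            if score < threshold:
                continue
            # Each cell sees a cell_size x cell_size window; moving one cell
            # in the map moves the window by `stride` pixels in the resized image.
            x1 = stride * col / scale
            y1 = stride * row / scale
            x2 = (stride * col + cell_size) / scale
            y2 = (stride * row + cell_size) / scale
            boxes.append((x1, y1, x2, y2, score))
    return boxes


# A 2x2 map from a level resized by 0.5: two cells pass the 0.6 threshold.
print(pnet_windows([[0.1, 0.9], [0.7, 0.2]], scale=0.5, threshold=0.6))
```

Dividing by `scale` undoes the pyramid resize. This is why small faces are found on large levels and large faces on small levels.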

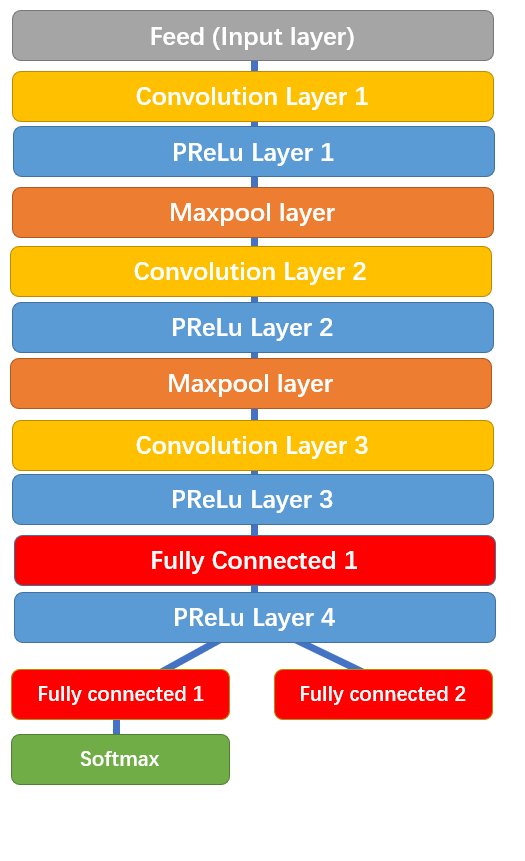

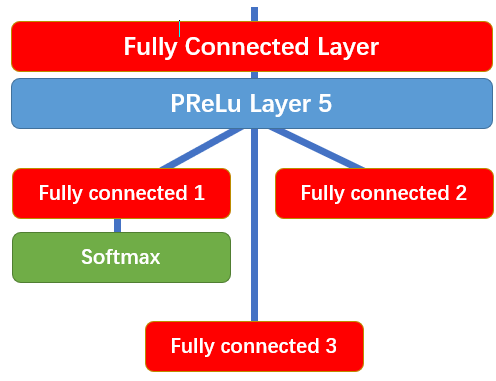

R-Net and O-Net networks have similar structures that differ only in depth and output. With R-Net input, bounding boxes from P-Net and O-Net input are bounding boxes from R-Net. Their task is to filter out bounding boxes more precisely by squeezing the depth of the model.

RNet architecture

ONet architecture
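Many overlapping candidates survive each stage. The MTCNN paper merges highly overlapping ones with non-maximum suppression (NMS). Below is a minimal greedy NMS sketch in plain Python with these assumptions:

- Boxes are `(x1, y1, x2, y2)` tuples.
- The helper names `iou` and `nms` are illustrative.
- This is not the library's own code, which runs on tensors.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold):
    """Greedy non-maximum suppression; returns the indices of the kept boxes."""
    # Visit boxes from most to least confident.
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap too much with any box kept so far.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
print(nms(boxes, [0.9, 0.8, 0.7], iou_threshold=0.5))  # [0, 2]
```

The second box overlaps the first with an IoU of about 0.68, so only the more confident one is kept.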

Both networks end with additional fully connected layers, which is why their outputs are flat vectors:

- Face classification, with shape (2).

- Bounding box regression, with shape (4).
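The 4 regression values are offsets used to refine a candidate box rather than absolute coordinates. This sketch assumes the common MTCNN convention: each offset is a fraction of the box width or height, added to the matching corner. `apply_bbox_regression` is a hypothetical helper, and implementations differ in small details such as a +1 in the width.

```python
def apply_bbox_regression(box, offsets):
    """Refine a candidate box with the 4 regression outputs of R-Net/O-Net.

    box: (x1, y1, x2, y2) in pixels.
    offsets: (dx1, dy1, dx2, dy2), each a fraction of the box width/height.
    """
    x1, y1, x2, y2 = box
    w, h = x2 - x1, y2 - y1
    dx1, dy1, dx2, dy2 = offsets
    # Shift each corner proportionally to the box size.
    return (x1 + dx1 * w, y1 + dy1 * h, x2 + dx2 * w, y2 + dy2 * h)


print(apply_bbox_regression((10, 20, 110, 220), (0.1, -0.05, 0.0, 0.05)))
```

Expressing offsets relative to the box size lets the same network output work for faces of any size.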

Code implementation part

You can git clone the repo and use it directly, or install it with pip (pip install facenet-pytorch). If you do not intend to modify the behavior of the layers in the repo, pip is more convenient because it spares you from configuring paths, … First, create a backend folder in the project folder, and create the following files in it:

- face_detector.py

- face_searcher.py

Feature extraction

In face_detector.py, we create a class responsible for locating faces and extracting their features with a convolutional network:

```python
from facenet_pytorch import MTCNN, InceptionResnetV1
import torch
from PIL import Image
import numpy as np
import os
import time


class FaceDetector(object):
    def __init__(self, image_size=160, keep_all=False):
        """
        mtcnn: face detector
        extractor: face feature extractor
        Args:
            image_size: face image size
            keep_all: detect all faces or a single face
        """
        self.keep_all = keep_all
        # self.device = torch.device(
        #     "cuda" if torch.cuda.is_available() else "cpu")
        self.device = 'cpu'
        print('Using ', self.device)
        t = time.time()
        self.mtcnn = MTCNN(
            image_size=image_size,
            min_face_size=20,
            thresholds=[0.6, 0.7, 0.7],
            factor=0.709,
            post_process=True,
            keep_all=keep_all,  # use the constructor argument instead of a hard-coded False
            device=self.device
        )
        # Face embedding network with weights pretrained on VGGFace2
        self.extractor = InceptionResnetV1(
            pretrained='vggface2').eval().to(self.device)
        print('Finished loading models in {} sec'.format(time.time() - t))
```

An explanation of a few parameters:

- MTCNN:

    - image_size: size, in pixels, of the cropped face image

    - min_face_size: minimum face size, in pixels of the original image, to search for

    - thresholds: detection confidence thresholds, an array of three values for the three networks

    - factor: scale factor between successive levels of the image pyramid

- InceptionResnetV1:

    - pretrained: which set of pretrained weights to load (here 'vggface2')

    - classify: if True, the network also runs the final logits layer and returns classification scores. We leave it False (the default) to get the face embedding instead.
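To see how min_face_size and factor together decide the pyramid, here is a sketch of the scale computation as I understand MTCNN implementations to do it:

- The first scale maps a face of min_face_size pixels onto P-Net's 12x12 window.
- Each further level multiplies the scale by factor.
- This stops once the shorter image side would drop below 12 pixels.

`pyramid_scales` is a hypothetical helper, not part of facenet_pytorch's public API.

```python
def pyramid_scales(height, width, min_face_size=20, factor=0.709, pnet_size=12):
    """Scales at which the image is resized before being fed to P-Net."""
    # First level: a min_face_size face becomes exactly pnet_size pixels.
    scale = pnet_size / min_face_size
    min_side = min(height, width) * scale
    scales = []
    # Shrink until the shorter side no longer fits one P-Net window.
    while min_side >= pnet_size:
        scales.append(scale)
        scale *= factor
        min_side *= factor
    return scales


print(len(pyramid_scales(160, 160)))                   # 7 levels
print(len(pyramid_scales(160, 160, min_face_size=40)))  # 5 levels
```

A smaller min_face_size or a factor closer to 1 produces more levels. That finds smaller faces but makes detection slower.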

Next comes the face detection code, a method of the FaceDetector class:

```python
    def detect(self, image, save_path=None):
        # boxes, _ = self.mtcnn.detect(image)
        # Calling the MTCNN object returns the cropped face(s) as a tensor
        faces = self.mtcnn(image, save_path=save_path)
        return faces
```

The function above returns the cropped face as a torch tensor, with shape (3, image_size, image_size) when keep_all=False, or None if no face is found.
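Because post_process=True, the pixel values in this tensor are standardized rather than raw 0-255 values. As far as I know, facenet_pytorch applies a fixed standardization of (x - 127.5) / 128. The sketch below reproduces that arithmetic on a plain list, together with a hypothetical inverse that is handy for visual debugging. Treat the exact constants as an assumption to check against the library source.

```python
def fixed_standardization(pixels):
    """Map 0..255 pixel values to roughly [-1, 1] via (x - 127.5) / 128."""
    return [(p - 127.5) / 128.0 for p in pixels]


def undo_standardization(values):
    """Hypothetical inverse: recover 0..255 pixel values for viewing."""
    return [v * 128.0 + 127.5 for v in values]


print(fixed_standardization([0, 127.5, 255]))  # [-0.99609375, 0.0, 0.99609375]
```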

```python
    def extract_feature(self, tensor):
        if not self.keep_all:
            # Single face: add a batch dimension, (3, H, W) -> (1, 3, H, W)
            tensor = tensor.unsqueeze(0)
        embeddings = self.extractor(tensor.to(self.device))
        embeddings = embeddings.detach().cpu().numpy()
        # Shape (512,) for a single face, (n, 512) when keep_all=True
        return embeddings if self.keep_all else np.squeeze(embeddings)
```

The function above returns the feature vector, with shape (512,) in our case. Next, we write functions to get the vector directly from an image and from a folder (when the server starts up, we need to load all the stored images back into the system).

```python
    def extract_face(self, image, save_path=None):
        try:
            faces = self.detect(image, save_path)
            embeddings = self.extract_feature(faces)
            return embeddings
        except Exception as err:
            # TODO: Logging here
            # e.g. no face found: detect() returns None and unsqueeze() fails
            print(err)
            return None

    def extract_face_from_folder(self, folder, save_prefix=None):
        # Use for single face per image. Modify save_path for multi face
        if not self.keep_all:
            all_embeddings = []
            for image_name in sorted(os.listdir(folder)):
                image_path = os.path.join(folder, image_name)
                print(image_path)
                # Load as a 3-channel RGB PIL image, which MTCNN accepts
                image = Image.open(image_path).convert('RGB')
                save_path = os.path.join(
                    save_prefix, image_name) if save_prefix is not None else None
                all_embeddings.append(self.extract_face(image, save_path))
            return np.array(all_embeddings)
```
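extract_face_from_folder passes every entry of os.listdir to the image loader. A stray non-image file, such as a hidden system file, or a subfolder would therefore cause a failure. Below is a sketch of a hypothetical helper, `list_images`, that keeps only image files and preserves the sorted order. It assumes the extension set shown, so adjust it to your data. The sorted order matters because the position of each image later becomes its index in the search graph.

```python
import os

# Assumption: the image formats stored on the server; adjust as needed.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".bmp"}


def list_images(folder, extensions=IMAGE_EXTENSIONS):
    """Return sorted paths of image files in folder, skipping subfolders and other files."""
    paths = []
    for name in sorted(os.listdir(folder)):
        path = os.path.join(folder, name)
        # Compare extensions case-insensitively, so "photo.PNG" is accepted.
        if os.path.isfile(path) and os.path.splitext(name)[1].lower() in extensions:
            paths.append(path)
    return paths
```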

Adding faces to the search graph

For this part I use hnswlib, for two reasons:

- It allows adding new items after the graph has been built. Libraries such as Annoy do not allow this.

- In published benchmarks, hnswlib beats many other libraries in both speed and recall.

We write a search class in face_searcher.py:

```python
import hnswlib
import numpy as np


class FaceSearcher(object):
    def __init__(self, dim=512, space='l2', threshold=0.5):
        """
        Args:
            dim: face embedding feature length
            space: distance metric ('l2', 'ip' or 'cosine')
            threshold: maximum distance accepted as a match
        """
        self.p = hnswlib.Index(space=space, dim=dim)
        self.p.init_index(max_elements=1000, ef_construction=200, M=48)
        self.p.set_ef(20)
        self.k = 1
        self.threshold = threshold

    def add_faces(self, data, index):
        try:
            if index.shape[0] != data.shape[0]:
                # TODO: Logging here
                print('Try to assign index with length {} to data with length {}'.format(
                    index.shape[0], data.shape[0]))
            else:
                self.p.add_items(data, index)
        except Exception as err:
            # TODO: Logging here
            print(err)

    def query_faces(self, data):
        try:
            # knn_query returns (labels, distances) of the k nearest items
            index, distance = self.p.knn_query(data, k=self.k)
            # Filter result
            index = np.squeeze(index)
            distance = np.squeeze(distance)
            print('Index: ', index, '\nDistance: ', distance)
            return index if distance < self.threshold else None
        except Exception as err:
            # TODO: Logging here
            print(err)
            return None
```
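hnswlib stores integer labels, so query_faces returns a number rather than a person. A small lookup table turns that label back into a name. The sketch below is a hypothetical helper, `LabelRegistry`, that hands out consecutive integer labels. These labels could be collected into the `index` array passed to add_faces. It assumes labels start at 0 and are never reused.

```python
class LabelRegistry:
    """Hypothetical helper: hnswlib stores integer labels, so keep a label -> name table."""

    def __init__(self):
        self._names = []

    def register(self, name):
        """Assign the next integer label to a person and return it."""
        self._names.append(name)
        return len(self._names) - 1

    def name_of(self, label):
        """Translate a label returned by query_faces back to a name (None stays None)."""
        if label is None:
            return None
        # int() also accepts the 0-d numpy array produced by np.squeeze
        return self._names[int(label)]


registry = LabelRegistry()
labels = [registry.register(n) for n in ["alice", "bob"]]
print(labels, registry.name_of(1))  # [0, 1] bob
```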

The parameters of hnswlib.Index are:

- space: the distance metric, one of 'l2' (squared L2), 'ip' (inner product), or 'cosine' (cosine similarity)

- dim: the length of the vectors, 512 here

For the other parameters, such as ef, M, …, see the hnswlib documentation.
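The threshold in query_faces is compared against whatever distance the chosen space returns. The sketch below writes out the three distances as the hnswlib README defines them; the function names are illustrative. As far as I know, facenet_pytorch's InceptionResnetV1 returns L2-normalized embeddings. For unit vectors, squared L2 equals twice the cosine distance, so the default threshold of 0.5 in 'l2' space corresponds to a cosine similarity of at least 0.75.

```python
import math


def hnsw_l2(a, b):
    """'l2' space: squared Euclidean distance (no square root is taken)."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def hnsw_ip(a, b):
    """'ip' space: 1 minus the inner product."""
    return 1.0 - sum(x * y for x, y in zip(a, b))


def hnsw_cosine(a, b):
    """'cosine' space: 1 minus the cosine similarity."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


a, b = [0.6, 0.8], [1.0, 0.0]  # two unit vectors
print(hnsw_l2(a, b), hnsw_ip(a, b), hnsw_cosine(a, b))
```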

In the next section, we will build the server with Flask.