What is Latent Space?

Summarized in one simple definition, latent space is a representation of compressed data. What kind of data it represents depends on the problem.

Imagine you have a large data set of handwritten digits (0-9) like the image above. How can we create an algorithm that compares these images and finds the ones that show the same digit?

In deep learning, we can treat this data set as a classification problem. Training a model on it means the model learns the structural similarities between images during training. In fact, this is how the model is able to classify digits in the first place: while the neural network is trained, it learns features for each digit.

So what does all of the above have to do with latent space?

Answer: the concept of latent space is central to deep learning. During training, a model learns features of the data and builds simplified representations of it in order to find patterns.

So what does latent space actually mean?

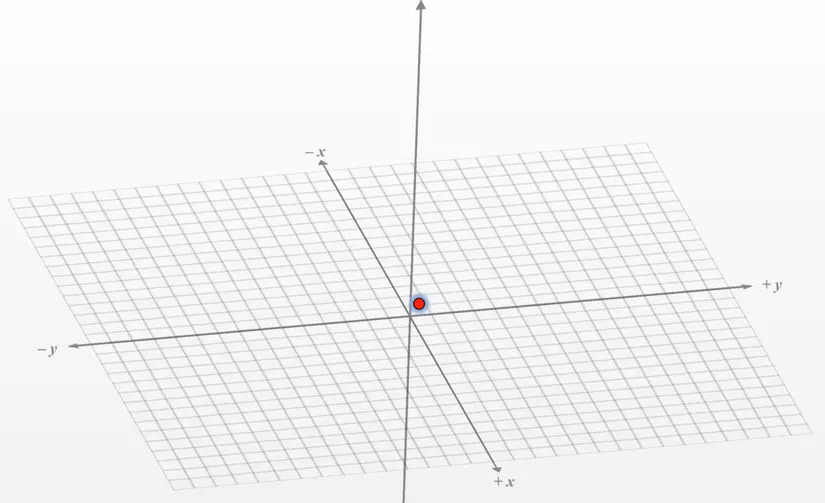

In this fairly simple example, let’s say each image in our original data set is 5 x 5 x 1. We set the latent space size to 3 x 1, which means each compressed data point is a vector with 3 dimensions.

Now every 5 x 5 x 1 image is compressed into a data point represented by just 3 numbers. That means we can plot the data in 3D space: the first number is the x coordinate, the second is y, and the third is z.
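The shapes can be made concrete with a minimal Python sketch. It assumes a purely hypothetical linear “encoder” with random weights; `WEIGHTS`, `flatten`, and `encode` are illustrative names, not from any library. A trained model would learn these weights. The only point here is that 25 pixel values go in and 3 coordinates come out.

```python
import random

# Hypothetical "encoder": a fixed linear map from 25 pixel values to 3 numbers.
# A real model would learn these weights during training; here they are random,
# purely to show how the shapes line up.
rng = random.Random(0)
LATENT_DIM = 3
WEIGHTS = [[rng.gauss(0.0, 0.1) for _ in range(25)] for _ in range(LATENT_DIM)]


def flatten(image):
    """Flatten a 5 x 5 x 1 image (nested lists) into a list of 25 values."""
    return [channel for row in image for pixel in row for channel in pixel]


def encode(image):
    """Map a 5 x 5 x 1 image to a 3-dimensional latent vector (x, y, z)."""
    pixels = flatten(image)
    return [sum(w * p for w, p in zip(row, pixels)) for row in WEIGHTS]


# A toy 5 x 5 x 1 image: a vertical bar in the middle column.
image = [[[1.0] if col == 2 else [0.0] for col in range(5)] for _ in range(5)]
z = encode(image)
print(len(flatten(image)), "->", len(z))
```

The three values in `z` are the coordinates you would plot in 3D.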

Whenever we plot points in the latent space, or simply think about them, we can imagine them as coordinates in a space where “similar” points lie closer together.
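Closeness in a latent space is commonly measured with a distance such as the Euclidean distance. The sketch below assumes a few hypothetical 3-D latent points for encoded digit images; the values are made up for illustration. It finds the stored point nearest to a new one.

```python
import math


def distance(a, b):
    """Euclidean distance between two latent vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def nearest(query, points):
    """Return the label of the stored latent point closest to `query`."""
    return min(points, key=lambda label: distance(query, points[label]))


# Hypothetical 3-D latent points for a few encoded digit images (made-up values).
points = {
    "digit 1 (sample A)": [0.10, 0.90, 0.20],
    "digit 1 (sample B)": [0.12, 0.85, 0.25],
    "digit 8": [0.80, 0.10, 0.70],
}
query = [0.11, 0.88, 0.22]  # latent point of a new, unlabeled image
print(nearest(query, points))
```

With these made-up values, the query lands next to the first “digit 1” sample. The “digit 8” point is far away from both “digit 1” samples.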

But you may be wondering, what are “similar” images and why does reducing the size of the data make similar images “closer together” in space?

If we look at the three images above (two chairs of different colors and a table), we would readily say that the two chair images are the most similar, while the table is the most different in shape.

But what makes the two chairs “alike”? Naturally, we look at relevant features such as angles and the characteristics of their shapes. As explained above, each image is compressed into a data point in the latent space, and features like these are what that point’s coordinates capture. Irrelevant information, such as the color of the seat, is ignored when the image is represented in the latent space. In other words, that information is removed.

As a result, when we reduce the dimensionality, the images of the two chairs become less different and more similar. As described above, each image is compressed into a data point in the latent space that keeps only as many dimensions as are needed to represent it. If we imagine the two chairs in that space, they will be “closer together”.
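The effect of discarding an irrelevant feature can be shown with a toy example. The sketch uses hand-picked, hypothetical features (back height, seat height, top area, and seat hue) rather than learned ones. Its “encoder” simply drops the hue. A real model learns on its own which information to discard.

```python
import math

# Hypothetical hand-picked features for each image:
# (back_height, seat_height, top_area, hue). Hue is the seat color on a 0-1
# color wheel (0.0 = red, about 0.66 = blue). All values are illustrative.
FEATURES = ["back_height", "seat_height", "top_area", "hue"]
images = {
    "red chair": [0.90, 0.45, 0.20, 0.00],
    "blue chair": [0.85, 0.50, 0.25, 0.66],
    "table": [0.00, 0.75, 0.90, 0.05],
}


def distance(a, b):
    """Euclidean distance between two feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def encode(features):
    """Toy 'encoder' that keeps only the shape features and drops the hue."""
    keep = [i for i, name in enumerate(FEATURES) if name != "hue"]
    return [features[i] for i in keep]


latent = {name: encode(f) for name, f in images.items()}
before = distance(images["red chair"], images["blue chair"])
after = distance(latent["red chair"], latent["blue chair"])
print(f"chair-to-chair distance: {before:.3f} before, {after:.3f} after")
```

Once the color is dropped, the two chairs differ only in small shape details, so their distance shrinks sharply. The table stays far away.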

Application of Latent Space

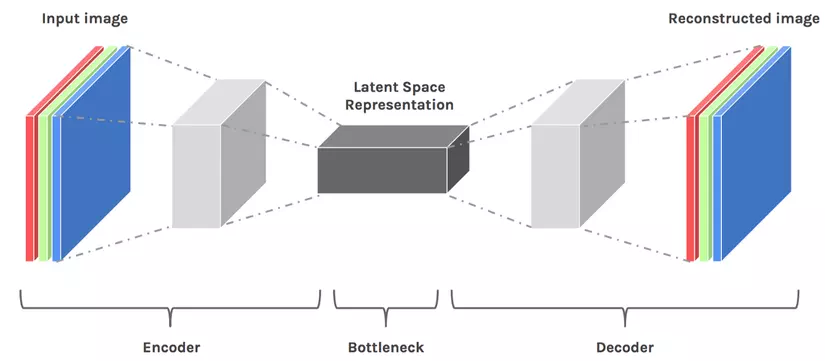

Autoencoder

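An autoencoder is a neural network that learns to act as an identity function: it takes an input and tries to reproduce that same input as its output.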

When we train the model to act as an “identity function”, the data must pass through a narrow middle layer. This forces the model to store all the relevant features of the input in a compressed representation that still holds enough information to reconstruct the input as accurately as possible. That compressed representation is the latent space, as you can see in the image above.
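Here is a minimal sketch of this idea: a tiny linear autoencoder written in plain Python. Real autoencoders are usually deeper, nonlinear networks built with a deep learning framework. The class, helper, and data here are hypothetical. The encoder squeezes 4 input values into a 2-value latent vector, the decoder tries to rebuild the input, and gradient descent minimizes the reconstruction error.

```python
import random


def matvec(m, v):
    """Multiply matrix m (a list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


class LinearAutoencoder:
    """Toy linear autoencoder: n_in inputs -> n_latent values -> n_in outputs."""

    def __init__(self, n_in=4, n_latent=2, seed=0):
        rng = random.Random(seed)
        # Encoder weights (n_latent x n_in) and decoder weights (n_in x n_latent).
        self.enc = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_latent)]
        self.dec = [[rng.uniform(-0.5, 0.5) for _ in range(n_latent)] for _ in range(n_in)]

    def encode(self, x):
        return matvec(self.enc, x)  # the latent (compressed) representation

    def decode(self, z):
        return matvec(self.dec, z)  # reconstruction of the input

    def loss(self, data):
        """Mean squared reconstruction error over the data set."""
        total = 0.0
        for x in data:
            x_hat = self.decode(self.encode(x))
            total += sum((p - t) ** 2 for p, t in zip(x_hat, x))
        return total / len(data)

    def train_step(self, data, lr=0.05):
        """One full-batch gradient-descent step on the reconstruction loss."""
        g_enc = [[0.0] * len(row) for row in self.enc]
        g_dec = [[0.0] * len(row) for row in self.dec]
        for x in data:
            z = self.encode(x)
            r = [p - t for p, t in zip(self.decode(z), x)]  # reconstruction error
            for i, ri in enumerate(r):  # gradient w.r.t. decoder weights
                for j, zj in enumerate(z):
                    g_dec[i][j] += 2 * ri * zj
            for j in range(len(z)):  # back-propagate through the decoder into the encoder
                dz = sum(2 * r[i] * self.dec[i][j] for i in range(len(r)))
                for k, xk in enumerate(x):
                    g_enc[j][k] += dz * xk
        for weights, grads in ((self.enc, g_enc), (self.dec, g_dec)):
            for row, g_row in zip(weights, grads):
                for k in range(len(row)):
                    row[k] -= lr * g_row[k] / len(data)


# Illustrative data: 4-D points that really have only 2 degrees of freedom (a, b),
# so a 2-D latent space is enough to reconstruct them.
rng = random.Random(1)
data = []
for _ in range(20):
    a, b = rng.uniform(-1, 1), rng.uniform(-1, 1)
    data.append([a, b, a + b, a - b])

ae = LinearAutoencoder()
initial_loss = ae.loss(data)
for _ in range(1000):
    ae.train_step(data)
final_loss = ae.loss(data)
print(f"loss: {initial_loss:.4f} -> {final_loss:.4f}")
print("latent vector of first sample:", ae.encode(data[0]))
```

The toy data has only two degrees of freedom, so a 2-D bottleneck is enough and the reconstruction loss should drop during training. After training, `ae.encode(x)` gives the latent vector for any input `x`.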

Interpolating in latent space

Suppose we “compress” the two chair images and the table image above into three two-dimensional vectors: [0.4, 0.5] and [0.45, 0.45] for the chairs, and [0.6, 0.75] for the table. Interpolating in the latent space means taking samples that lie between the “chair” cluster and the “table” cluster. If we feed these samples into the decoder, we get new images whose shapes lie somewhere between a chair and a table.
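Linear interpolation between two latent vectors can be sketched as follows. The sketch takes one chair as [0.4, 0.5] and the table as [0.6, 0.75], the values used in the paragraph above. The decoder is not implemented here; passing each sample to a trained decoder is left as a comment.

```python
def lerp(a, b, t):
    """Linear interpolation between latent vectors: t=0 gives a, t=1 gives b."""
    return [(1 - t) * x + t * y for x, y in zip(a, b)]


chair = [0.4, 0.5]   # latent vector of one chair
table = [0.6, 0.75]  # latent vector of the table

steps = 5
samples = [lerp(chair, table, i / (steps - 1)) for i in range(steps)]
for z in samples:
    print([round(v, 4) for v in z])
    # In a real model, each z would now be passed to a trained decoder
    # to produce an image somewhere between a chair and a table.
```

The middle sample, [0.5, 0.625], sits halfway between the chair and the table in the latent space.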

GAN latent space

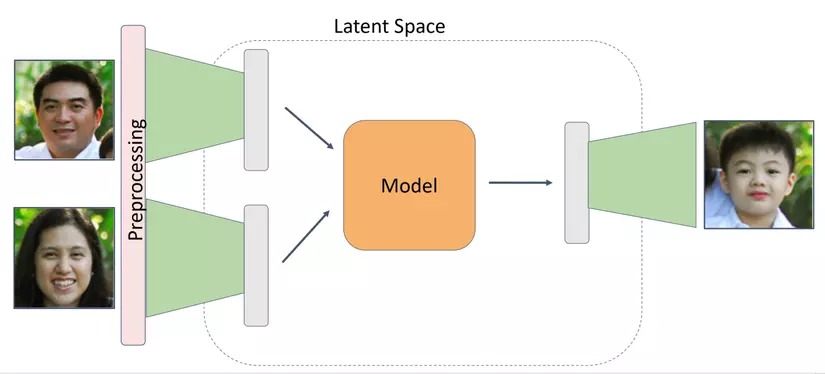

Latent spaces are heavily used in GAN (Generative Adversarial Network) problems, where the generator produces new outputs that combine features learned from the input data.

A relatively new idea is to go in the opposite direction: start from an image and find the point in the latent space that generates an image like it. We cannot see exactly how the latent space works internally, because it “hides” the process. We can, however, still manipulate it from the outside.

As in the image above, we start with a random noise vector, which the generator (StyleGAN) maps to some random face. We then compute the difference between the generated image and the original image and back-propagate it to update the latent vector, repeating this over and over. The goal is to find a latent vector whose generated image is as close to the original image as possible. So what is the purpose of finding a latent vector that represents the original image?
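The optimization loop described above can be sketched in plain Python: start from noise, compare the generated image with the target, back-propagate, and repeat. This is a toy with several assumptions. The “generator” is a hypothetical fixed random matrix followed by tanh, not a real pretrained GAN. The gradient is derived by hand. Only the latent vector is updated, while the generator stays frozen. `W`, `generate`, `loss`, and `invert` are illustrative names.

```python
import math
import random

rng = random.Random(0)
LATENT_DIM, IMAGE_DIM = 3, 6
# Hypothetical frozen "generator" weights; a real GAN generator is a deep network.
W = [[rng.gauss(0, 1) for _ in range(LATENT_DIM)] for _ in range(IMAGE_DIM)]


def generate(z):
    """Toy generator: maps a latent vector to a 6-value 'image' in (-1, 1)."""
    return [math.tanh(sum(w * zj for w, zj in zip(row, z))) for row in W]


def loss(z, target):
    """Squared difference between the generated image and the target image."""
    return sum((g - t) ** 2 for g, t in zip(generate(z), target))


def invert(target, steps=2000, lr=0.01, seed=1):
    """Search for a latent vector whose generated image is close to `target`."""
    r = random.Random(seed)
    z = [r.gauss(0, 1) for _ in range(LATENT_DIM)]  # start from random noise
    for _ in range(steps):
        g = generate(z)
        # Gradient of the squared error w.r.t. z (chain rule through tanh and W).
        grad = [
            sum(2 * (g[i] - target[i]) * (1 - g[i] ** 2) * W[i][j] for i in range(IMAGE_DIM))
            for j in range(LATENT_DIM)
        ]
        z = [zj - lr * gj for zj, gj in zip(z, grad)]  # only z changes; W stays fixed
    return z


# The "original image", produced here from a known latent vector so the result can be checked.
true_z = [0.5, -0.3, 0.8]
target = generate(true_z)
start = [random.Random(1).gauss(0, 1) for _ in range(LATENT_DIM)]  # same noise invert() starts from
z_found = invert(target)
print(f"loss at random start: {loss(start, target):.4f}")
print(f"loss after inversion: {loss(z_found, target):.4f}")
```

In practice, a deep learning framework computes these gradients automatically. The structure stays the same: the generator's weights are frozen and only the latent vector is optimized.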

A typical example is FamilyGAN, which combines the faces of two parents to generate a child’s face. We use the method above to find the latent vector of each parent, then interpolate between those two vectors in the latent space to create the child’s face.

In this article, I have explained the concept of latent space and some of its applications. If anything is unclear or incorrect, please leave a comment. Thank you for reading!

Reference

1 – https://towardsdatascience.com/understanding-latent-space-in-machine-learning-de5a7c687d8d

2 – https://medium.com/swlh/familygan-generating-a-childs-face-using-his-parents-394d8face6a4