What is a container?

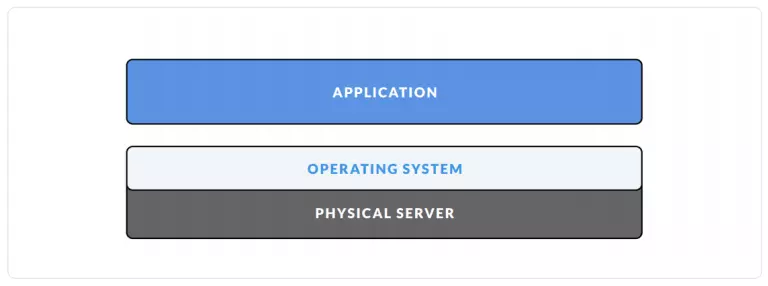

In the past, a server deployment typically consisted of three layers:

- Physical server

- Operating system

- Application

The problem is that each server runs only one operating system and one application, so it cannot make full use of the hardware’s capacity.

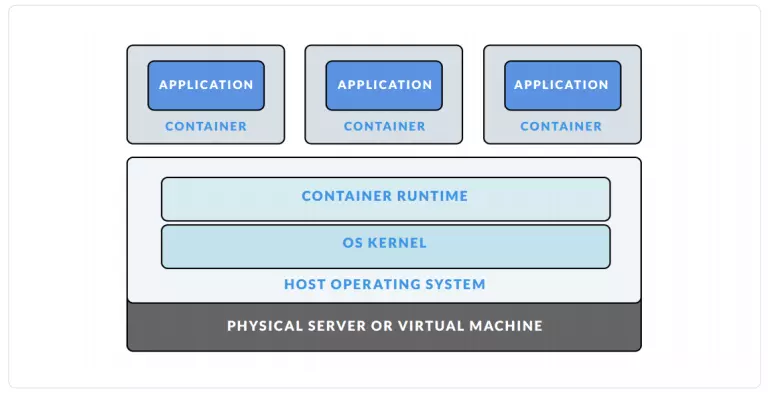

Later, containerization (a form of operating-system-level virtualization) appeared. It makes it possible to create multiple isolated sub-servers on one physical server that share the host kernel and the server’s resources, which both improves hardware utilization and saves administration time.

A container is a software unit that packages an application together with its source code, settings, libraries, and other dependencies into a single object. The packaged application runs quickly and reliably across different computing environments. The container provides everything the program needs to run, so it is not affected by the host system’s environment and does not interfere with the host’s storage either.

To create containers, Docker relies on two features of the Linux kernel:

- Namespaces: isolate system resources so that processes in different namespaces “see” different sets of resources (for example process IDs, network interfaces, and mount points).

- Control groups (cgroups): limit the resources each application can use, allowing Docker Engine to allocate hardware resources such as CPU and memory to each container and enforce constraints.
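Namespaces and cgroups are configured inside the kernel (Docker sets them up for each container through its runtime), so they cannot be demonstrated portably in a few lines. The toy Python model below only illustrates the two ideas: each PID namespace numbers its processes from 1 while the host sees different, global PIDs, and a cgroup-style limit caps how much memory a group may use. The class names and the starting host PID are hypothetical, and a real kernel would reclaim memory or invoke the OOM killer rather than raise an error.

```python
import itertools


class PidNamespace:
    """Toy PID namespace: maps container-local PIDs to host PIDs."""

    # Hypothetical host-wide PID counter shared by every namespace.
    _host_pids = itertools.count(1000)

    def __init__(self):
        self._local_pids = itertools.count(1)  # each namespace numbers its processes from 1
        self.local_to_host = {}

    def spawn(self):
        local = next(self._local_pids)
        host = next(PidNamespace._host_pids)
        self.local_to_host[local] = host
        return local, host


class MemoryCgroup:
    """Toy cgroup that caps how much memory its processes may use."""

    def __init__(self, limit_mb):
        self.limit_mb = limit_mb
        self.used_mb = 0

    def allocate(self, mb):
        if self.used_mb + mb > self.limit_mb:
            # The real kernel would reclaim memory or invoke the OOM killer here.
            raise MemoryError(f"cgroup limit of {self.limit_mb} MB exceeded")
        self.used_mb += mb


web, db = PidNamespace(), PidNamespace()
print(web.spawn())  # (1, 1000): PID 1 inside "web"
print(db.spawn())   # (1, 1001): also PID 1 inside "db", but a different host PID

cg = MemoryCgroup(limit_mb=512)
cg.allocate(300)
try:
    cg.allocate(300)  # 600 MB in total would exceed the 512 MB cap
except MemoryError as err:
    print(err)
```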

Processes in a container are isolated from processes of other containers in the same system. However, all of these containers share the kernel of the host OS.

Advantages:

- Flexibility: easy to deploy in many different environments, because the application no longer depends on the underlying OS layer or infrastructure.

- Space saving: containers are built from shared images, so common layers are stored only once, saving storage space.

- Homogeneity: the environment is identical wherever the container is deployed, which keeps everyone’s setup consistent when working in a team.

- Fast: because containers share the host OS kernel, they can be created almost instantly and boot much faster.

Container vs Virtual Machine

In the early days, the usual server model was one physical server running one operating system and one application.

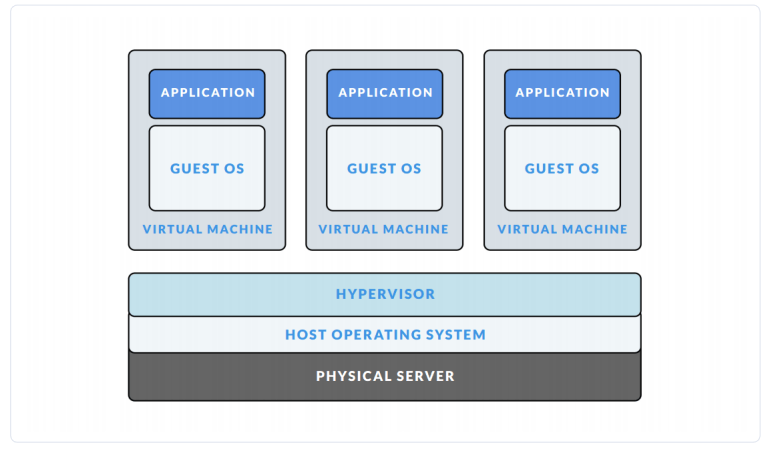

However, as hardware capacity kept increasing, this model wasted resources because the system could not use everything the hardware offered. It was also hard to scale: expanding meant renting more servers, configuring them, and so on. This is why virtualization technology was born.

Virtualization is a technology that creates an intermediate layer between a computer’s hardware and the software running on it. Much like partitioning a disk, it divides one physical server into multiple logical servers. A single physical server can thus host multiple virtual machines that run independently of each other, each with its own operating system and applications.

Virtualization made it possible to divide one computer into multiple virtual machines, creating separate environments for running many applications and pushing hardware utilization higher. However, it also raised new problems:

- Resource consumption: each running virtual machine always reserves a fixed share of resources. Say you have a physical server with a 512GB SSD and 16GB of RAM. If you create 2 virtual machines, each with a 128GB SSD and 4GB of RAM, the physical server loses 256GB of SSD and 8GB of RAM as soon as the virtual machines are turned on, even if they are doing nothing.

- Slow: booting, restarting, and shutting down virtual machines takes a long time, usually a few minutes.

- Cumbersome: the server cannot run at full performance when it has to carry the load of a whole group of virtual machines, and such a setup is also hard to manage and scale.
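The resource-consumption example can be checked with a few lines of arithmetic. This assumes, as the example does, that each VM’s disk and memory are reserved up front when it starts (no thin provisioning or memory overcommit).

```python
# Figures from the example: one physical server hosting two identical VMs.
HOST_SSD_GB, HOST_RAM_GB = 512, 16
VM_SSD_GB, VM_RAM_GB = 128, 4
NUM_VMS = 2

reserved_ssd = NUM_VMS * VM_SSD_GB  # 2 * 128 = 256 GB
reserved_ram = NUM_VMS * VM_RAM_GB  # 2 * 4 = 8 GB

print(f"Reserved by idle VMs: {reserved_ssd} GB SSD, {reserved_ram} GB RAM")
print(f"Left for everything else: {HOST_SSD_GB - reserved_ssd} GB SSD, "
      f"{HOST_RAM_GB - reserved_ram} GB RAM")
```

Half of both the disk and the memory is gone before either VM runs any workload, which is the waste containers avoid by sharing the host’s resources dynamically.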

Containers were invented to solve these problems.

As mentioned in the previous section, this technology lets a single physical server create and run multiple containers, much like VMs. The key difference is that containers share the same kernel and share the physical server’s resources (CPU, RAM, …), so resources are used more efficiently and flexibly instead of being locked into fixed allocations as with VMs.

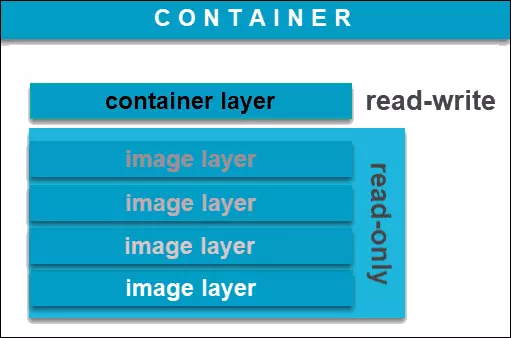

In addition, splitting containers into layers makes resource management more efficient.

Specifically, a new container is built on top of read-only image layers. Each container adds one extra read-write layer, and every change made inside the container is written only to that layer. So from just a few base images we can create many different containers while saving storage space.

With virtual machines, by contrast, each VM’s operating system and dependent applications take up a considerable amount of space.
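The container layering described above can be sketched with Python’s `collections.ChainMap`, which looks up keys through a list of mappings but writes only to the first one. Here each mapping stands in for a layer (file path to contents), and the image layers are wrapped in `MappingProxyType` so they are read-only. This is only an analogy with hypothetical file contents: real Docker storage drivers such as overlay2 work at the filesystem level and record deletions with whiteout files, which this sketch does not model.

```python
from collections import ChainMap
from types import MappingProxyType

# Read-only image layers (hypothetical file contents), top layer first.
app_layer = MappingProxyType({"/app/main.py": "print('hello')"})
base_layer = MappingProxyType({"/etc/os-release": "Ubuntu 18.04",
                               "/etc/motd": "welcome"})


def new_container():
    """Give each container its own empty read-write layer on top of the shared image."""
    return ChainMap({}, app_layer, base_layer)


c1 = new_container()
c2 = new_container()

c1["/etc/motd"] = "changed in c1"  # copy-on-write: stored only in c1's writable layer
c1["/tmp/log.txt"] = "c1 log"

print(c1["/etc/motd"])          # changed in c1
print(c2["/etc/motd"])          # welcome (c2 still sees the image version)
print(base_layer["/etc/motd"])  # welcome (the image layer is untouched)
print(c1.maps[0])               # only c1's changes live in its writable layer
```

Both containers share the same two image layers in memory; only the small per-container dictionaries differ, which is the storage saving the text describes.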

Birth of Docker

Docker is an open source platform that gives users the tools to package, ship, and run containers simply and easily on different platforms, following the motto “Build once, run anywhere”. Docker implements virtualization at the operating system level. Containers are isolated from each other, but they all share some binaries/libraries and the kernel of the host OS. Docker works on many platforms such as Linux, Microsoft Windows, and Apple macOS (OS X), and it can also be used on popular cloud services such as Microsoft Azure and Amazon Web Services.

Common terms in Docker

Docker Image

A Docker image is a read-only template used to create containers. An image is composed of layers, all of which are read-only. An image can be based on another image with some additional customization. In short, a Docker image stores the environment setup: OS, packages, software to run, …

From a programming perspective, a Docker image is like a class with methods and properties, and containers are instances (objects) of that class. So from one image we can create many containers with identical internal environments.
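The analogy can be made literal in Python. In this sketch, which illustrates the analogy only and is not how Docker stores images, a frozen dataclass plays the read-only image and ordinary instances play containers; `Image`, `Container`, and their fields are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Image:
    """Read-only template: like a Docker image, it cannot be modified once built."""
    os: str
    packages: tuple


@dataclass
class Container:
    """An instance created from an image, with its own mutable state."""
    image: Image
    name: str
    files: dict = field(default_factory=dict)  # this container's own changes


ubuntu_tools = Image(os="Ubuntu 18.04", packages=("git", "curl", "vim", "nano"))

a = Container(ubuntu_tools, "a")
b = Container(ubuntu_tools, "b")
a.files["notes.txt"] = "only in a"

print(a.image == b.image)  # True: both start from the same environment
print(b.files)             # {}: a's changes do not leak into b
```

Assigning to `ubuntu_tools.os` would raise `dataclasses.FrozenInstanceError`, mirroring the fact that containers change their own layer rather than the image.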

Dockerfile

A Dockerfile is a text file with no extension that contains the instructions describing a software environment and the structure of a Docker image. Docker executes those instructions to build the image (typically anywhere from a few MB to a few GB in size).
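As an illustration, the Python sketch below renders a minimal Dockerfile for an environment like the Ubuntu 18.04 example in this article. `FROM`, `RUN`, `WORKDIR`, `COPY`, and `CMD` are standard Dockerfile instructions; the `/app` directory and the `run.sh` entry script are hypothetical. Saved as a file named `Dockerfile`, the text could be built with `docker build -t my-image .` (the image name is also an assumption).

```python
# Environment from the article's example: Ubuntu 18.04 plus a few common tools.
BASE_IMAGE = "ubuntu:18.04"
PACKAGES = ["git", "curl", "vim", "nano"]


def render_dockerfile(base, packages, app_dir="/app", entrypoint="./run.sh"):
    """Return Dockerfile text; app_dir and entrypoint (run.sh) are hypothetical."""
    return "\n".join([
        f"FROM {base}",
        # One RUN instruction produces one image layer; cleaning the apt cache keeps it small.
        "RUN apt-get update && apt-get install -y "
        + " ".join(packages)
        + " && rm -rf /var/lib/apt/lists/*",
        f"WORKDIR {app_dir}",
        "COPY . .",  # copy the application source into the image
        f'CMD ["{entrypoint}"]',
    ]) + "\n"


text = render_dockerfile(BASE_IMAGE, PACKAGES)
print(text)
```

Chaining `apt-get update` and `apt-get install` in a single `RUN` keeps the package index and the installed packages in the same layer, a common convention in Dockerfiles.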

Docker Container

A Docker container is created from a Docker image and contains everything needed to run an application. It is a form of virtualization, but a container is very lightweight and can be thought of as a system process. Starting, stopping, or restarting a container takes only a few seconds. On a physical server that could host only a few traditional virtual machines, we can run dozens or even hundreds of Docker containers.

Possible states include created, running, paused, stopped (exited), and deleted.

For example, a container might contain the following environment:

- OS: Ubuntu 18.04

- Packages: git, curl, vim, nano, … pre-installed

- Application: the application to be executed, already installed
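The container states mentioned above can be modeled as a small state machine. This is a simplified sketch: the command names mirror the docker CLI subcommands `create`, `start`, `pause`, `unpause`, `stop`, and `rm`, and `exited` is the name Docker reports for a stopped container, but "deleted" simply stands for the container no longer existing, and transitions such as `restart` or forced removal are left out.

```python
# Simplified container lifecycle: (current state, command) -> next state.
TRANSITIONS = {
    ("created", "start"): "running",
    ("running", "pause"): "paused",
    ("paused", "unpause"): "running",
    ("running", "stop"): "exited",
    ("exited", "start"): "running",
    ("created", "rm"): "deleted",
    ("exited", "rm"): "deleted",
}


class ContainerLifecycle:
    def __init__(self):
        self.state = "created"  # state right after `docker create`

    def apply(self, command):
        key = (self.state, command)
        if key not in TRANSITIONS:
            raise ValueError(f"cannot {command!r} a container that is {self.state}")
        self.state = TRANSITIONS[key]
        return self.state


c = ContainerLifecycle()
for cmd in ["start", "pause", "unpause", "stop", "rm"]:
    print(cmd, "->", c.apply(cmd))
```

Calling `rm` on a running container raises an error in this model, just as `docker rm` refuses to remove a running container unless forced.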

Docker Network

Docker networking provides private virtual networks so that containers on the same host, or containers on multiple hosts (multi-host networking), can communicate with each other.
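On a user-defined network, containers can reach each other by container name, while containers that share no network cannot talk to each other directly. The toy model below captures only that reachability rule; the network and container names are hypothetical, and `connect` merely echoes the real `docker network connect` command. IP addressing, port publishing, and the overlay driver used for multi-host networking are not modeled.

```python
class Network:
    """Toy model of a Docker network: a named set of attached containers."""

    def __init__(self, name):
        self.name = name
        self.members = set()

    def connect(self, container):
        self.members.add(container)


def can_communicate(a, b, networks):
    """Two containers can talk directly if at least one network contains both."""
    return any(a in net.members and b in net.members for net in networks)


frontend, backend = Network("frontend"), Network("backend")
frontend.connect("web")
frontend.connect("proxy")
backend.connect("web")
backend.connect("db")

nets = [frontend, backend]
print(can_communicate("proxy", "web", nets))  # True: both on frontend
print(can_communicate("web", "db", nets))     # True: both on backend
print(can_communicate("proxy", "db", nets))   # False: no shared network
```

Attaching `web` to both networks while keeping `proxy` and `db` apart is a common way to limit which containers can reach a database.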

Docker Volume

A Docker volume is Docker’s mechanism for creating and using persistent data: it stores data independently of the container life cycle.

There are 3 common use cases for Docker volumes:

- Keeping data after a container is deleted.

- Sharing data between the physical server (host) and a Docker container.

- Sharing data between Docker containers.
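The toy model below illustrates use cases 1 and 3: a named volume keeps its data after the container that wrote it is removed, and several containers can mount the same volume. It loosely mirrors `docker run -v dbdata:/path` followed by `docker rm`, which by default leaves named volumes in place; the `Engine` class, the container names, and the `dbdata` volume are hypothetical. Sharing with the host (use case 2) is usually done with a bind mount and is not modeled here.

```python
class Engine:
    """Toy engine: volumes are stored separately from containers."""

    def __init__(self):
        self.volumes = {}     # volume name -> {file name: contents}
        self.containers = {}  # container name -> mounted volume name

    def run(self, name, volume):
        self.volumes.setdefault(volume, {})  # create the volume on first use
        self.containers[name] = volume
        return self.volumes[volume]          # the container's view of the mount

    def rm(self, name):
        del self.containers[name]            # the volume itself is NOT deleted


engine = Engine()
data = engine.run("db-1", volume="dbdata")
data["users.csv"] = "alice,bob"
engine.rm("db-1")                               # use case 1: data survives deletion

print(engine.run("db-2", volume="dbdata"))      # {'users.csv': 'alice,bob'}
shared = engine.run("backup", volume="dbdata")  # use case 3: another container mounts it
print(shared is engine.volumes["dbdata"])       # True
```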

Docker Compose

Docker Compose is a tool for defining and running multi-container Docker applications. With Compose, you configure your application’s services in a YAML file, then a single command creates and starts all of them from that configuration.

Usage is simple, with just three steps:

- Define the app’s environment in a Dockerfile.

- Define the services that make up the application in a docker-compose.yml file.

- Run docker-compose up to start and run the app.

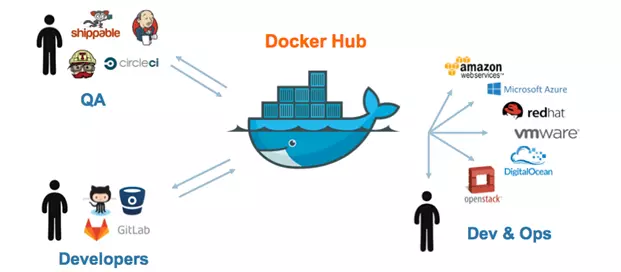
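Compose starts services in an order that respects their `depends_on` entries. The sketch below represents a hypothetical docker-compose.yml as a Python dict (services `web`, `db`, and `cache`; the image names are examples) and uses the standard library’s `graphlib.TopologicalSorter` (Python 3.9+) to compute one valid start order. Compose’s real scheduling is more involved (for example, it can wait on health-check conditions); this only shows the ordering idea.

```python
from graphlib import TopologicalSorter

# Python equivalent of a hypothetical docker-compose.yml:
# services:
#   web:   {build: ., ports: ["8000:8000"], depends_on: [db, cache]}
#   db:    {image: postgres:16}
#   cache: {image: redis:7}
compose = {
    "services": {
        "web": {"build": ".", "ports": ["8000:8000"], "depends_on": ["db", "cache"]},
        "db": {"image": "postgres:16"},
        "cache": {"image": "redis:7"},
    }
}


def start_order(config):
    """Return service names so that every service comes after its dependencies."""
    graph = {name: set(svc.get("depends_on", []))
             for name, svc in config["services"].items()}
    return list(TopologicalSorter(graph).static_order())


order = start_order(compose)
print(order)  # db and cache (in some order) come before web
```

`db` and `cache` have no dependencies on each other, so either may start first; only `web` is constrained to start last.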

Docker Hub

If you are a developer, you are probably familiar with GitHub, where you upload your code. Simply put, Docker Hub is like GitHub, but for Docker images. It hosts images published by users as well as official images from major vendors such as Google, Oracle, Microsoft, … Docker Hub also lets you manage images with GitHub-like commands such as push and pull, so you can easily manage your own images.
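When you pull an image by a short name, Docker expands it using Docker Hub defaults: the `docker.io` registry, the `library` namespace for official images, and the `latest` tag. The function below is a simplified sketch of that expansion (it ignores digests such as `@sha256:...` and other edge cases); `myuser/myapp` and `ghcr.io/org/tool` are hypothetical repository names.

```python
DEFAULT_REGISTRY = "docker.io"
DEFAULT_TAG = "latest"


def normalize(ref):
    """Expand a short image reference to registry/namespace/name:tag (simplified)."""
    first, sep, rest = ref.partition("/")
    # A first component containing a dot or colon (or "localhost") names a registry.
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, path = first, rest
    else:
        registry, path = DEFAULT_REGISTRY, ref
    # Official Docker Hub images live under the "library" namespace.
    if registry == DEFAULT_REGISTRY and "/" not in path:
        path = "library/" + path
    # A colon after the last slash separates the tag; otherwise use the default tag.
    name, colon, tag = path.rpartition(":")
    if not colon or "/" in tag:
        name, tag = path, DEFAULT_TAG
    return f"{registry}/{name}:{tag}"


print(normalize("ubuntu"))                # docker.io/library/ubuntu:latest
print(normalize("ubuntu:18.04"))          # docker.io/library/ubuntu:18.04
print(normalize("myuser/myapp:1.0"))      # docker.io/myuser/myapp:1.0
print(normalize("localhost:5000/myapp"))  # localhost:5000/myapp:latest
print(normalize("ghcr.io/org/tool:v2"))   # ghcr.io/org/tool:v2
```

This is why `docker pull ubuntu` fetches the official Ubuntu image from Docker Hub without any registry being named.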

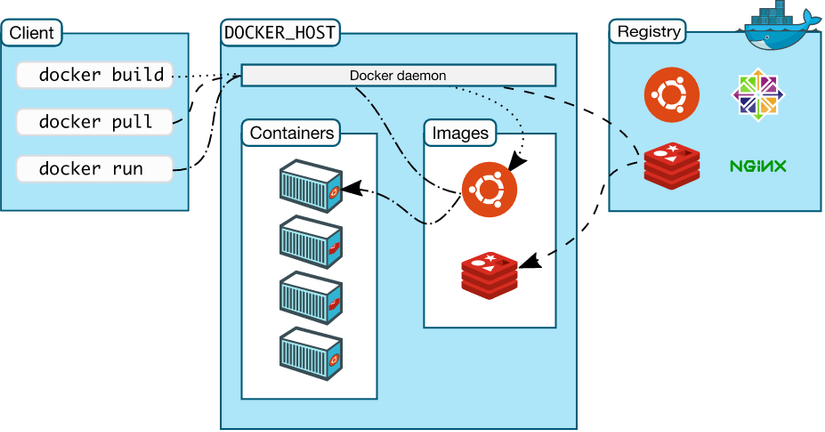

Docker architecture

Docker is a client-server application and comes in 2 main editions:

- Docker Community Edition (CE): the free edition, built largely on open source components.

- Docker Enterprise Edition (EE): the enterprise edition, which adds support from the vendor plus extra management and security features.

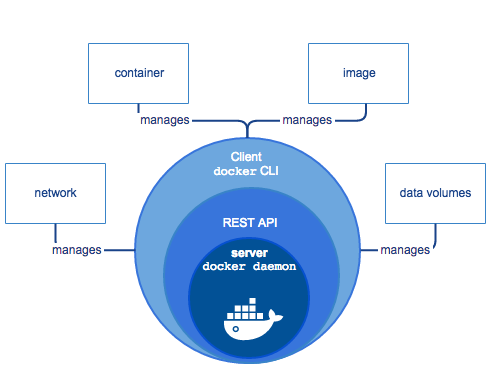

Docker Engine consists of the following components:

- Docker Daemon: runs on the host and acts as the server, receiving RESTful requests from the Docker client and executing them. It is a lightweight runtime that builds, runs, and manages containers and other related components.

The Docker daemon manages four main kinds of objects: images, containers, networks, and volumes.

- Docker Client (CLI): provides the command line interface for the user and sends the user’s requests to the Docker daemon.

The diagram illustrates common Docker client commands and the relationships between images, containers, networks, and volumes.

- Docker Registry: where Docker images are stored. Docker Hub is a public registry that anyone can use, and Docker looks for images on Docker Hub by default. You can also run your own registry. Registries can be either public or private.
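Because the client and daemon talk over a REST API, any HTTP client can play the client’s role. The sketch below, using only the standard library, sends requests over the Unix socket the daemon listens on by default on Linux (`/var/run/docker.sock`); `GET /version` and `GET /containers/json` are Docker Engine API endpoints. It assumes a running daemon and permission to access the socket (typically root or membership in the `docker` group); `UnixHTTPConnection` and `docker_get` are hypothetical helper names.

```python
import http.client
import json
import socket


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTPConnection that talks over a Unix domain socket instead of TCP."""

    def __init__(self, socket_path, timeout=5):
        super().__init__("localhost", timeout=timeout)  # host only fills the Host header
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def docker_get(path, socket_path="/var/run/docker.sock"):
    """Send GET <path> to the Docker daemon and return (status, decoded JSON)."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        resp = conn.getresponse()
        return resp.status, json.loads(resp.read())
    finally:
        conn.close()
```

For example, `docker_get("/version")` returns the HTTP status and a dict describing the daemon’s version, and `docker_get("/containers/json")` lists running containers, roughly the information `docker ps` displays. The docker CLI does essentially the same thing, with more options and error handling.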
