Have you ever wondered how many hidden layers a neural network needs? You can't just add as many as you want, because that leads to problems like vanishing gradients or overfitting, and if you add too few, the model will not reach its best performance. So how do you decide how many hidden layers to add? In this article, I will introduce a method that may seem strange at first but is actually quite familiar.

This method is also what made ResNet so popular and talked about when it appeared in 2015.

Residual Net

Residual Net is the method I will talk about in this article. Before explaining how Residual Net works, here is an example to help you picture it:

Suppose you are surfing the internet and see a pair of shoes that you really, really like. The shoes cost only 10 dong and the shop is close to your house, so this is definitely what God wants you to buy. You bring exactly 10 dong to buy the shoes, but when you get there you find that the price has gone up to 12 dong, so you don't have enough money. You like these shoes so much, though, that the simplest solution is to 'GO BACK' home and 'GET MORE' money.

Residual Net works in a similar way, but in reverse: instead of going back to get more, it goes back and takes away what is not needed.

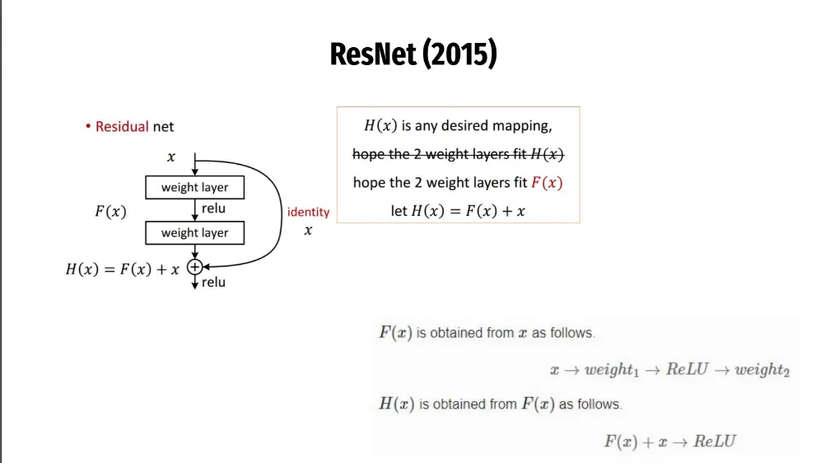

As you can see in the picture, we want to add 2 more hidden layers. There are 2 possible cases (as I mentioned at the beginning of the article):

- The model is not yet optimized, so adding 2 layers will help increase performance

- The model is already optimized, and adding layers will lead to vanishing gradients or overfitting

To handle both cases, ResNet introduces an Identity connection (also known as a shortcut). The idea is that we simply add extra hidden layers in case the model needs them, and if they turn out to be surplus, the model can go back and take them out.

In the picture you can see that there are 2 paths before the ReLU:

- Go straight through each hidden layer

- Follow the curve, the Identity (skip the 2 hidden layers), and go straight to the ReLU
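The two paths can be sketched in a few lines of plain Python. This is only a minimal illustration, not the actual ResNet implementation: the helper names `relu`, `linear` and `residual_block`, the weight matrices `W1` and `W2`, and the use of small fully connected layers without biases are all assumptions made for the example. In the standard formulation the outputs of the two paths are added together just before the final ReLU.

```python
def relu(v):
    # Element-wise ReLU on a list of floats
    return [max(0.0, a) for a in v]

def linear(W, v):
    # Matrix-vector product: one output value per row of W (no bias)
    return [sum(w * a for w, a in zip(row, v)) for row in W]

def residual_block(x, W1, W2):
    # Path 1: go straight through the two hidden layers
    fx = linear(W2, relu(linear(W1, x)))
    # Path 2: the Identity (shortcut) carries x around both layers.
    # The two paths are summed, then passed through the final ReLU.
    return relu([f + a for f, a in zip(fx, x)])

x = [1.0, -2.0]
identity = [[1.0, 0.0], [0.0, 1.0]]  # hypothetical weights for the demo
print(residual_block(x, identity, identity))  # [2.0, 0.0]
```

Here the layer path produces `[1.0, 0.0]`, the shortcut contributes `[1.0, -2.0]`, and the final ReLU clips the negative sum to zero.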

Deciding which path matters more is the computer's job (via backpropagation). Strictly speaking, the outputs of the two paths are added together before the ReLU, and training decides how much the hidden-layer path contributes. Just by creating an Identity (put simply: if the model is not yet optimized, go straight; otherwise, follow the curve), we have solved the 2 problems that give us a headache every time we build a model.

In short: we do not need to specify exactly how many hidden layers are enough; the computer works that out during training. 'Just add layers; if they don't make it better, at least they won't make it worse' is how the Identity (shortcut) works.
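One way to see why the backward pass copes with this is to write the block as a formula. The symbols here are my own notation, not from the picture: $x$ is the input to the block, $F(x)$ is the output of the two hidden layers, $y$ is the output of the block, and $I$ is the identity matrix.

```latex
y = \mathrm{ReLU}\big(F(x) + x\big),
\qquad
\frac{\partial y}{\partial x}
  = \mathrm{ReLU}'\big(F(x) + x\big)\left(\frac{\partial F}{\partial x} + I\right)
```

The $I$ term comes from the Identity path. Even when $\partial F / \partial x$ becomes very small, the gradient still has a direct route back through the shortcut wherever the final ReLU is active, which is why the vanishing gradient problem is much less severe.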
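The 'don't make it worse' idea can be checked with a toy experiment. The sketch below is an illustration under simplifying assumptions, not a real training run: the helpers `plain_block`, `residual_block` and `stack` are hypothetical names, and the weights are simply set to zero by hand to mimic layers that have learned nothing useful.

```python
def relu(v):
    return [max(0.0, a) for a in v]

def linear(W, v):
    # Matrix-vector product without bias
    return [sum(w * a for w, a in zip(row, v)) for row in W]

def plain_block(x, W1, W2):
    # Two hidden layers with no shortcut
    return relu(linear(W2, relu(linear(W1, x))))

def residual_block(x, W1, W2):
    # Same two layers, plus the Identity added before the final ReLU
    fx = linear(W2, relu(linear(W1, x)))
    return relu([f + a for f, a in zip(fx, x)])

def stack(block, x, n_blocks, W1, W2):
    # Apply the same block n_blocks times in a row
    for _ in range(n_blocks):
        x = block(x, W1, W2)
    return x

x = [0.5, 1.5]                      # non-negative, as if it came out of a ReLU
zeros = [[0.0, 0.0], [0.0, 0.0]]    # layers that contribute nothing

print(stack(plain_block, x, 10, zeros, zeros))     # [0.0, 0.0]
print(stack(residual_block, x, 10, zeros, zeros))  # [0.5, 1.5]
```

Without the shortcut, ten useless blocks wipe out the signal. With the shortcut, the same ten blocks pass the input through unchanged, so the extra layers cost nothing in this toy setting.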