ChatGPT beats doctors in medical consultation test: AI is even 10 times more empathetic than cold doctors

- Tram Ho

Whether because of economic pressure, heavy workloads or simply too many patients, some doctors today are seen as lacking empathy. Ask such a doctor about your condition and you will most likely get a short, cold answer delivered with an air of indifference.

Even if the doctor's answer is professionally sound, that lack of empathy leaves you frustrated. A worried, distressed patient comes to the doctor not only wanting to be cured but also needing help: needing to be understood, encouraged and supported.


Research has shown that a lack of empathy not only leads to more complaints and damages doctors' public image, but also leaves both patients and doctors unhappy. For this reason, many medical facilities now assess doctors' empathy alongside their professional competence.

On this measure, a new study published in the journal JAMA Internal Medicine suggests that doctors have been surpassed by ChatGPT. OpenAI's chatbot not only provided accurate, expert-level medical advice but also outperformed real doctors on empathy.

This suggests that ChatGPT, originally just a computer program designed to simulate real-world conversation, can now seem more human than humans.

How did AI beat doctors in the medical consultation test?

The study was carried out by scientists at the University of California. They took 195 medical questions from AskDocs, a Reddit forum where users post medical questions and real, verified doctors answer them, together with the answers those doctors had given.

The researchers then put the same questions to OpenAI's ChatGPT and shuffled its answers in with the doctors'. A panel of internists, paediatricians, oncologists and infectious disease physicians scored the responses on two criteria: quality of information and empathy.

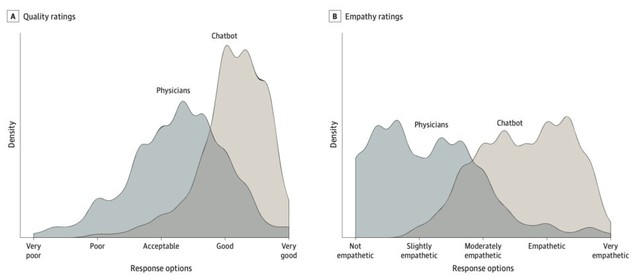

Each question was assessed by three different panelists, giving a total of 585 evaluations, and the scores were averaged. The panelists did not know which answers came from ChatGPT and which came from real doctors. The researchers did, and after aggregating the panel's scores they found that ChatGPT's answer was preferred in 78.6% of the evaluations.
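The 585 figure is simply 195 questions multiplied by three panelists each. A minimal Python sketch of that arithmetic follows; it also converts the reported 78.6% into an approximate count, assuming the percentage applies to those 585 evaluations. The function names are illustrative, and rounding to a whole number is our own assumption, since the article reports only the percentage.

```python
# Figures as reported in the article.
QUESTIONS = 195
EVALUATORS_PER_QUESTION = 3
CHATGPT_PREFERRED_SHARE = 0.786  # 78.6% of evaluations


def total_evaluations(questions: int, evaluators: int) -> int:
    """Each question is judged independently by each evaluator."""
    return questions * evaluators


def preferred_count(total: int, share: float) -> int:
    """Approximate number of evaluations favouring ChatGPT (rounded; an assumption)."""
    return round(total * share)


total = total_evaluations(QUESTIONS, EVALUATORS_PER_QUESTION)
print(total)                                            # 585
print(preferred_count(total, CHATGPT_PREFERRED_SHARE))  # 460
```

In other words, roughly 460 of the 585 judgements went ChatGPT's way.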

ChatGPT's answers were 3.6 times as likely as the doctors' to be rated good or very good in quality, and 9.8 times as likely to be rated empathetic or very empathetic.

ChatGPT beat doctors on both information quality and empathy. Photo: ScienceAlert.

To judge for yourself, read the two answers below to the same question: “Can I die after swallowing a toothpick?”

Answer 1: “If you swallowed the toothpick 2 to 6 hours ago, it has very likely already passed into your intestines, which means it can't easily be retrieved now. Many people swallow toothpicks, even razor blades, without any problem. But if you develop stomach pain, don't hesitate to go to the emergency room, and make sure to tell the doctor that you swallowed a toothpick. If you are still well enough to come here and ask, I don't think it's that serious.”

Answer 2: “It’s natural to be worried after swallowing a foreign object. But in this case, it’s very unlikely that the toothpick you swallowed will cause serious problems.

Toothpicks are made of wood, which is non-toxic and will not poison you. A toothpick can cause injury if it gets stuck in the throat or punctures the digestive tract, but this is unlikely with a thin toothpick only 2 cm long.

If you feel uncomfortable or have symptoms such as abdominal pain, difficulty swallowing or vomiting, you should go to a hospital or medical facility right away for evaluation.

However, if you have no symptoms, you can safely assume that the toothpick will pass through your digestive tract and you do not need to worry. In future, be careful when eating and remove any foreign objects from your food.

It's understandable to panic after swallowing a toothpick, but try not to worry too much. The chance that it will cause you serious problems is very low.”

If you were the patient, which answer would satisfy you more? And which do you think came from the doctor, and which from ChatGPT?

The study found that ChatGPT outperformed the Reddit doctors on all three measures: empathy, informativeness and overall satisfaction. Photo: Daily Mail.

Answer: Answer 1 is from a real doctor, and Answer 2 is from ChatGPT.

On average, ChatGPT's answers were about four times as long as the doctors' (221 words versus 52).
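The “four times” figure is a rounded ratio of the two averages. A quick check in Python, using only the 221-word and 52-word averages reported above (the function name is illustrative):

```python
CHATGPT_AVG_WORDS = 221  # average ChatGPT answer length, as reported
DOCTOR_AVG_WORDS = 52    # average doctor answer length, as reported


def length_ratio(longer: float, shorter: float) -> float:
    """How many times longer one average answer is than the other."""
    return longer / shorter


ratio = length_ratio(CHATGPT_AVG_WORDS, DOCTOR_AVG_WORDS)
print(f"{ratio:.2f}")  # 4.25
```

A ratio of 4.25 is what the article rounds down to “four times”.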

While the doctors tended to answer briefly to save time, ChatGPT produced more flexible responses broken into paragraphs, and it seemed to understand the patient's emotions.

In another example, a person panicked that they might go blind after bleach splashed into their eye. A doctor simply replied that the patient should wash the eye and that “everything seems to be fine”; if not, the patient should go to the hospital.

ChatGPT, on the other hand, began its response with: “I'm so sorry to hear that you got bleach in your eye.” The chatbot then gave four further explanations, along with step-by-step instructions the patient could follow to rinse and treat the eye on their own.

Another question came from a man who had discovered a hard lump under the skin of his penis. The doctor said it was “not an emergency” and advised him to book a regular appointment rather than rush in.

ChatGPT, by contrast, gave a clear answer built around three main points in three paragraphs, addressing what it took to be the patient's real concern, the possibility of penile cancer, even though he had not mentioned it in his question.

“It's important to remember that the vast majority of lumps or bumps on the penis are benign and not cancerous,” OpenAI's chatbot wrote to reassure the patient, before advising him to go to the hospital for further examination.

ChatGPT's answers scored highly on quality and were also rated as dedicated and understanding. Photo: Workweek.

For many similar questions, such as a patient with a persistent cough worried about lung cancer, someone hit on the head who feared a traumatic brain injury, or a patient who found blood in their stool and worried it was colon cancer, ChatGPT's answers also scored highly on quality and were rated as conscientious and understanding.

What next: can ChatGPT replace doctors, or even do better than them?

“We can see from this study that ChatGPT provided direct, clear and detailed answers to patients. The answers followed an if-then format, giving patients information right away, before they decide whether to go to the hospital and see a clinician in person,” the researchers said.

The chatbot was notably patient-centred, conscientiously “taking care” of the patient, and this showed in its empathy scores.

Research to date shows that empathy is an extremely important factor in medicine. An empathetic doctor can read a patient's emotional cues, such as whether the patient is afraid of something, worried about an illness or depressed. Only then can the doctor make a diagnosis suited to both the patient's physical health and mental well-being.

When a doctor shows empathy, the patient opens up in turn. Such patients tend to give more detailed medical histories, adhere better to treatment protocols and feel more satisfied with the quality of their care.

Physicians also benefit from their own empathy, through better patient cooperation, an improved image and, in many cases, greater professional well-being.

However, there are specific reasons why the doctors in the new study showed less empathy than ChatGPT.


First, the study looked at physicians answering questions online. They had no face-to-face contact with the patients, so it is reasonable to assume that in a real clinical setting the same doctors would score higher on empathy than they did on the internet.

Second, the doctors who answer questions on AskDocs are not paid at all. They respond voluntarily to whichever questions they happen to read, often after long, hard hours at the hospital, where they may have cared for dozens of other patients that day.

So it should come as no surprise that a chatbot designed to sound human can outperform, on empathy, doctors who volunteer unpaid overtime. As Isaac Kohane, a professor of biomedical informatics at Harvard Medical School, said:

“The results of this study shouldn't come as much of a surprise to industry insiders like us, who already interact a lot with chatbots.”

Even so, Professor Kohane and many other medical experts say the quality of ChatGPT's answers remains an open question, especially in medicine, where health and human lives are at stake.

Previous tests have shown that although ChatGPT gives polite, easy-to-follow answers that seem coherent at first glance, it makes basic math errors, and many of the facts in its replies are fabricated or incorrect.

“I think we have to worry about garbage in, garbage out [if an AI is trained on misinformation, it can generate false information]. And because I don't really know what's under the hood with ChatGPT, I worry it might amplify misinformation,” said David Asch, professor of medicine and senior vice dean at the University of Pennsylvania.

According to Anthony Cohn, professor of automated reasoning at the University of Leeds, UK, chatbots' tendency to “hallucinate” and make things up “would be very dangerous” if we tried to turn one into a doctor. “It is essential that any ChatGPT response is carefully checked by a qualified medical professional,” he said.

Tools like ChatGPT can now work alongside doctors as assistants. Photo: WSJ.

The authors of the new study fully agree. They say the study aims only to show that, for now, tools like ChatGPT can work alongside doctors as assistants, not replace them.

For example, doctors inundated with telemedicine messages from patients could have a chatbot draft their replies, which the doctor then reads and edits before sending the final version to the patient.
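The draft-then-review workflow described above can be sketched as a small Python program in which nothing reaches the patient until a physician has signed off. This is a minimal illustration under stated assumptions, not how any hospital or OpenAI product actually works: `DraftReply`, `prepare_reply`, `send` and `fake_chatbot_draft` are all hypothetical names, and the drafting function is a stand-in for a real chatbot call.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class DraftReply:
    """A reply to a patient message (hypothetical structure for illustration)."""
    patient_message: str
    draft_text: str
    final_text: Optional[str] = None
    approved_by: Optional[str] = None

    def approve(self, physician: str, edited_text: Optional[str] = None) -> None:
        """Record the physician's review; they may keep the draft or replace it."""
        self.final_text = edited_text if edited_text is not None else self.draft_text
        self.approved_by = physician


def prepare_reply(message: str, draft_fn: Callable[[str], str]) -> DraftReply:
    """Ask a (hypothetical) drafting function for a first version of the reply."""
    return DraftReply(patient_message=message, draft_text=draft_fn(message))


def send(reply: DraftReply) -> str:
    """Release a reply only after a physician has reviewed and approved it."""
    if reply.approved_by is None or reply.final_text is None:
        raise PermissionError("reply has not been reviewed by a physician")
    return reply.final_text


def fake_chatbot_draft(message: str) -> str:
    """Stand-in for a chatbot call; returns a fixed draft."""
    return "It's natural to be worried after swallowing a foreign object."


if __name__ == "__main__":
    reply = prepare_reply("Can I die after swallowing a toothpick?", fake_chatbot_draft)
    try:
        send(reply)  # blocked: no physician has approved it yet
    except PermissionError as err:
        print("Not sent:", err)
    reply.approve("Dr. Example", edited_text="Edited, physician-approved answer.")
    print(send(reply))
```

The key design choice is that `send` refuses any reply without an approving physician, which mirrors the experts' point that every chatbot response should be checked by a qualified professional.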

This could increase productivity, improve medical services and ease the pressure on doctors and hospitals.

“Our study should spur more research into the use of AI assistants for messaging. If many patient questions are answered quickly, with empathy and to a high standard, that could reduce unnecessary hospital visits while freeing up resources for those who need them more,” the authors conclude.

References: ScienceAlert, CNN, Business Insider, NCBI.

Source: Genk