Original article: https://www.tranthanhdeveloper.com/2020/12/in-memory-cache-la-gi.html

As we all know, a computer usually has two main places to store data: the hard drive and RAM. Reading and writing data in RAM is dramatically faster than on a drive. Depending on its type, RAM offers read and write speeds of tens of gigabytes per second, while a spinning hard disk typically manages roughly 50 to 250 MB/s and an SSD from about 500 MB/s to a few GB/s. These numbers make the gap between the two storage areas obvious. Slow disk reads are not the only performance problem, though: programs that perform a lot of CPU-heavy computation also suffer.

Both problems suggest the same solution: temporarily store data in RAM to improve application performance. This temporary storage in RAM is called an in-memory cache.
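As a minimal sketch of this idea in Java (the class and method names are hypothetical, chosen only for illustration), a `ConcurrentHashMap` can keep results in RAM so that an expensive function runs only once per key. Note that this cache is unbounded, which is exactly the limitation discussed next.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

// Minimal in-memory cache that memoizes the results of an expensive function.
// The first call for a key computes (or loads) the value; later calls read it from RAM.
class MemoCache<K, V> {
    private final Map<K, V> store = new ConcurrentHashMap<>();
    private final Function<K, V> loader; // the slow path: disk read, DB query or heavy computation

    MemoCache(Function<K, V> loader) {
        this.loader = loader;
    }

    V get(K key) {
        // computeIfAbsent runs the loader only when the key is not cached yet
        return store.computeIfAbsent(key, loader);
    }

    int size() {
        return store.size();
    }
}

class MemoCacheDemo {
    // Hypothetical "expensive" computation used only for illustration.
    static long slowSquare(int n) {
        try {
            Thread.sleep(200); // simulate slow I/O or heavy CPU work
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return (long) n * n;
    }

    public static void main(String[] args) {
        MemoCache<Integer, Long> cache = new MemoCache<>(MemoCacheDemo::slowSquare);
        long t0 = System.nanoTime();
        cache.get(12); // not cached yet: runs slowSquare (about 200 ms)
        long t1 = System.nanoTime();
        cache.get(12); // cached: read straight from the map
        long t2 = System.nanoTime();
        System.out.printf("first: %d ms, second: %d ms%n",
                (t1 - t0) / 1_000_000, (t2 - t1) / 1_000_000);
    }
}
```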

Limitations of in-memory caches

Despite its impressive read and write speeds, RAM is usually limited in size, so we have to decide carefully what should be cached, and when memory is nearly full we have to evict some of the data stored in RAM. Another problem is that data in RAM is lost on shutdown or power failure. We should therefore prioritize caching data that is already persisted on the hard drive but slow to retrieve, or data that can be recomputed.

Avoid using an in-memory cache as the database for newly created data. If you do choose to keep newly created data in RAM temporarily, write it to the hard drive as soon as possible. If you plan to hold it in RAM for a few seconds before actually saving it to disk, you have to accept that you could lose user data and break the data integrity of the application.
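One way to follow the "write to the hard drive as soon as possible" advice is a write-through cache. The sketch below assumes a hypothetical `BackingStore` interface standing in for durable storage: every `put` persists the value first and only then caches it, so losing RAM loses only the cached copy. The demo uses a `HashMap` as a fake store purely so the example runs on its own.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Hypothetical interface standing in for durable storage (a file, a database, ...).
interface BackingStore<K, V> {
    void save(K key, V value); // assumed to be durable once it returns
    Optional<V> load(K key);
}

// Write-through cache: data is persisted BEFORE it is cached, so a crash or power
// failure can only lose the in-memory copy, never the data itself.
class WriteThroughCache<K, V> {
    private final Map<K, V> cache = new HashMap<>();
    private final BackingStore<K, V> store;

    WriteThroughCache(BackingStore<K, V> store) {
        this.store = store;
    }

    synchronized void put(K key, V value) {
        store.save(key, value); // 1. write to durable storage first
        cache.put(key, value);  // 2. then keep a fast copy in RAM
    }

    synchronized Optional<V> get(K key) {
        V cached = cache.get(key);
        if (cached != null) {
            return Optional.of(cached);           // served from RAM
        }
        Optional<V> loaded = store.load(key);     // slow path: read from storage
        loaded.ifPresent(v -> cache.put(key, v)); // keep it in RAM for next time
        return loaded;
    }

    // Simulates losing RAM (restart, eviction): safe, because the store still has the data.
    synchronized void clear() {
        cache.clear();
    }
}

class WriteThroughDemo {
    public static void main(String[] args) {
        // Fake "disk" for the demo only; a real store would write to a file or database.
        Map<String, String> disk = new HashMap<>();
        BackingStore<String, String> store = new BackingStore<String, String>() {
            public void save(String key, String value) { disk.put(key, value); }
            public Optional<String> load(String key) { return Optional.ofNullable(disk.get(key)); }
        };
        WriteThroughCache<String, String> cache = new WriteThroughCache<>(store);
        cache.put("user:1", "Alice");
        cache.clear();                           // pretend the process restarted
        System.out.println(cache.get("user:1")); // prints Optional[Alice]
    }
}
```

The cost of this design is that every write pays the latency of the slow storage; in exchange, the cache never holds data that exists nowhere else.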

Some important concepts when caching data:

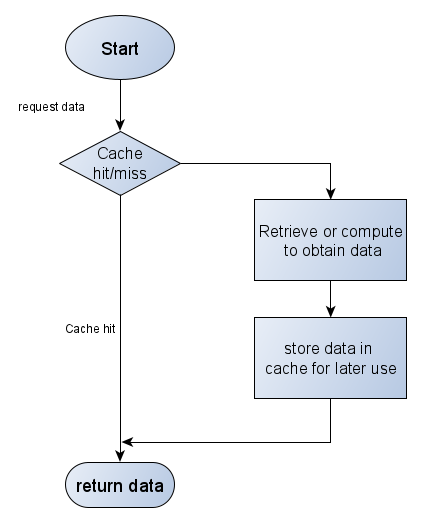

When considering adding a cache to an application, we should understand and estimate its cache hit and cache miss rates, as well as the cache replacement policy of each cache provider, so that we get the most out of the cache while keeping its memory footprint small.

What is a cache hit?

A cache hit occurs when the requested data is already in the cache. A high cache hit rate (the fraction of requests served from the cache) indicates that the cache is well managed and that the caching strategy suits the application's access pattern.

What is a cache miss?

The opposite of a cache hit is a cache miss: the requested data is not in the cache and has to be fetched or recomputed from the slower source. A high cache miss rate puts more load on the system and is a sign that the caching strategy should be re-evaluated.
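To make these rates measurable, here is a small sketch (hypothetical class names, not thread-safe, and assuming the loader never returns `null`) that wraps a `HashMap`, counts hits and misses, and reports the hit ratio hits / (hits + misses).

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

// Wraps a map-based cache and counts hits and misses so the hit ratio can be measured.
class CountingCache<K, V> {
    private final Map<K, V> map = new HashMap<>();
    private final Function<K, V> loader; // slow source used on a miss
    private long hits;
    private long misses;

    CountingCache(Function<K, V> loader) {
        this.loader = loader;
    }

    V get(K key) {
        V value = map.get(key);
        if (value != null) {
            hits++;             // cache hit: data already in memory
            return value;
        }
        misses++;               // cache miss: fall back to the slow source
        value = loader.apply(key);
        map.put(key, value);
        return value;
    }

    long hits() { return hits; }

    long misses() { return misses; }

    // hit ratio = hits / (hits + misses); 0 when nothing has been requested yet
    double hitRatio() {
        long total = hits + misses;
        return total == 0 ? 0.0 : (double) hits / total;
    }
}

class CountingCacheDemo {
    public static void main(String[] args) {
        CountingCache<Integer, String> cache = new CountingCache<>(id -> "user-" + id);
        int[] requests = {1, 2, 1, 1, 3, 2};
        for (int id : requests) {
            cache.get(id); // 1 miss, 2 miss, 1 hit, 1 hit, 3 miss, 2 hit
        }
        // prints "3 hits, 3 misses, ratio 0.5"
        System.out.println(cache.hits() + " hits, " + cache.misses() + " misses, ratio " + cache.hitRatio());
    }
}
```

Libraries such as Caffeine can collect comparable statistics themselves (see `recordStats()` in its documentation), so a hand-written counter like this is mainly useful for understanding the metric.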

What is a cache replacement policy?

A cache replacement policy (also called an eviction policy) is the algorithm that decides which existing entries to remove to make room for new ones. This article does not explain them in detail, but you can refer to this Wikipedia page: https://en.wikipedia.org/wiki/Cache_replacement_policies

Here are some popular policies:

- Bélády’s algorithm

- First in first out (FIFO)

- Last in first out (LIFO) or First in last out (FILO)

- Least recently used (LRU)

- Time aware least recently used (TLRU)

- Most recently used (MRU)

- Pseudo-LRU (PLRU)

- Random replacement (RR)

- Segmented LRU (SLRU)

- Least-frequently used (LFU)

- Least frequent recently used (LFRU)

- LFU with dynamic aging (LFUDA)

- Low inter-reference recency set (LIRS)

- CLOCK-Pro

- Adaptive replacement cache (ARC)

- AdaptiveClimb (AC)

- Clock with adaptive replacement (CAR)

- Multi queue (MQ)

- Pannier: Container-based caching algorithm for compound objects
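Two of the policies above, LRU and FIFO, can be sketched with the JDK's `LinkedHashMap`: its three-argument constructor selects access order or insertion order, and overriding `removeEldestEntry` evicts the eldest entry once a capacity (a hypothetical parameter here) is exceeded. This is an illustration rather than a production cache: it is not thread-safe, and in access-order mode even `get` changes the internal order.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Bounded cache on top of LinkedHashMap.
//   accessOrder = true  -> eldest entry is the least recently accessed -> LRU eviction
//   accessOrder = false -> eldest entry is the first inserted          -> FIFO eviction
class BoundedCache<K, V> extends LinkedHashMap<K, V> {
    private final int capacity;

    BoundedCache(int capacity, boolean accessOrder) {
        super(16, 0.75f, accessOrder);
        this.capacity = capacity;
    }

    // Called by put()/putAll() after an insertion; returning true removes the eldest entry.
    @Override
    protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
        return size() > capacity;
    }
}

class BoundedCacheDemo {
    public static void main(String[] args) {
        BoundedCache<String, Integer> lru = new BoundedCache<>(2, true);
        BoundedCache<String, Integer> fifo = new BoundedCache<>(2, false);
        for (BoundedCache<String, Integer> c : List.of(lru, fifo)) {
            c.put("a", 1);
            c.put("b", 2);
            c.get("a");    // touch "a": only changes the order in access-order (LRU) mode
            c.put("c", 3); // over capacity: the eldest entry is evicted
        }
        System.out.println("LRU keeps  " + lru.keySet());  // prints LRU keeps  [a, c]
        System.out.println("FIFO keeps " + fifo.keySet()); // prints FIFO keeps [b, c]
    }
}
```

The same access sequence produces different survivors: LRU keeps the recently read `"a"`, while FIFO evicts it simply because it was inserted first.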

Some popular cache providers and libraries used in Java and other languages:

- EhCache

- Caffeine Cache

- Memcached

- Redis

- Hazelcast

- Couchbase

- Infinispan

- …