Hello guys, it's me again. Time for a change of pace today: instead of continuing the FinTech series, I will introduce a topic that is already very familiar to most engineers.

- The topic is load balancing (sometimes written "loadbalance").

- So what is load balancing, and why do we need it?

- The story goes like this: one fine day, you are handed a server that is in pretty bad shape, both in its configuration and in the number of requests hitting it. The boss asks you to optimize resource usage, maximize throughput, reduce latency, and make the setup fault tolerant. That is how the idea of load balancing came into being.

- You can think of load balancing as putting an additional gateway in front that receives requests from clients and distributes them, according to some algorithm, to the backend servers behind it.

Load balancing was born to solve two big problems:

- First: make sure the system runs stably and is always available (when one node in the system is out of service, the remaining nodes keep running).

- Second: minimize the number of requests pushed to any single server.

Today there are many technologies that help with load balancing, and in this article I will write about Nginx.

First, what is Nginx?

NGINX, pronounced "engine-x," is a well-known open source web server. It was originally used to serve HTTP content. Today it is also used as a reverse proxy, an HTTP load balancer, and a mail proxy for IMAP, POP3, and SMTP.

NGINX was officially published in October 2004. Its creator, Igor Sysoev, started the project in 2002 to solve the C10k problem, the challenge of handling 10 thousand connections at the same time. Today, many web servers have to handle even more connections than that. NGINX uses an asynchronous, event-driven architecture, which is what makes it reliable, fast, and scalable.

Because of these capabilities, and in particular its ability to handle thousands of connections at the same time, many high-traffic websites use NGINX. Some of the tech giants that use it are Google, Netflix, Adobe, Cloudflare, WordPress, and more.

Some commonly used load balancing algorithms are:

1. Round Robin

This is a cyclic algorithm: the servers are treated as equals and arranged in a rotation. Requests are sent to the servers in turn, in that fixed order.

For example:

- Configure a cluster of 3 servers: A, B, C.

- The first service request will be sent to server A.

- A second service request will be sent to server B.

- A third service request will be sent to server C.

- A fourth service request will be sent to server A again, and so on.
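To make the rotation concrete, here is a minimal Python sketch of the idea. The function name `round_robin` and the server labels are just for illustration; this is not how Nginx implements it internally.

```python
from itertools import cycle, islice


def round_robin(servers):
    """Return an endless iterator that walks the servers in a fixed rotation."""
    return cycle(servers)


# Hypothetical cluster from the example above.
order = list(islice(round_robin(["A", "B", "C"]), 4))
print(order)  # ['A', 'B', 'C', 'A']
```

The fourth request wraps around to server A, exactly as in the list above.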

2. Weighted Round Robin (WRR) algorithm

Similar to Round Robin, but WRR also takes the capacity of each destination server into account. Each server is assigned an integer weight (the default value is 1). A server that can handle twice as much load as another server is given twice the weight and receives twice as many requests from the load balancer.

For example:

- Configure a cluster of 3 servers: A, B, C, where machine A has more processing capacity than machine B, and machine B more than machine C. We set the weights of servers A, B, C to 4, 3, 2, respectively.

- Requests will be dispatched in the order AABABCABC.

- The first service request will be sent to server A.

- A second service request will be sent to server A.

- A third service request will be sent to server B.

- A fourth service request will be sent to server A again, and so on.
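One classic interleaved scheme that reproduces the order AABABCABC for weights 4, 3, 2 can be sketched in Python as below. This is only an illustration under the assumption that all weights are positive integers. The function name is made up, and Nginx's own weighted round robin may interleave the servers in a different order while keeping the same 4:3:2 ratio.

```python
from functools import reduce
from itertools import islice
from math import gcd


def weighted_round_robin(weights):
    """Yield server names forever; each appears in proportion to its weight.

    Assumes every weight is a positive integer.
    """
    names = list(weights)
    step = reduce(gcd, weights.values())   # how far the threshold drops per pass
    max_weight = max(weights.values())
    threshold = 0                          # a server is picked if weight >= threshold
    index = -1
    while True:
        index = (index + 1) % len(names)
        if index == 0:
            # Starting a new pass over the servers: lower the threshold,
            # and reset it to the maximum once it reaches zero.
            threshold -= step
            if threshold <= 0:
                threshold = max_weight
        if weights[names[index]] >= threshold:
            yield names[index]


sequence = "".join(islice(weighted_round_robin({"A": 4, "B": 3, "C": 2}), 9))
print(sequence)  # AABABCABC
```

Over one full cycle of 9 requests, A is chosen 4 times, B 3 times, and C 2 times.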

Drawbacks:

- This algorithm can lead to a dynamic load imbalance if the cost of individual requests varies widely over time.

- For example, requests to watch videos or download large files may be interspersed with lightweight requests to read information. Heavy requests can then pile up on a server with a high weight, and the load is no longer balanced.

- Over a short period of time, it is quite possible that most of the heavy requests are directed to a single server.

3. Dynamic Round Robin algorithm (DRR)

- The DRR algorithm works almost the same as the WRR algorithm. The difference is that the weights here come from continuous monitoring of the servers, so they change constantly.

- This is a dynamic algorithm (the algorithms above are static): the choice of server is based on real-time analysis of server performance (for example, the current number of connections on each server, or which server is currently the fastest, …).

- This algorithm is usually not implemented in simple load balancers; it is often found in F5 Networks load balancing products.
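As a rough illustration of "weights that change with monitoring", the Python sketch below recomputes integer weights from live connection counts. The rule used here (weight proportional to free capacity, never below 1), the function name, and all the numbers are assumptions made up for this example; real products use their own metrics and formulas.

```python
def recompute_weights(active, capacity, scale=10):
    """Turn live connection counts into integer weights (hypothetical rule).

    A server with more free capacity gets a larger weight. Every server
    keeps at least weight 1 so it is never starved completely.
    """
    weights = {}
    for name, in_use in active.items():
        free = max(capacity[name] - in_use, 0)
        weights[name] = max(1, free * scale // capacity[name])
    return weights


# Hypothetical capacity: each server can hold 100 connections.
capacity = {"A": 100, "B": 100, "C": 100}

weights = recompute_weights({"A": 10, "B": 40, "C": 50}, capacity)
print(weights)  # {'A': 9, 'B': 6, 'C': 5}

# Later the load shifts, so the next measurement produces new weights.
weights_later = recompute_weights({"A": 95, "B": 40, "C": 0}, capacity)
print(weights_later)  # {'A': 1, 'B': 6, 'C': 10}
```

The resulting weights could then be fed into a weighted round robin, which is the sense in which DRR is "WRR with changing weights".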

4. Fastest algorithm

This algorithm is based on the response time of each server: it chooses the server with the fastest response time. The response time is the interval between the moment a packet is sent to the server and the moment the reply is received.

The load balancer itself does the sending and receiving. Based on the measured response times, it knows which server to send the next request to.

For example:

- When accessing youtube.com, if the user's IP is from Vietnam, the request will be routed to a server in Vietnam for processing. This saves a lot of international bandwidth and improves the speed of the connection.

- The fastest algorithm is often used when servers are in different geographical locations. A user who is close to a given server gets the fastest response time from that server, so that server is chosen to serve them.
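The selection step itself is simple once the response times are known. Here is a minimal Python sketch; the server names and the millisecond values are invented for illustration, and how the times are actually measured is left out.

```python
def pick_fastest(response_times_ms):
    """Return the server with the smallest measured response time."""
    return min(response_times_ms, key=response_times_ms.get)


# Hypothetical measurements taken by the load balancer for one client.
measured = {"vietnam": 20.0, "singapore": 45.0, "us-west": 180.0}
chosen = pick_fastest(measured)
print(chosen)  # vietnam
```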

5. Least Connection (LC) algorithm

Requests are directed to the server with the fewest active connections in the system. This is considered a dynamic algorithm, because it must count the number of active connections on each server.

For a system with similarly configured servers, LC works well even when the load of connections varies over a large range. Therefore, using LC overcomes the disadvantages of RR.

At first glance it seems that LC would also work well when the servers have different configurations, but in reality that is not true.

Drawbacks:

- TCP's TIME_WAIT state usually lasts 2 minutes, and during those 2 minutes a busy server can receive tens of thousands of connections.

- Suppose server A is twice as capable as server B. Server A processes thousands of requests and keeps those connections in TCP's TIME_WAIT state. Server B, meanwhile, is treated the same as server A, but because of its lower configuration it is much slower. Thus, the LC algorithm does not work well when the servers have different configurations.
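The core bookkeeping of least connection can be sketched in Python as follows. The class and method names are hypothetical; the sketch only counts connections and knows nothing about server capacity, which is exactly the weakness described above.

```python
class LeastConnections:
    """Track active connections per server and pick the least loaded one."""

    def __init__(self, servers):
        self.active = {name: 0 for name in servers}

    def acquire(self):
        # On a tie, min() returns the first minimum, so the server
        # listed first wins.
        name = min(self.active, key=self.active.get)
        self.active[name] += 1
        return name

    def release(self, name):
        # Call this when the connection to `name` has finished.
        self.active[name] -= 1


lb = LeastConnections(["A", "B"])
first = lb.acquire()    # A (tie, listed first)
second = lb.acquire()   # B (A already has one connection)
lb.release("B")         # B finishes its request, A is still busy
third = lb.acquire()    # B again, because it now has fewer connections
print(first, second, third)  # A B B
```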

II. Load balancing demo using Nginx:

B1. Download and install nginx using brew.

- Install: brew install nginx

- Start nginx: sudo nginx

- Open the test page, guys: http://localhost:8080

B2. Create a demo backend application and run it on 2 different ports.

- I use Spring Boot and run it on 2 ports, 9876 and 9875, guys.

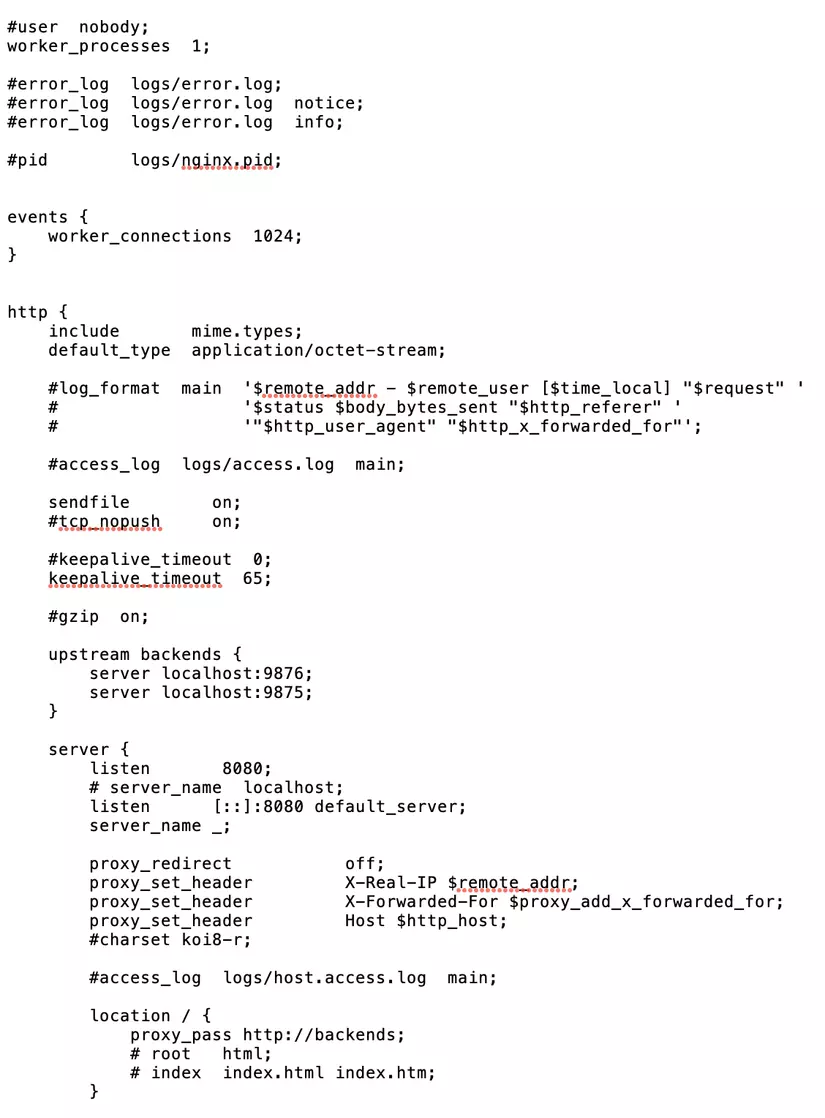

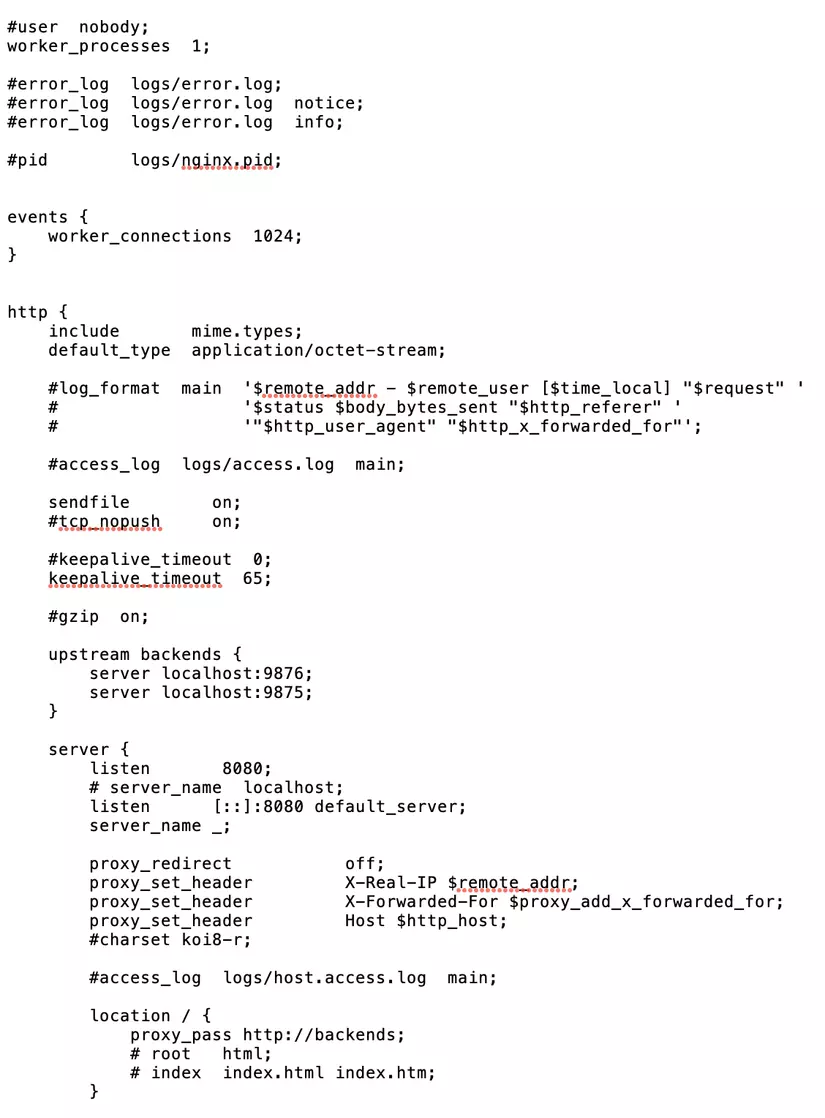

B3. Open the nginx.conf file to configure it.

Add the following config:

    upstream backends {
        server localhost:9876;
        server localhost:9875;
    }

Here, localhost:9876 and localhost:9875 are the addresses of the 2 running application instances.

In the location block:

    location / {
        proxy_pass http://backends;
        # root html;
        # index index.html index.htm;
    }

B4. Run nginx and test, guys!