Welcome to the first post in my Learn Docker series.

In this first article, we will take 10-15 minutes to learn what Docker is, what it brings, why you should use it and who is using it.

The later posts are optional and you can read only the ones you care about, but this one is mandatory: if you skip it, you may end up using Docker without seeing how it is any better than the usual way of working.

Docker – Hello beauty

The thing is …

There was a guy named Dau To who had just joined a company. He was new to the team and still finding his feet when the leader handed him a project to install, test and get an overview of. So he started installing: PHP, then Laravel, then MySQL. OK, test run, and... BUILD FAILED.

He ran to ask the leader, who said he needed to install Redis. So he installed Redis. After struggling all day, he realized that installing Redis on Windows is harder than reaching the sky, while on Ubuntu or Mac it is a piece of cake. So that evening he went home and installed Ubuntu on his computer. Then he built again: BUILD FAILED.

He asked the leader again and learned that he had not run composer dump-autoload to load the PHP libraries, nor did he know that he had to run php artisan storage:link. After running all of that and opening the site, the realtime feature still did not work. He asked the leader yet again and learned that he had not started the laravel echo server. He felt sad and frustrated.

Then there was a guy named Mat Nho, who had to maintain 3-4 projects, each needing things like PHP, NodeJS, MySQL and MongoDB. One day he installed something and, boom, MySQL died. However thoroughly he wiped it and reinstalled, the same red error kept printing, and all the related projects went down because they all need the DB to run. He was sad, and from then on, whenever he installed anything on the server, his hands trembled like the first day he visited his future father-in-law’s house.

Through the two stories above we see the following fears:

- In the first story: each project needs many things and libraries installed before it can run, the documentation is often unclear, and even when documentation exists we sometimes still cannot install and run the project because the environments differ. Local machines run Windows while the server is, 96.69% of the time, Linux.

- In the second story: resources are shared between projects, and as the number of projects grows things get tangled, so every decision to install or remove a shared resource becomes a real headache. It very easily ends in “I uninstalled it but now I can’t reinstall it”.

Anyone who has been in the same situation, raise your hand. OK, put your hands down and let’s start the next section.

What is Docker

From situations like the ones above and dozens of other reasons, Docker was born.

The “textbook” definition of Docker:

Docker is an open source project that automates the deployment of Linux and Windows applications into virtualized containers. Docker provides an abstraction and automation layer based on Linux.

My own simplified definition:

Docker is a tool that lets you run projects in a specific, clearly defined environment that is independent of the host (original) environment. Applications running in Docker are called Containers.

I know you still have plenty of questions after reading the definition. Take it slowly; time will answer them. Let’s see what’s good about Docker.

What Docker brings

Here is my summary of what is good about Docker:

- Docker gives you exactly the environment you ask for. For example, if you need Ubuntu with PHP 7.2, NodeJS 8.0 and MySQL 5.2, Docker will give you that.

- The environment in Docker is independent of the host environment: Docker creates a “virtual” environment in which you run your project, regardless of your host operating system. So whether you are on Windows or Mac, you can still run the project under Ubuntu or any other environment you need (as long as Docker supports it).

- Once defined, a Docker environment is “immutable”: you can set it up anywhere and get the same environment you defined.

- Each project has its own configuration file. Even if you come back to the project 10 years later, you will still know what it needs to run and what to do. You only need to hand the configuration file to others and they will know what to do to run it.

- Because each project can be set up in its own environment, projects do not conflict or share resources in confusing ways (unless we want them to). This minimizes interdependence: installing, adding or removing libraries and configuration in one project does not affect the others.

- Docker can self-heal (automatically recover and restart) when an error occurs.

Stop! Didn’t we already have virtual machines??

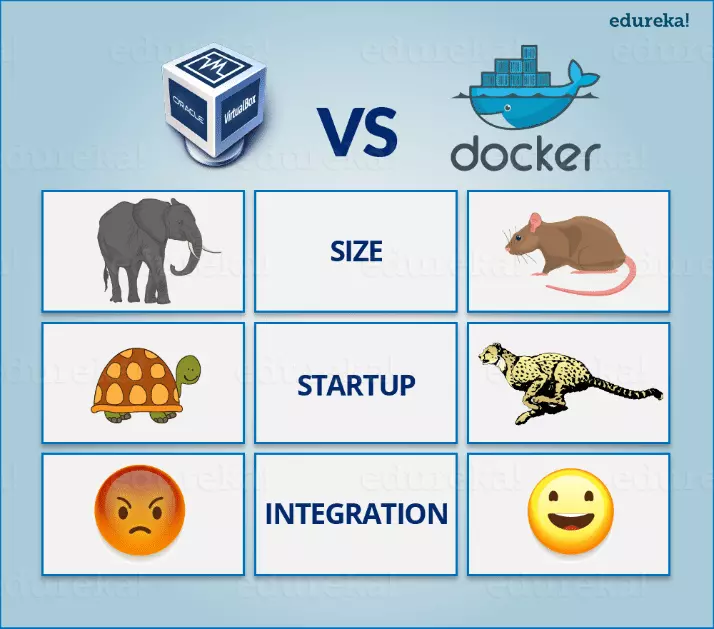

After reading the above, you may notice that virtual machines such as VMware or VirtualBox could already do similar things: create virtual environments independent of the host operating system.

But one of the biggest drawbacks of a virtual machine, I think, is that it is heavy, very complicated to install, and very slow. With a virtual machine we have to carve out a fixed amount of resources for it, so the host operating system and the virtual machine share the hardware and both lose performance. And when you hit an error, you often need to reinstall everything.

Docker creates a “virtual environment” rather than a virtual machine. It shares hardware (RAM, CPU, ...) with the host environment, so speed is essentially the same, and it can share files with the host if desired. Creating a “virtual” environment in Docker is also quick and easy, taking just one or a few commands.

So you need to be clear about what Docker brings and what virtual machines bring: one creates a virtual environment that shares hardware with the host environment; the other gives us a whole virtual machine with everything, from hardware to file system, separate from the real machine. (Oh, after hearing all this my mind is feeling rather virtual too.) Have a look at the picture below for a funny but accurate comparison of using Docker versus using a virtual machine.

Why do I need Docker?

Here are some of my thoughts on why you should love Docker:

- When many people work on one project, some on Windows, some on Mac, some on Linux, the way to install the software and libraries needed to run it differs on each platform, and the installation itself may even alter the project. As a result the project behaves differently, or wrongly, across platforms.

- My biggest fear in the old days: the code runs deliciously on local, but on the server it blows up.

- Each project needs a lot of companions: MySQL, Redis, extensions, … Remembering to install each one is tiring, and remembering how to configure it properly is even more tiring. The biggest pain is when an installation breaks and uninstalling and reinstalling still does not fix it.

- When we have many projects sharing many things, changing a shared resource affects all the related projects.

- And the case I find most painful: when we change environments (for example, moving to a new server), we have to move the old project to the new environment. This is when the real pain shows up: how to move all the data, reconfigure everything from scratch, install a billion things.

- …. and so on and so forth

Docker helps you solve all of the above in a very simple, easy and cute way (just like the author of this article).

Who is using Docker

Based on what I know and the seminars and events I have attended, Docker is used in almost every company I come across: Google, Facebook, Amazon, Tiki, my old company, and so many others that I cannot list them all here.

Chances are your company is using Docker too; try asking your leader.

Today, using Docker also gives us access to many accompanying tools:

- Kubernetes: a tool to manage projects running on Docker, with automatic healing on errors, auto scaling, auto deployment, automatic everything.

- CI / CD: I will cover this later in the series. With CI/CD we just code and commit; the rest (testing, error checking and deploying to the real environment) is done automatically, with no need to install messy tools for auto-deployment.

- First-class support for Docker applications from source code management platforms such as Github, Gitlab, Bitbucket, … adds to the value of Docker.

With all the great things Docker brings, I think that is why Docker is currently such an extremely popular way to run projects in a “virtualized” environment instead of the traditional way.

Concepts to grasp

Jumping into Docker means there is a lot to learn, but if I covered it all in this one article, everyone would be completely lost. So I will only mention a few basic concepts that I find necessary and that we use often; we will gradually explore the rest in the following articles.

Image

An Image is one of the most basic units in Docker. An Image defines an environment and everything in it. For our application to run, it needs an Image.

For example, in an Image we can define the following components:

- Environment: Ubuntu 16.04

- PHP 7.2

- MySQL 5.2

We can then package all of the above (Ubuntu, PHP, MySQL) into one Image, which we can keep for ourselves or publish for others to use.
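In practice an Image is usually described in a text file called a Dockerfile. Below is a minimal sketch, not a production setup: it assumes the official ubuntu:16.04 base image and installs PHP from Ubuntu's own package repository, so the exact PHP version is whatever that repository ships (not necessarily 7.2). The image name my-php-env and the scratch directory are hypothetical, and MySQL is left out to keep the example short.

```shell
# Write a minimal Dockerfile into a scratch directory (hypothetical example project).
workdir="$(mktemp -d)"

cat > "$workdir/Dockerfile" <<'EOF'
# Base environment: Ubuntu 16.04
FROM ubuntu:16.04
# Install the PHP command-line interpreter from Ubuntu's package repository
RUN apt-get update && apt-get install -y php-cli
# Default command when a container is started from this image
CMD ["php", "--version"]
EOF

echo "Dockerfile written to $workdir"

# To build the Image (requires a running Docker daemon):
#   docker build -t my-php-env "$workdir"
```

Everything needed to recreate the environment lives in that one file, which is what makes it easy to hand to someone else.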

Container

A Container is an instance of an Image. The simplest way to understand it: think of an Image as a class, and a Container as an object instantiated from that class.

From one Image we can create tens or hundreds of Containers. Each project can consist of one or more containers created from one or more images. For example, we have a PHP project:

- Ubuntu environment -> Ubuntu 16.04 image required

- PHP on top -> php7.2 image required

So to run the project above, we need to create 2 containers: one Ubuntu container and one PHP 7.2 container.
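To make the class/object analogy concrete, here is a small sketch that starts several Containers from the same Image. The helper name start_many and the container names demo-1, demo-2, ... are hypothetical; nginx:alpine in the usage line is just a small public image picked for illustration.

```shell
# Hypothetical helper: start COUNT containers from the same IMAGE.
start_many() {
  image="$1"   # image to instantiate, e.g. nginx:alpine
  count="$2"   # how many containers to create
  i=1
  while [ "$i" -le "$count" ]; do
    # Each "docker run" creates a new, independent container from the image
    docker run -d --name "demo-$i" "$image"
    i=$((i + 1))
  done
}

# Usage (requires a running Docker daemon):
#   start_many nginx:alpine 3
#   docker ps        # lists demo-1, demo-2 and demo-3
```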

Port

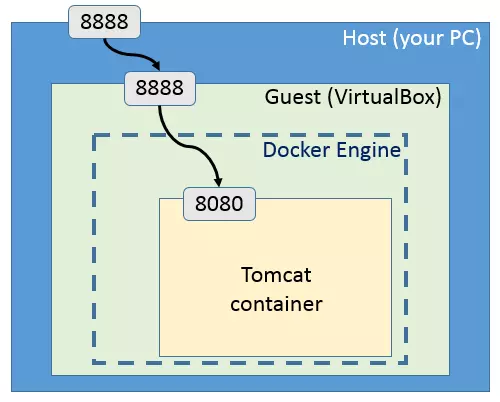

Because the environment in Docker is completely independent of the host environment, to use applications running in Docker we need to open a port from Docker so they can be called from outside.

The proper term is “port mapping”: we open a port on the host operating system and forward it to a port inside Docker. That way we can call applications running in Docker.

As in the image above, we have a Tomcat server running in a Docker container. Inside the container we open port 8080 for access from the outside environment, and on the outside environment we open port 8888 for real users to query. Users therefore call port 8888 to communicate with the application running in the Docker container.
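The Tomcat example can be sketched on the command line with the -p HOST_PORT:CONTAINER_PORT option of docker run. This is only an illustration: the helper name start_tomcat and the container name are hypothetical, and the tomcat:9.0 image tag is an assumption (any Tomcat image listening on 8080 would do).

```shell
# Hypothetical helper: start Tomcat and map a host port to container port 8080.
start_tomcat() {
  name="$1"        # container name chosen by you
  host_port="$2"   # port opened on the host, e.g. 8888
  # -d runs in the background; -p maps host_port (outside) to 8080 (inside)
  docker run -d --name "$name" -p "${host_port}:8080" tomcat:9.0
}

# Usage (requires a running Docker daemon):
#   start_tomcat tomcat-demo 8888
#   curl -I http://localhost:8888   # any HTTP response means the mapping works
```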

Volume

Because the Docker environment is independent, the file system of an application running in Docker is independent too. In practice, however, we almost always need to persist files: DB data, logs, images, etc. For the host environment to access the file system of an application running in Docker, we need a Volume.

With a Volume, the host environment can tell Docker: whenever you create a new log file, save a copy for me and keep it “mirrored”; whether I edit or delete it on my side (outside) or you edit or delete it on your side, the log file must stay the same in both environments. (The proper term is mounting from the outside environment into Docker.)
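As a rough sketch of the log-file scenario, the -v HOST_PATH:CONTAINER_PATH option of docker run mounts a host directory into the container. The helper name run_with_logs is hypothetical, and the container path /usr/local/tomcat/logs assumes the layout of the official tomcat image; check the documentation of the image you actually use.

```shell
# Hypothetical helper: run Tomcat with its log directory mounted from the host.
run_with_logs() {
  host_dir="$1"   # absolute path on the host, e.g. "$PWD/logs"
  mkdir -p "$host_dir"
  # Files written to /usr/local/tomcat/logs inside the container
  # appear in host_dir on the host, and vice versa
  docker run -d -p 8888:8080 \
    -v "${host_dir}:/usr/local/tomcat/logs" \
    tomcat:9.0
}

# Usage (requires a running Docker daemon):
#   run_with_logs "$PWD/logs"
#   ls "$PWD/logs"   # Tomcat's log files show up here
```

An absolute host path is used on purpose, since a bare name in that position is treated as a named volume rather than a directory.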

Concepts covered, time to set up

Setup Gitlab

In this series, I encourage you to use Gitlab to store source code, because Gitlab offers free private repos, free CI/CD, and a free container registry (where we can push Images and store them for later). Gitlab also supports many other great things for free, and I hope to share as many of them with you as I can.

So go create yourself a Gitlab account (rest assured, it is free and does not ask for a credit card). Visit gitlab.com to create a new account.

Setup Docker and Docker-compose

Docker

Next you need to install Docker (of course):

- Install for Windows here. Be sure to read the System Requirements, and search Google if you have questions

- Install for Ubuntu here

- Install for Mac here

After installing, check whether the installation succeeded by running:

    docker --version

Please don’t ask in the comments how to install it; Google has plenty of better answers.

Docker-compose

To run a project in Docker, we can start each required container with commands like:

    docker run containerA
    docker run containerB
    ....

BUT in real projects we mostly use docker-compose, because in reality a project almost always needs more than one container. So in this series I will use docker-compose to run the demo projects in my articles. Rest assured, docker-compose does not take any extra brainpower; it is all the same.

Docker-compose is a tool to configure and run multiple Docker containers at the same time. It makes running one or several containers together easier and gives us an easy overview of the project.
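To give a feel for that overview, here is a minimal sketch of a docker-compose.yml with two containers. Everything in it is an assumption for illustration: the service names web and db, the php:7.2-apache and mysql:5.7 image tags, the ./src folder and the placeholder password are not taken from any real project in this series.

```shell
# Write a minimal docker-compose.yml into a scratch directory (hypothetical project).
project_dir="$(mktemp -d)"

cat > "$project_dir/docker-compose.yml" <<'EOF'
version: "3"
services:
  web:                        # hypothetical service name
    image: php:7.2-apache     # PHP 7.2 with the Apache web server
    ports:
      - "8888:80"             # host port 8888 -> container port 80
    volumes:
      - ./src:/var/www/html   # mount local ./src into the web root
  db:                         # hypothetical service name
    image: mysql:5.7
    environment:
      MYSQL_ROOT_PASSWORD: example   # placeholder password, change it
EOF

echo "Wrote $project_dir/docker-compose.yml"

# To start both containers (requires Docker and docker-compose):
#   cd "$project_dir" && docker-compose up -d
# To stop and remove them again:
#   docker-compose down
```

One file lists every container, its ports and its volumes, so a single command replaces a series of separate docker run calls.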

Installation:

- On Windows and Mac, Docker-compose already ships with Docker, so you do not need to do anything more

- On Ubuntu, follow the instructions here (read them carefully, install the latest version and complete all of Step 1)

After installing, check whether it succeeded by running:

    docker-compose --version

End

In this article I briefly introduced Docker and the benefits it brings, along with the “ammunition” we need to set up before starting the next post.

In the following articles, I will show you how to dockerize specific applications (NodeJS, Laravel, Vue, Python, …), that is, run them in a Docker environment. From now on I will use the word dockerize for running a project inside Docker (just like our international friends do).

Each of the following lessons is a specific example, so you can learn by doing and see the results. I will focus on practice, because for me the fastest way to learn is to practice. As Laradock’s homepage says:

    Use Docker First - Then Learn About It Later (use Docker first, learn about it later; that is, you will gradually understand what it is and how to apply it properly)

If you have any questions, please leave me a comment. See you in the following articles. (The next article is the first hands-on one, with many important things I want to share, so please read it.)
