The first plugin to use machine learning to detect “crispy” chewing sounds.

- Tram Ho

YouTube keeps growing in popularity: whenever people want to watch videos, the first thing they open is YouTube, especially since “Ms. Vy” (a Vietnamese pun on COVID) came to visit and nobody wants to go out. And of course, no YouTube session is complete without some delicious potato chips. The trouble is that the crunching sound of chewing them drowns out the dialogue in the video, which is really annoying. So Lay’s introduced Lay’s Crispy Subtitles to movie lovers who also love the delicious crunch of its potato chips: the first plugin that converts crunching sounds into subtitles!

Eat as many crispy potato chips as you crave and still follow every line of your favorite videos

Lay’s Crispy Subtitles is the result of a collaboration between Lay’s, Happiness Saigon and BLISS. It relies on a machine learning model that runs directly inside a browser plugin. This article explains how the plugin works.

Data collection

To teach the model what crunching sounds like, the team collected 17,512 different “crispy” sound samples. That also means a lot of potato chips were “sacrificed” during the development of the plugin.

Preprocessing

Next, the recordings are prepared for training. Because the plugin runs entirely on the user’s side, in the browser, using TensorFlow.js, the samples must follow a strict set of conditions and steps:

● All audio is mono with a sample rate of 22,050 Hz (22.05 kHz), converted if needed.

● Audio samples are extracted with librosa in Python and then validated or discarded.

● Next, Mel-frequency features (Mel spectrograms) are computed to turn the raw waveform into perceptually scaled information and to reduce the data size. Finally, Meyda is used to extract the features.
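As a rough illustration of the Mel step, the pure-Python sketch below uses the common HTK-style formula m = 2595 · log10(1 + f/700) to place triangular Mel bands evenly on a perceptual scale between 0 Hz and the 11,025 Hz Nyquist limit of 22,050 Hz audio. It is not the team’s code: librosa and Meyda compute Mel features internally (librosa defaults to the slightly different Slaney formula), and the choice of 40 bands is an assumption.

```python
import math

def hz_to_mel(f_hz: float) -> float:
    """HTK-style Mel scale: roughly linear below ~1 kHz, logarithmic above."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)

def mel_to_hz(m: float) -> float:
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_band_centers(n_bands: int, sample_rate: int, f_min: float = 0.0) -> list:
    """Centre frequencies (Hz) of n_bands triangular filters spaced evenly on the Mel scale.

    n_bands + 2 points are spaced evenly between f_min and the Nyquist frequency;
    the first and last points are only the outer edges of the first and last triangles.
    """
    f_max = sample_rate / 2.0  # Nyquist frequency
    m_min, m_max = hz_to_mel(f_min), hz_to_mel(f_max)
    step = (m_max - m_min) / (n_bands + 1)
    return [mel_to_hz(m_min + step * i) for i in range(1, n_bands + 1)]

SAMPLE_RATE = 22_050   # sample rate stated in the article
N_MEL_BANDS = 40       # assumption: the band count is not given in the article

centers = mel_band_centers(N_MEL_BANDS, SAMPLE_RATE)
print(f"{len(centers)} bands, first {centers[0]:.1f} Hz, last {centers[-1]:.1f} Hz")
```

Low-frequency bands end up narrow and high-frequency bands wide, which is what makes the representation “perceptual” and also much smaller than a full linear-frequency spectrum.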

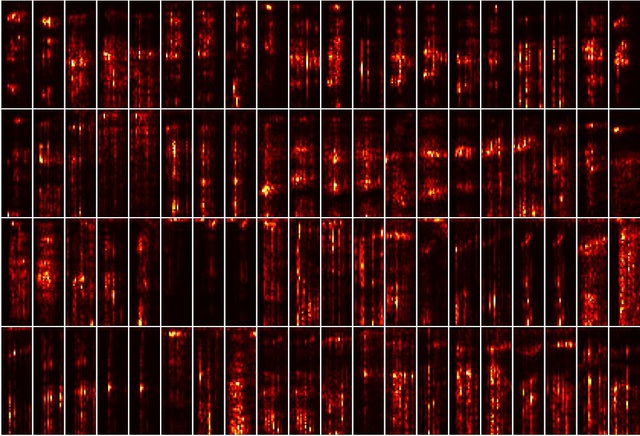

After these steps, Lay’s Crispy Subtitles obtains spectral information like that shown in the image above.

This is the model’s input, which is used to decide whether a 232-millisecond sample counts as a “crispy” crunching sound.
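The 232-millisecond figure fits neatly with 22,050 Hz audio: it is almost exactly 5,120 samples, i.e. 10 frames of 512 samples. The frame size of 512 and the 10-frame window in the sketch below are assumptions inferred from this arithmetic, not details confirmed by the article.

```python
SAMPLE_RATE = 22_050      # Hz, from the article
FRAME_SIZE = 512          # assumption: samples per analysis frame
FRAMES_PER_WINDOW = 10    # assumption: frames per classified window

def samples_for_duration(duration_ms: float, sample_rate: int) -> float:
    """Number of audio samples covered by a duration in milliseconds."""
    return duration_ms / 1000.0 * sample_rate

def duration_ms(n_samples: int, sample_rate: int) -> float:
    """Duration in milliseconds of n_samples at the given sample rate."""
    return n_samples / sample_rate * 1000.0

window_samples = FRAME_SIZE * FRAMES_PER_WINDOW            # 5120 samples
print(samples_for_duration(232, SAMPLE_RATE))              # ~5115.6 samples
print(round(duration_ms(window_samples, SAMPLE_RATE), 1))  # 232.2 ms
```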

Building the model

Next, Keras and TensorFlow are used to build the sound classification model. The dataset is first labeled as positive or negative and then split into training and test sets with scikit-learn.
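With scikit-learn, a split like this is typically done with `train_test_split(X, y, test_size=..., stratify=y)`, so that positive and negative samples keep the same proportions in both sets. The pure-Python sketch below mimics that idea to make the logic visible; the 80/20 ratio, the seed and the toy data are assumptions, not the team’s settings.

```python
import random
from collections import defaultdict

def stratified_split(samples, labels, test_size=0.2, seed=42):
    """Split (sample, label) pairs so each label keeps roughly the same
    proportion in the training and test sets."""
    by_label = defaultdict(list)
    for sample, label in zip(samples, labels):
        by_label[label].append(sample)

    rng = random.Random(seed)
    train, test = [], []
    for label, items in by_label.items():
        items = items[:]                 # copy before shuffling
        rng.shuffle(items)
        n_test = round(len(items) * test_size)
        test.extend((x, label) for x in items[:n_test])
        train.extend((x, label) for x in items[n_test:])
    rng.shuffle(train)
    rng.shuffle(test)
    return train, test

# Toy data: 1 = "crispy" (positive), 0 = anything else (negative).
samples = [f"clip_{i:03d}" for i in range(100)]
labels = [1 if i < 40 else 0 for i in range(100)]

train, test = stratified_split(samples, labels)
print(len(train), len(test))              # 80 20
print(sum(label for _, label in test))    # 8 positives in the test set
```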

After a lot of experimentation, the team settled on a classifier made of two Conv2D + MaxPooling2D blocks, a Dense layer with 128 units and ReLU activation, and a Dense output layer with 2 units and softmax activation. Dropout is added between the layers to prevent overfitting.

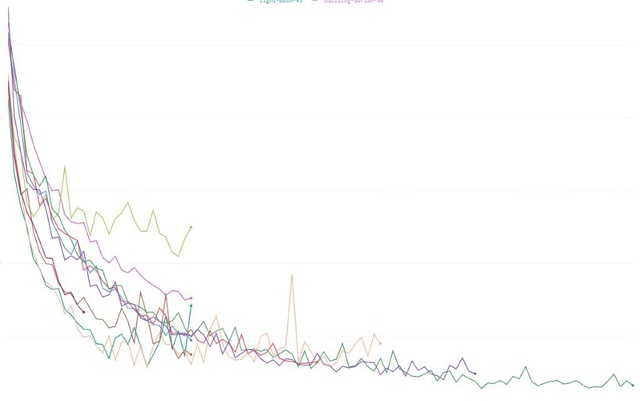
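To see how the layers described above fit together, the sketch below tracks tensor shapes and trainable parameter counts through the stack. The input shape (10 frames × 40 Mel bands × 1 channel), the filter counts (32 and 64), the 3×3 kernels, 2×2 pooling and “valid” padding are all assumptions, since the article does not give them. In Keras these would correspond to layers such as `Conv2D(32, (3, 3))`, `MaxPooling2D((2, 2))`, a `Flatten()` layer (needed before the Dense layers but not mentioned in the article), `Dense(128, activation="relu")`, `Dropout(...)` and `Dense(2, activation="softmax")`.

```python
def conv2d(shape, filters, kernel=3):
    """Output shape and parameter count of a Conv2D layer with 'valid' padding, stride 1."""
    h, w, c = shape
    out = (h - kernel + 1, w - kernel + 1, filters)
    params = kernel * kernel * c * filters + filters   # weights + biases
    return out, params

def maxpool2d(shape, pool=2):
    """Output shape of MaxPooling2D with 'valid' padding (stride = pool size); no parameters."""
    h, w, c = shape
    return (h // pool, w // pool, c), 0

def dense(units_in, units_out):
    """Parameter count of a fully connected layer (weights + biases)."""
    return units_in * units_out + units_out

shape = (10, 40, 1)        # assumption: 10 frames x 40 Mel bands x 1 channel
total = 0
for layer, filters in (("conv", 32), ("pool", None), ("conv", 64), ("pool", None)):
    shape, params = conv2d(shape, filters) if layer == "conv" else maxpool2d(shape)
    total += params
    print(layer, shape, params)

flat = shape[0] * shape[1] * shape[2]   # Flatten
total += dense(flat, 128)               # Dense(128), ReLU
total += dense(128, 2)                  # Dense(2), softmax
# Dropout layers would sit between these blocks but add no parameters.
print("flattened:", flat, "total parameters:", total)
```

Under these assumptions most of the parameters sit in the first Dense layer, which is why the pooling layers matter: they shrink the flattened vector before it reaches the 128-unit layer.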

Training

The model is trained with a categorical cross-entropy loss and the Adam optimizer, using a batch size of 64 for 75 epochs. The final model reaches a loss of 0.0831 and an accuracy of 0.986.
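The training setup can be made concrete with a little arithmetic. Categorical cross-entropy averages −Σ y·log(p) over a batch, and with a batch size of 64 each epoch takes ⌈N/64⌉ gradient updates. The sketch below assumes a hypothetical training set of 14,009 samples (about 80% of the 17,512 collected) and uses made-up predictions; it illustrates the loss, not the team’s training code. In Keras the equivalent would be `model.compile(optimizer="adam", loss="categorical_crossentropy", metrics=["accuracy"])` followed by `model.fit(..., batch_size=64, epochs=75)`.

```python
import math

def softmax(logits):
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]

def categorical_cross_entropy(y_true_one_hot, y_pred_probs, eps=1e-7):
    """Mean cross-entropy over a batch of one-hot labels and predicted probabilities."""
    total = 0.0
    for truth, probs in zip(y_true_one_hot, y_pred_probs):
        total -= sum(t * math.log(max(p, eps)) for t, p in zip(truth, probs))
    return total / len(y_true_one_hot)

BATCH_SIZE, EPOCHS = 64, 75      # from the article
N_TRAIN = 14_009                 # assumption: roughly 80% of 17,512 samples

steps_per_epoch = math.ceil(N_TRAIN / BATCH_SIZE)
print(steps_per_epoch, steps_per_epoch * EPOCHS)   # updates per epoch, updates in total

# Two toy predictions over the classes [negative, positive].
probs = [softmax([0.2, 2.5]), softmax([1.8, -0.4])]
labels = [[0, 1], [1, 0]]
print(round(categorical_cross_entropy(labels, probs), 4))
```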

Plugin setup and model integration

All of the steps above are carried out in the Python ecosystem. The trained model is then converted with TensorFlow.js, shrunk to under 5 MB, and bundled into the plugin. When the plugin is active, subtitles appear. If a video has subtitles that are turned off, the model recognizes the crunching and automatically turns the subtitles on after 10 seconds.
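Whether a model fits under 5 MB depends mostly on its parameter count and on how many bytes are stored per weight. The TensorFlow.js converter can optionally quantize weights to 16 or 8 bits; the article does not say whether this was done. The sketch below uses a hypothetical 1.5 million parameters and ignores the small model.json topology file.

```python
BYTES_PER_WEIGHT = {"float32": 4, "float16": 2, "uint8": 1}
SIZE_BUDGET_MB = 5.0                    # limit mentioned in the article

def weight_size_mb(n_params: int, dtype: str) -> float:
    """Approximate size of the weight files in MB (1 MB = 1,000,000 bytes).
    Ignores the model.json topology file and any per-tensor metadata."""
    return n_params * BYTES_PER_WEIGHT[dtype] / 1_000_000

N_PARAMS = 1_500_000                    # hypothetical parameter count
for dtype in BYTES_PER_WEIGHT:
    size = weight_size_mb(N_PARAMS, dtype)
    verdict = "fits" if size < SIZE_BUDGET_MB else "too big"
    print(f"{dtype:>7}: {size:.1f} MB ({verdict})")
```

In this hypothetical case the float32 weights would exceed the budget, while either quantized variant would fit, at the cost of some numerical precision.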

It is a real “lifesaver” from Lay’s for everyone who likes to munch on potato chips while watching YouTube.

Try Lay’s Crispy Subtitles for yourself: download it for free from the Chrome Web Store at http://bit.ly/LaysCrispySubtitles, install the plugin, open YouTube, and enjoy your videos while munching on Lay’s potato chips.

Source: Genk