The story of the extremely simple API has been enchanted to be complicated and fancy.

Recently I was assigned a very simple task by the boss: Create 1 service api with only 1 endpoint Get item by ID . It seems that this is the problem of creating API CRUD for students who have just graduated, I am eager to plunge into the code with the upper heart . After only about 2 hours of setting up the environment, finding libraries, code, … I had a project using KoaJS with 1 endpoint as required. And everything here is the end.

First things first

But.

If the story ended so early, I wouldn’t be here to talk about what to do, right? Let’s take a short break before starting to dive in this half-meter deep pond.

He is Ming Monmen, 1 devops genuine cult beauty of simplicity, favored the swing at tomorrow seo getting to know one problem, but prefer to stand on the shoulders of giants to embark on solving the problem . Today I am very pleased to accompany you in this crazy carp story. We look forward to your support and encouragement to keep motivated to continue slashing on the Wanderer.

Let’s get to our main topic: the main API is used to get item by ID .

At first glance, you will not find it worth thinking about, but behind this simple API are very complex requirements coming from the position of the system designer. But that’s the story behind, let’s take a quick look at some background knowledge to be able to understand this article:

- Eventual Consistency : Weak consistency of data

- Caching Strategy : Methods to implement caching

- Cache Eviction Policy : Rules for clearing the cache

- In-memory cache

- MongoDB change streams

Knead zo.

Note : All of the above benchmark results I performed on the local machine, not complete setup to measure so the numbers only have relative meaning when compared to each other without absolute meaning.

Context

Why do I have to make a separate service that contains only 1 API Get item by ID ? Well, it’s a long story, rooted in micro-services architecture.

In the micro-services system in general, there will usually be services containing information that is shared a lot throughout the system (such as Account , Product Catalog , …). These are critical services of the system, not only handle business logic itself but also a place to search data of many other services. Therefore, the amount of requests related to its read will be much more than the write , leading to us having to approach a different problem a little bit as follows:

The optimization problem for the internal read section is a fairly complex problem, so we decided to separate the internal read section into a separate service. This service will only serve as the data source for other services and focus on optimizing the read section. This separation allows me to be more comfortable in choosing a solution and avoid affecting the service that contains many existing business rules.

Requirements for internal read service:

- Low latency : The data from this service is often used as info data to serve the services that act as the API composition , that is, synthesize data from many services (including the main service that contains the logic – for example 1 list ID – and the auxiliary services contain info data). Therefore its latency greatly affects the entire user’s request. So the prerequisite is to have low latency.

- High throughput : As I said above, there will be many services that need information and call this service, so it needs to handle a huge number of requests. This is an equally important requirement compared to latency.

- Scalable : Can be scaled easily.

We can skip the optimization at the Database layer because these data tables are relatively small (about a few million rows) and query directly with the Primary Key . What we notice here is the application side optimization.

The first attempt

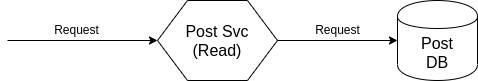

Initially this service was written in NodeJS with a very simple architecture:

Benchmark through the performance of the system has the following results:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 | <span class="token comment"># AB with concurrent level: 50</span> <span class="token comment"># KoaJS app with PM2 cluster mode </span> Requests per second: 2034.62 <span class="token punctuation">[</span> <span class="token comment">#/sec] (mean)</span> Time per request: 24.575 <span class="token punctuation">[</span> ms <span class="token punctuation">]</span> <span class="token punctuation">(</span> mean <span class="token punctuation">)</span> Time per request: 0.491 <span class="token punctuation">[</span> ms <span class="token punctuation">]</span> <span class="token punctuation">(</span> mean, across all concurrent requests <span class="token punctuation">)</span> Transfer rate: 1490.20 <span class="token punctuation">[</span> Kbytes/sec <span class="token punctuation">]</span> received Percentage of the requests served within a certain <span class="token function">time</span> <span class="token punctuation">(</span> ms <span class="token punctuation">)</span> 50% 21 90% 40 95% 48 100% 103 <span class="token punctuation">(</span> longest request <span class="token punctuation">)</span> |

As you can see, TP95 falls into 48ms with throughput 2000 RPS . Also a pretty good number if you want to use codebase from the service that is running. This method does not use cache, so it will burden the DB. However, at low load levels, MongoDB is perfectly balanced. The absence of cache also makes the data returned for requests is always the latest data.

The second attempt

Everything went well with the above API and everything went smoothly within a few weeks. However, I have noted that at some peak times, the latency of this API has skyrocketed (about a few hundred ms), making services dependent on it slow down. Do not accept the result just stop at 2000rps , I embarked on optimal research to increase the performance of this service. Although NodeJS satisfies most of the types of services and workloads I have done, but because this is one of the special services that has been separated, the application of another technology is entirely possible.

Another factor to consider is resources. My NodeJS application when running takes up quite a lot of resources, for example, for each instance taking 100 ~ 200MB of ram, running 10 ~ 15 instances (to ensure the system’s throughput) will take up quite a lot of resources.

For a turning point, force us to turn sideways. And I switched to rewrite the service in Golang . Because this is the service with the simplest logic, just fast, the application of a new language to handle quite easily and appropriately.

Still with the above model, no cache and call directly to the DB, the API using its Golang was born with the following benchmark results:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 | <span class="token comment"># AB with concurrent level: 50</span> <span class="token comment"># Gin gonic (Golang) with no cache</span> Requests per second: 4967.31 <span class="token punctuation">[</span> <span class="token comment">#/sec] (mean)</span> Time per request: 10.066 <span class="token punctuation">[</span> ms <span class="token punctuation">]</span> <span class="token punctuation">(</span> mean <span class="token punctuation">)</span> Time per request: 0.201 <span class="token punctuation">[</span> ms <span class="token punctuation">]</span> <span class="token punctuation">(</span> mean, across all concurrent requests <span class="token punctuation">)</span> Transfer rate: 5835.62 <span class="token punctuation">[</span> Kbytes/sec <span class="token punctuation">]</span> received Percentage of the requests served within a certain <span class="token function">time</span> <span class="token punctuation">(</span> ms <span class="token punctuation">)</span> 50% 8 90% 20 95% 26 100% 84 <span class="token punctuation">(</span> longest request <span class="token punctuation">)</span> |

Not bad, huh? My new API has 2.5 times the throughput and 2.5 times the TP95 . But coming here surely you will be wondering: If this is just an article showing that Golang is faster than NodeJS , what is it to write so long? The article also has nothing called the gray matter of the author.

Don’t be disappointed, good things are in the back.

The third attempt

Dissatisfied with myself, I started researching solutions to further optimize this result. And the first idea is to use caching . Of course, when using caching, I will have to solve some of the following problems:

- Cache mechanism like? How will data from the DB be loaded into the cache? On-demand or preload, …

- Resources that are more specific than RAM (because I will implement cache on RAM). Cache how many items, how much space?

- How to clear cache ? Because RAM resources are limited , it is almost impossible to store all the data in the DB into the cache, so we have to solve the problem of clearing cache when it is full. This can be done through expire, LRU, LFU, etc.

- Data is incorrect compared to DB. This is what happens with every cache system. It’s just how we handle it to shorten the lag time between the data in the db and the data in the cache.

Who am i, where is this?

The first thing we need to do is understand what we are doing. It sounds obvious, but do not underestimate this step. This is a step for you to understand your system, the data characteristics, the requirements for the response. I can summarize with a few quick questions as follows:

- The data to cache is easy to get : Yes (get item by ID is very fast). This question aims to evaluate the cost of 1 time retrieving data from the DB.

- Read / write ratio : 99/1. This number you can just estimate whether the data you need will read more or more and compare the correlation of these two requirements with each other for the purpose of evaluating cache update solutions.

- Consistency requirements : Medium. Does your data after the update need to be updated quickly to the hands of other services? Because this service focuses on providing info data for API compositions, I don’t need to put too much consistency. Information of 1 product / 1 user may be delayed and completely acceptable.

- Traffic characteristics : can be represented by the 80-20 rule, that is, 20% of items appear in 80% of traffic. This is a very important aspect in choosing Cache Eviction mechanism to increase Cache Hit Ratio rate.

Effective caching with Ristretto’s LFU algorithm

After having information on characteristics as well as system traffic, I started to search the library with appropriate cache mechanisms. And Ristretto is a brilliant library that meets all its requirements:

- High performance

- Concurrency-safe

- Support expire time

- Cache eviction policy: LFU increases Cache Hit Ratio rate when some items have a higher frequency of appearing in traffic than others.

Here I only mention one library is Ristretto, but I recommend you to learn carefully the cache mechanisms behind different libraries to be able to make a suitable choice for the type of data of the village. Dear.

You can read more about step by step building and benchmarking with other lib cache in these 2 blog posts: The State of Cache in Go and Introducing Ristretto: A High-Performance Go Cache

A little more talk about the two algorithms that clear the LRU cache (Least recently used) and LFU (Least frequently used) . You can read more about these two algorithms online to understand better, here I explain one way is that when the cache is full, to add one item to the cache, we will have to delete one or more other items . from the cache. So choosing which item to delete is the problem that cache systems need to solve. If you delete an item that is very frequently requested, of course, your miss cache rate will increase, so depending on the data distribution characteristics, you choose an appropriate algorithm. Here I choose LFU because my data has an uneven distribution, some items will be very often accessed. The right algorithm will help your Cache Hit rate increase significantly.

At this point, after implementing the cache, I have basically finished the work. Here is the benchmark result after the request sent has been completely cached:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 | <span class="token comment"># AB with concurrent level: 50</span> <span class="token comment"># Gin gonic (Golang) with in-mem cache</span> Requests per second: 39630.33 <span class="token punctuation">[</span> <span class="token comment">#/sec] (mean)</span> Time per request: 1.262 <span class="token punctuation">[</span> ms <span class="token punctuation">]</span> <span class="token punctuation">(</span> mean <span class="token punctuation">)</span> Time per request: 0.025 <span class="token punctuation">[</span> ms <span class="token punctuation">]</span> <span class="token punctuation">(</span> mean, across all concurrent requests <span class="token punctuation">)</span> Transfer rate: 8785.24 <span class="token punctuation">[</span> Kbytes/sec <span class="token punctuation">]</span> received Percentage of the requests served within a certain <span class="token function">time</span> <span class="token punctuation">(</span> ms <span class="token punctuation">)</span> 50% 1 90% 2 95% 3 100% 19 <span class="token punctuation">(</span> longest request <span class="token punctuation">)</span> |

Very impressive right? I increased the throughput of the service from 5k rps to 40k rps with latency reduced by 10 times for cached items.

Although implementing Ristretto into the project is very easy, the speed of the API has also been greatly improved. However, this is not our stop. Using the cache alone does not make your API invincible. There are many factors that need to be considered in order to use cache effectively:

- Number of cache items – this number affects how much ram an instance of your app uses.

- Cache Ratio Ratio – reflects the efficiency of your cache usage.

- The number of cache items cleared – reflects the correlation with the write flow and the distribution of items in your cache over time.

- Cache time – adjust data latency and clean up old items.

The process of refining these parameters is quite time-consuming, but there are some experiences to draw for your reference:

- Increasing the number of cache items increases the Cache Hit Ratio to a limited level (over which the efficiency of the cache decreases because the hit ratio does not increase but consumes more memory). For my systems, the number of cache items is equivalent to 20-30% of the total number of items requested during the day.

- Reducing the cache time reduces data lag, but reduces the Hit Ratio Cache quite a lot.

- If the number of cache items is deleted too much (updating the data takes place with a large frequency) will effectively reduce the use of cache greatly.

The number of cache items and the number of items deleted will depend entirely on the characteristics of your traffic and system, so to determine these numbers is no other way than to monitor your system, learn See how many items are requested in a day, how often, and in proportion to the amount of RAM for each instance to decide.

Solve data latency problems with MongoDB Change Streams

Data latency is always a very headache problem for all cache systems. I remember once reading a doctor who said:

If there is a valid invalidate cache mechanism, I can cache everything

It sounds reasonable, right? At the top, when there is no mechanism for invalidate cache, I only use expire time to invalidate cache. This is the easiest way to manage data latency. However, reducing the expire time will directly affect Cache Hit Ratio. In addition, the less-changed items are invalidated, while the frequently changing items still have to accept data delays.

Based on the data characteristics that update infrequently / less than the frequency of reading, I have boldly used a very good feature of MongoDB which is Change Streams . Thereby instead of listening to the application’s events to invalidate the cache, it will listen directly to the database event via Change Streams .

In a nutshell, when your collection has additional actions to delete, Change Streams will return you an event related to the changed documents. I rely on this event to invalidate the cache of that item in the application.

This way has some characteristics that you need to note:

- Change Streams are only available on replicaset or sharded cluster .

- Change Streams does not burden the Database, but listening to Change Streams will take a connection . You should note this number compared to the client size pool.

- Handling Change Streams burdens your own application . Please consider when using and should only be used with data that has a lower write frequency than read.

So my complete cache mechanism would be:

Thanks to this invalidate mechanism, I can increase the cache time up to 1-2 days, thereby increasing the Cache Hit Ratio to the maximum. (> 90%)

summary

Some achievements have been obtained:

- Optimize RAM usage for cache. 200MB for 30k active item with an expire of 1 day.

- Increase throughput API from 2k rps to 40k rps

- Reduces latency from TP95 48ms to TP95 3ms

- Cache Hit Ratio reaches 93% with 5 instances running in parallel (running multiple instances in parallel reduces Cache Hit Ratio). This is a very important indicator to help me evaluate the effectiveness of using cache on a natural data set (rather than sitting in the benchmark of 1 cached item and thinking it is good)

- Cache lag time is only in ms – the time to process Change Streams event handling.

It’s over. If you have any questions, please comment.