Principle of operation of Augmented Reality

- Tram Ho

Preface

As you know, the way we use computing technology has shifted clearly over the past 20 years: from fixed desktop computers in the office, to the web, then to social media, and then to mobile computing. As a result, sales of mobile phones and tablets have surpassed sales of desktop computers for many years now.

With ever-growing demands on the user interface, and with users leaving the desktop, it makes sense to bring the physical world into our computing experience, right? Since the physical world is not flat and is not made up only of documents, it can be regarded as a new UI metaphor.

AR (Augmented Reality) has the potential to become the leading UI metaphor for situated computing. AR provides a direct link between physical reality and virtual information about that reality. The world itself becomes a form of UI, which brings to mind a sentence I often hear: "Back to the real world!"

VR (Virtual Reality) offers users the vision of immersing themselves in an artificial world, and it has driven the development of game consoles with ever more stunning 3D graphics. However, as a UI metaphor for everyday use, VR falls short by definition, because it replaces the real world rather than connecting to it. So why not ask the question: "What would it be like if computing did not have to happen at the computer itself?"

In fact, both VR and AR build on many earlier ideas, and augmenting mobile computing with a connection to the real world is an attractive proposition. A few simple examples illustrate this idea:

Location-based services can provide personal navigation based on the Global Positioning System (GPS), while barcode scanners can help identify books in a library or products in a supermarket. However, these methods require explicit user actions and are rather coarse. Barcodes are useful for identifying books, but not for naming mountain peaks on a hiking trip. Similarly, they cannot help identify the tiny parts of a watch being repaired, let alone anatomical structures during surgery.

AR promises to create direct, automatic, and actionable links between the physical world and electronic information. It provides a simple and immediate user interface to an electronically enhanced physical world. The great potential of AR as a paradigm-changing UI metaphor becomes apparent when we consider some of the most recent milestones in human-computer interaction: the emergence of the World Wide Web, social networks, and the mobile device revolution.

The trajectory of this series of milestones is clear. First, there was a large increase in access to online information, which produced a large audience of information consumers. These consumers were then enabled to act as producers of information and to communicate with one another, and were finally given the means to manage their communications from anywhere, in any situation. However, the physical world, in which all of this information access, authoring, and communication takes place, is not easily linked to the user's electronic activity. That is, the model has been stuck in a world of abstract web pages and services that are not directly related to the physical world. Many technological advances have occurred in the field of location-based computing and services, sometimes called situated computing. However, the user interfaces of location-based services are still primarily derived from the models used on desktops, in apps, and on the web.

AR may change this situation and, in doing so, may redefine how information is browsed and authored. This UI metaphor and the technologies that enable it form one of the most attractive and future-oriented areas of computer science and application development. AR can overlay computer-generated information on views of the real world, amplifying human perception and cognition in remarkable new ways.

Definition and Scope

While virtual reality (VR) places users in a completely computer-generated environment, augmented reality (AR) aims to present information that is directly registered to the physical environment. AR goes beyond mobile computing in that it bridges the gap between the virtual world and the real world, both spatially and cognitively. With AR, digital information appears to become part of the real world, at least in the user's perception.

Achieving this connection is an ambitious goal, one that draws on knowledge from many areas of computer science, but it can also lead to misconceptions about what AR really is.

For example, many people associate the visual combination of virtual and real elements with the special effects in films like Jurassic Park and Avatar. Although the computer graphics techniques used in film can also be applied to AR, film lacks one important aspect of AR: interactivity. So what is AR?

The most widely accepted definition of AR was proposed by Azuma in his 1997 survey. According to Azuma, AR must have the following three characteristics:

- Combines real and virtual

- Interactive in real time

- Registered in 3D

Note what this definition leaves open: it does not require a specific output device, such as a head-mounted display (HMD), nor does it limit AR to visual media. Audio, haptics, and even olfactory or gustatory AR fall within its scope, although they may be difficult to realize.

Note that the definition does require real-time control and spatial registration, meaning precise real-time alignment of the virtual information with its real counterpart. This requirement implies that the user of an AR display can at least exercise some kind of interactive viewpoint control, and that the computer-generated augmentations in the display remain registered to the objects they refer to in the environment.

Although opinions on what qualifies as real-time performance may vary with the individual and with the task or application, interactivity implies that the human-computer interface operates in a tightly coupled feedback loop. The user continuously navigates the AR scene and controls the AR experience. The system, in turn, picks up the user's input by tracking the user's viewpoint or pose. It registers the pose in the real world with the virtual content, and then presents the user with a situated visualization (a visualization that is registered to objects in the real world).

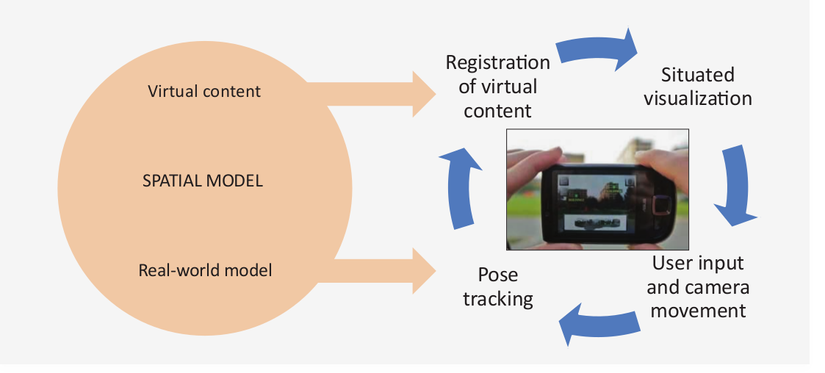

We can see that a complete AR system needs at least three components:

- Tracking component

- Registration component

- Visualization component

A fourth component is a spatial model (i.e., a database) that stores information about the real world and about the virtual world. The real-world model is required as a reference for the tracking component, which must determine the user's location in the real world. The virtual-world model contains the content used for the augmentation. Both parts of the spatial model must be registered in the same coordinate system.
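To make the shared coordinate system concrete, here is a minimal sketch of how a point from the virtual-world model could be placed on screen once the tracker has reported the camera pose. Everything in it is an assumption for illustration and is not taken from any particular AR library: the function names `world_to_camera` and `project`, the pose reduced to a position plus a single yaw angle, and the pinhole intrinsics (`focal`, `cx`, `cy`, with image y pointing down).

```python
import math

def world_to_camera(point, cam_pos, yaw):
    """Express a world-space point in the camera's coordinate frame.

    Assumption: the camera sits at cam_pos and is rotated by `yaw`
    radians about the vertical (y) axis; with yaw == 0 it looks along +z.
    """
    dx = point[0] - cam_pos[0]
    dy = point[1] - cam_pos[1]
    dz = point[2] - cam_pos[2]
    c, s = math.cos(yaw), math.sin(yaw)
    # Apply the inverse (transpose) of the camera's rotation about y.
    x = c * dx - s * dz
    z = s * dx + c * dz
    return (x, dy, z)

def project(cam_point, focal=500.0, cx=320.0, cy=240.0):
    """Pinhole projection of a camera-space point to pixel coordinates.

    Returns None when the point is behind the camera (z <= 0).
    """
    x, y, z = cam_point
    if z <= 0:
        return None
    return (focal * x / z + cx, focal * y / z + cy)

# A virtual label anchored 2 m in front of the camera and 1 m to the side.
label_world = (1.0, 0.0, 2.0)
pixel = project(world_to_camera(label_world, (0.0, 0.0, 0.0), 0.0))
print(pixel)  # (570.0, 240.0)
```

Because the label and the camera pose are expressed in the same world frame, the label's pixel position follows automatically when the tracked pose changes. A real system would use a full 3D rotation and calibrated camera intrinsics rather than the fixed values assumed here.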

AR uses a feedback loop between the user and the computer system. The user observes the AR display and controls the viewpoint. The system tracks the user's viewpoint, registers the pose in the real world with the virtual content, and presents situated visualizations.
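The feedback loop and its three components can be sketched in a few lines. This is a toy model under loud assumptions: poses are plain 2D positions, the "sensor" is a list of pre-recorded samples, and the names `Pose`, `track`, `register`, `visualize`, and `run_loop` are hypothetical, chosen only to mirror the tracking, registration, and visualization components described above.

```python
from dataclasses import dataclass

@dataclass
class Pose:
    x: float
    y: float

def track(sensor_sample):
    """Tracking: estimate the user's pose from a sensor reading.

    Assumption: the sample already is an (x, y) position in world space.
    """
    return Pose(*sensor_sample)

def register(pose, virtual_items):
    """Registration: express world-anchored items relative to the viewer."""
    return {
        name: (wx - pose.x, wy - pose.y)
        for name, (wx, wy) in virtual_items.items()
    }

def visualize(registered):
    """Visualization: turn registered items into something displayable."""
    return [
        f"{name} at ({dx:+.1f}, {dy:+.1f})"
        for name, (dx, dy) in sorted(registered.items())
    ]

def run_loop(samples, virtual_items):
    """One pass of track -> register -> visualize per sensor sample."""
    frames = []
    for sample in samples:
        pose = track(sample)
        frames.append(visualize(register(pose, virtual_items)))
    return frames

# The user walks one unit toward a label anchored in the world.
for frame in run_loop([(0.0, 0.0), (1.0, 0.0)], {"label": (3.0, 2.0)}):
    print(frame)
```

As the user moves, the label stays fixed in the world while its position relative to the viewer changes, which is the behavior that registration is meant to guarantee.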

A Brief History of Augmented Reality

Although one can easily go further back in time to find examples of information being overlaid on the physical world, suffice it to say that the first annotations of the physical world with computer-generated information appeared in the 1960s. Ivan Sutherland can be credited with starting the field that would eventually turn into both VR and AR. In 1965, he described the ultimate display in an essay containing the following famous quote:

The ultimate display would, of course, be a room within which the computer can control the existence of matter. A chair displayed in such a room would be good enough to sit in. Handcuffs displayed in such a room would be confining, and a bullet displayed in such a room would be fatal. With appropriate programming such a display could literally be the Wonderland into which Alice walked.

However, Sutherland's 1965 essay contains more than an early description of immersive displays. It also includes a less frequently cited passage that clearly anticipates AR:

The user of one of today's visual displays can easily make solid objects transparent - he can "see through matter!"

The "Sword of Damocles" was the nickname of the world's first head-mounted display, built in 1968. Image courtesy of Ivan Sutherland.

It took the advances in computing performance of the 1980s and early 1990s for AR to finally emerge as an independent field of research. During the 1970s and 1980s, Myron Krueger, Dan Sandin, Scott Fisher, and others experimented with many concepts for mixing human interaction with computer-generated overlays on video to create interactive art experiences. Krueger's Videoplace installations, around 1974, showed graphical annotations shared among the participants.

The year 1992 marked the birth of the term "augmented reality". It first appeared in the work of Caudell and Mizell at Boeing, who were looking for a way to assist workers in an aircraft factory by displaying wiring diagrams in a see-through HMD.

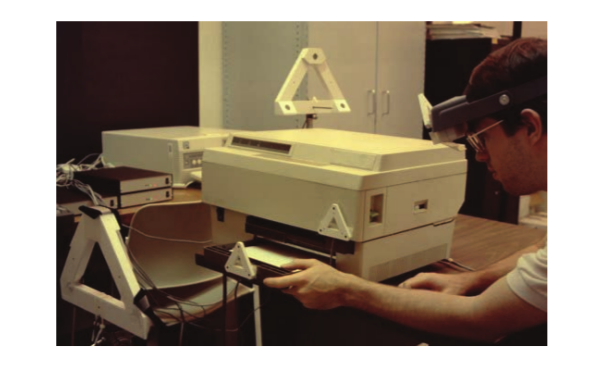

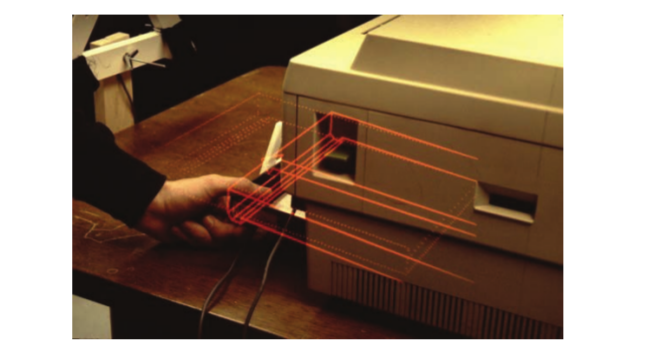

Following that, in 1993, Feiner et al. introduced KARMA, a knowledge-based AR system. This system could automatically infer appropriate instruction sequences for repair and maintenance procedures.

Also in 1993, Fitzmaurice created the first handheld spatially aware display, which served as a precursor to handheld AR. The Chameleon consisted of a tethered handheld liquid crystal display (LCD). The screen showed the video output of an SGI graphics workstation of the time and was spatially tracked with a magnetic tracking device. The system could display contextual information as the user moved the device around, for example, providing detailed information about a location on a wall map.

In 1994, State et al. at the University of North Carolina at Chapel Hill presented a compelling medical AR application that let a doctor observe the fetus directly inside a pregnant patient. Although accurate registration of computer graphics on a deformable object such as the human body remains a challenge today, this seminal work hints at the power of AR for medicine and other delicate tasks.

In the mid-1990s, Steve Mann at the MIT Media Lab built and experimented with a "reality mediator": a waist-bag computer with an HMD (a modified VR4 by Virtual Research Systems) that allowed the user to augment, alter, or diminish visual reality. Through the WearCam project, Mann (1997) explored wearable computing and mediated reality. His work eventually helped establish the academic field of wearable computing, which, in its early days, had a lot of synergy with AR (Starner et al. 1997).

In 1995, Rekimoto and Nagao created the first true handheld AR display, albeit a tethered one. Their NaviCam was connected to a workstation, but was equipped with a forward-facing camera. From the video feed, it could detect color-coded markers in the camera image and display information overlaid on the video view.

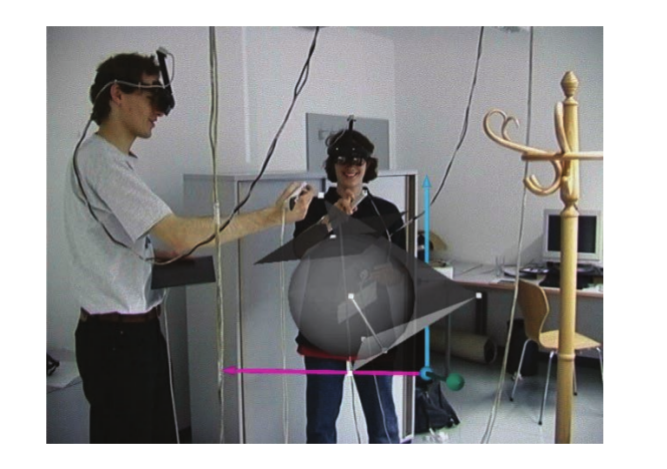

In 1996, Schmalstieg et al. developed Studierstube, the first collaborative AR system. With this system, multiple users could experience virtual objects in the same shared space. Each user had a tracked HMD and could see perspectively correct stereoscopic images from an individual viewpoint. Unlike in multi-user VR, natural communication cues, such as voice, body posture, and gestures, were not affected in Studierstube, because the virtual content was added to an ordinary collaborative situation in a minimally obtrusive way. One of the showcase applications was a geometry course (Kaufmann and Schmalstieg 2003), which was tested successfully in education.

From 1997 to 2001, the Japanese government and Canon Inc. jointly funded the Mixed Reality Systems Laboratory as a temporary research company. This joint venture was the largest research facility dedicated to mixed reality (MR) up to that time (Tamura 2000). One of its most remarkable achievements was the design of the first coaxial stereo video see-through HMD, the COASTAR. Many of the laboratory's activities were also aimed at the digital entertainment market, which plays a very prominent role in Japan.

In 1997, Feiner et al. developed the first outdoor AR system, the Touring Machine, at Columbia University. The Touring Machine used a see-through HMD with GPS and orientation tracking. Delivering mobile 3D graphics through this system required a backpack holding a computer, various sensors, and an early tablet computer for input.

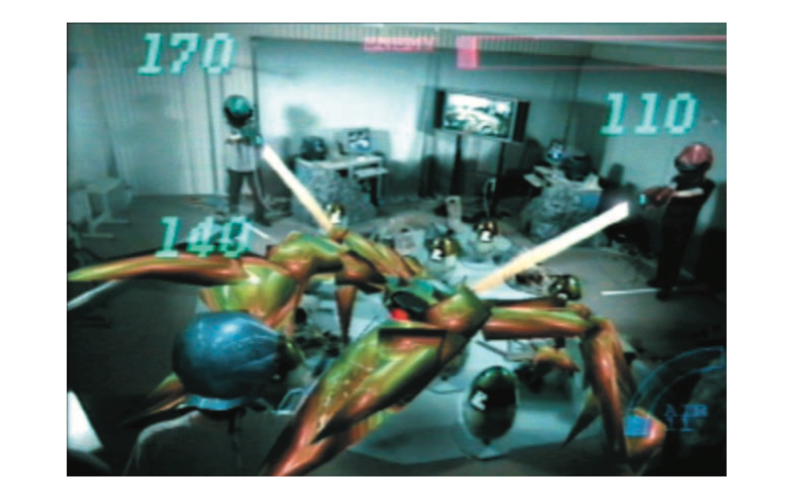

Only one year later, in 1998, Thomas et al. published their work on building an outdoor AR navigation system, Map-in-the-Hat. This platform was used for advanced applications, such as 3D surveying, but it is most famous for delivering the first outdoor AR game, ARQuake. This game, a port of the famous first-person shooter Quake to Tinmith (you can look it up, although I do not know whether it can still be played today), puts the user in the middle of a zombie attack in a real parking lot.

In the same year, Raskar et al. at the University of North Carolina at Chapel Hill presented The Office of the Future, a telepresence system built around the idea of structured-light scanning combined with projectors. Although the required hardware was not really practical for everyday use at the time, related technologies, such as depth sensors and coupled projector-camera setups, play a prominent role in AR and other fields today.

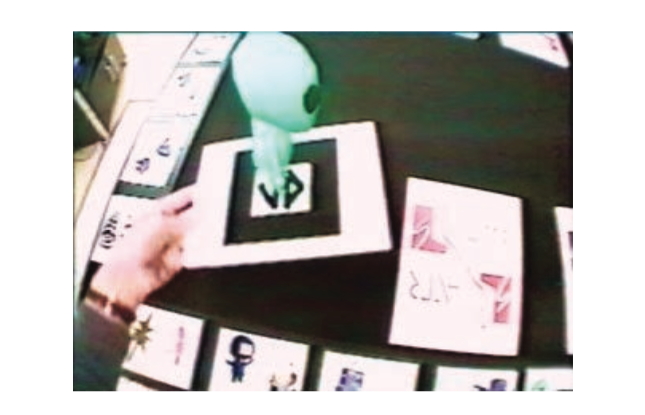

Until 1999, no AR software was available outside specialized research laboratories. This situation changed when Kato and Billinghurst released ARToolKit, the first open source software platform for AR. It featured a 3D tracking library that used black-and-white fiducial markers, which could easily be produced on a laser printer. The clever software design, combined with the growing availability of webcams, made ARToolKit widely popular.

In the same year, the German Federal Ministry of Education and Research initiated a 21 million euro program for industrial AR, called ARVIKA (Augmented Reality for Development, Production, and Servicing). More than 20 research groups from industry and academia worked on developing advanced AR systems for industrial applications, especially in the German automotive industry. This program raised awareness of AR in professional communities worldwide and was followed by a number of similar programs designed to promote the industrial application of the technology.

Another noteworthy idea also appeared at the end of 1999: IBM researcher Spohrer published an essay on Worldboard, an extensible infrastructure for linked, spatially registered information, which Spohrer had first proposed while working at Apple. This work can be considered the first concept for an AR browser.

From 2000 onward, mobile phones and mobile computing developed rapidly, and AR developed along with them. Combining AR with mobile devices produced work such as the handheld multi-player AR game by Pintaric et al. (2005), which was experienced by thousands of visitors at SIGGRAPH.

It took until 2008 for the first truly usable natural feature tracking system for smartphones to be introduced (Wagner et al. 2008b). This work became the ancestor of the well-known Vuforia toolkit for AR developers. Other notable achievements of recent years in the field of tracking include the parallel tracking and mapping (PTAM) system by Klein and Murray (2007), which can track in unknown environments without preparation, and the KinectFusion system developed by Newcombe et al. (2011), which builds detailed 3D models from an inexpensive depth sensor.

Today, AR developers can choose among many software platforms, but these model systems continue to represent important directions for researchers. Pokemon GO, a game that was hugely popular a few years ago, was also developed on this kind of model.

Source : viblo.asia