Rails is a great framework that helps individuals and businesses build their products in a very short amount of time. Rails is used as a backend for many web projects or as an API server for mobile applications of startups. Myself also like the rails’ syntax and speed dev project.

However, the question is, what is Rails processing speed when dealing with large amounts of data? You’ve probably read the story “How We Went from 30 Servers to 2: Go”, if not then try reading it here . Basically the story is a company building a backend system with Rails to run the products they make for customers and use 30 servers to maintain it. As the number of customers increases, the amount of data becomes overloaded for the system and the company is forced to switch from Rails to Go, and as a result they succeed, but especially they only need to use 2 servers to Keep the system down.

Does Rails have a bottleneck when we try to process huge amounts of data? Probably not, if we use the following tips.

1. Do not use ActiveRecord if possible

ActiveRecord makes everything very easy, but it was not created for raw data. When you want to use a series of simple processes into millions of records, you should use plain SQL. If you feel you need an ORM tool to make it easier to work, try SEQUEL.

2. Use update_all to update all records

The following is a common mistake made by people who want to duplicate the entire table and update each element individually:

1 2 3 4 5 | <span class="token constant">User</span> <span class="token punctuation">.</span> <span class="token function">where</span> <span class="token punctuation">(</span> city <span class="token punctuation">:</span> “ <span class="token constant">Houston</span> ” <span class="token punctuation">)</span> <span class="token punctuation">.</span> <span class="token keyword">each</span> <span class="token keyword">do</span> <span class="token operator">|</span> user <span class="token operator">|</span> user <span class="token punctuation">.</span> note <span class="token operator">=</span> “ <span class="token constant">Houstonian</span> ” user <span class="token punctuation">.</span> save <span class="token keyword">end</span> |

The code is quite easy to understand but there are fatal drawbacks. If there are 100,000 users with city city “Houston”, the code will run for 24 hours. It’s been a while, huh? There is a much faster and more effective solution:

1 2 | <span class="token constant">User</span> <span class="token punctuation">.</span> <span class="token function">update_all</span> <span class="token punctuation">(</span> <span class="token punctuation">{</span> note <span class="token punctuation">:</span> “ <span class="token constant">Houstonian</span> ” <span class="token punctuation">}</span> <span class="token punctuation">,</span> <span class="token punctuation">{</span> city <span class="token punctuation">:</span> “ <span class="token constant">Houston</span> ” <span class="token punctuation">}</span> <span class="token punctuation">)</span> |

And this code runs within 30 seconds with the same amount of data as the above.

3. Get only data from the columns you need

The code User.where(city: “Houston”) will get all information from the users in the database. If you do not need to use additional information such as age, gender, marital status, … then you should not take all that information in the first place. Use select_column when you want to retrieve data from several columns:

1 2 | <span class="token constant">User</span> <span class="token punctuation">.</span> <span class="token function">select</span> <span class="token punctuation">(</span> “city” <span class="token punctuation">,</span> “state” <span class="token punctuation">)</span> <span class="token punctuation">.</span> <span class="token function">where</span> <span class="token punctuation">(</span> age <span class="token punctuation">:</span> <span class="token number">29</span> <span class="token punctuation">)</span> |

4. Replace the Model.all.each command with find_in_batches

For small systems, changes like this are not very important. But with a system with 100000 records, the command above can easily occupy 5 GB or more of memory. The server will easily crash. So I think find_in_batches should be used to solve this problem:

1 2 3 4 5 6 | <span class="token constant">User</span> <span class="token punctuation">.</span> <span class="token function">find_in_batches</span> <span class="token punctuation">(</span> conditions <span class="token punctuation">:</span> ‘grade <span class="token operator">=</span> <span class="token number">2</span> ' <span class="token punctuation">,</span> batch_size <span class="token punctuation">:</span> <span class="token number">500</span> <span class="token punctuation">)</span> <span class="token keyword">do</span> <span class="token operator">|</span> students <span class="token operator">|</span> students <span class="token punctuation">.</span> <span class="token keyword">each</span> <span class="token keyword">do</span> <span class="token operator">|</span> student <span class="token operator">|</span> student <span class="token punctuation">.</span> <span class="token function">find_or_create_by_class_name</span> <span class="token punctuation">(</span> ‘ <span class="token constant">PE</span> ’ <span class="token punctuation">)</span> <span class="token keyword">end</span> <span class="token keyword">end</span> |

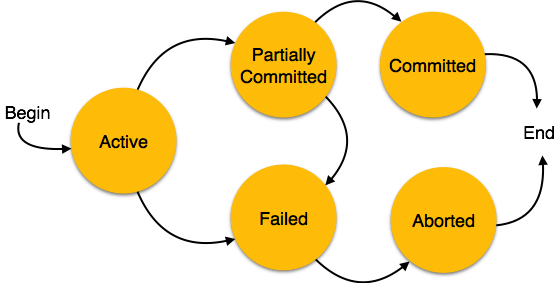

5. Don’t use transactions too much

1 2 3 | <span class="token punctuation">(</span> <span class="token number">0.2</span> ms <span class="token punctuation">)</span> <span class="token keyword">BEGIN</span> <span class="token punctuation">(</span> <span class="token number">0.4</span> ms <span class="token punctuation">)</span> <span class="token constant">COMMIT</span> |

Transaction is run every time the object is saved. It will run millions of times during system run. Even if we use find_in_batches, the only way to effectively restrict transactions is to group the processes. The code in Part 4 can still be optimized as follows:

1 2 3 4 5 6 7 8 | <span class="token constant">User</span> <span class="token punctuation">.</span> <span class="token function">find_in_batches</span> <span class="token punctuation">(</span> conditions <span class="token punctuation">:</span> ‘grade <span class="token operator">=</span> <span class="token number">2</span> ' <span class="token punctuation">,</span> batch_size <span class="token punctuation">:</span> <span class="token number">500</span> <span class="token punctuation">)</span> <span class="token keyword">do</span> <span class="token operator">|</span> students <span class="token operator">|</span> <span class="token constant">User</span> <span class="token punctuation">.</span> transaction <span class="token keyword">do</span> students <span class="token punctuation">.</span> <span class="token keyword">each</span> <span class="token keyword">do</span> <span class="token operator">|</span> student <span class="token operator">|</span> student <span class="token punctuation">.</span> <span class="token function">find_or_create_by_class_name</span> <span class="token punctuation">(</span> ‘ <span class="token constant">PE</span> ’ <span class="token punctuation">)</span> <span class="token keyword">end</span> <span class="token keyword">end</span> <span class="token keyword">end</span> |

This way, instead of having to commit every single record, now just commit after every 500 records, much more efficiently.

6. Don’t forget to type the index

Always index the most important columns or column groups you query the most. Otherwise, your command will take a lifetime to run.

7. Destroy occupies a lot of resources

Destroy in ActiveRecord is a very heavy process. Make sure you know what you are doing. One thing you must know is that: although destroy and delete both delete records, destroy will run all callback functions, which is very time consuming. Similar to destroy_all and delete_all . So, if you just want to delete records without touching anything else, you should only use delete_all . In other case is if you want to delete an entire table. For example, if you want to delete all users, you can use TRUNCATE :

1 2 | <span class="token constant">ActiveRecord</span> <span class="token punctuation">:</span> <span class="token punctuation">:</span> <span class="token constant">Base</span> <span class="token punctuation">.</span> connection <span class="token punctuation">.</span> <span class="token function">execute</span> <span class="token punctuation">(</span> “ <span class="token constant">TRUNCATE</span> <span class="token constant">TABLE</span> users” <span class="token punctuation">)</span> |

Anyway, delete at the database level is still very time consuming. This is why sometimes we should use “soft delete” or “soft delete”, just change the “deleted = 1” field of the record you want to delete.

8. It is not necessary to run the command immediately

Use “Background job”. Resque and Sidekiq are always there for you, use them to execute implicit orders and set order execution schedule, everything will be easier

In short, if you have a large amount of data, do your best to optimize system performance. Although very convenient, we have to admit that ActiveRecord slows down the system a bit. However, through the above tips, you can still keep the other strengths of Rails without wasting too much performance. Enjoy Rails as much as possible!

Reference: https://chaione.com/blog/dealing-massive-data-rails/