In the process of software development, we must have done many times adding or updating data but almost with only a relatively small amount of data changes, this process does not take much time.

I was really wrong to say that, please pay a little attention, usually in rails inserting row-by-row data, meaning that every time you create or change a record of a record, it will create 1 corresponding query. Imagine you want to import a few million copies, how many queries and time will it be?

To solve that problem in ruby is not difficult, in the process of finding out, I was known to gem activerecord-import . Wait, before that, did you know which way to import data?

1. Create a record by creating one by one (row by row)

1 2 3 4 5 6 | users = [] 10.times do |i| users << User.new(name: "user #{i}") end User.import users |

The example above is effective for a small amount of data, as mentioned above, the problem to be solved here is to import a few million records into your database. In the usual way, you enter rows one by one in the csv file and then insert them into your database via the seed.rb file.

1 2 3 4 5 | <span class="token comment"># seeds.rb</span> <span class="token constant">CSV</span> <span class="token punctuation">.</span> <span class="token function">foreach</span> <span class="token punctuation">(</span> <span class="token string">'products.csv'</span> <span class="token punctuation">,</span> headers <span class="token punctuation">:</span> <span class="token keyword">true</span> <span class="token punctuation">)</span> <span class="token keyword">do</span> <span class="token operator">|</span> row <span class="token operator">|</span> <span class="token constant">Product</span> <span class="token punctuation">.</span> <span class="token function">create</span> <span class="token punctuation">(</span> product_name <span class="token punctuation">:</span> row <span class="token punctuation">[</span> <span class="token string">'Product Name'</span> <span class="token punctuation">]</span> <span class="token punctuation">)</span> <span class="token keyword">end</span> |

It seems the above way, but the import performance is too slow, you can not sit all day to import only a few million data.

2. Using SQL INSERT statements (code that is hard to read and unsafe)

1 2 3 4 5 6 7 8 9 10 11 12 13 | <span class="token comment"># Gán giá trị cho users bằng một mảng gồm các user hash</span> <span class="token comment"># like [{ name: "Sam" }, { name: "Charls" }]</span> sql <span class="token operator">=</span> <span class="token string">"INSERT INTO users VALUES "</span> sql_values <span class="token operator">=</span> <span class="token punctuation">[</span> <span class="token punctuation">]</span> users <span class="token punctuation">.</span> <span class="token keyword">each</span> <span class="token keyword">do</span> <span class="token operator">|</span> user <span class="token operator">|</span> sql_values <span class="token operator"><</span> <span class="token operator"><</span> <span class="token string">"( <span class="token interpolation"><span class="token delimiter tag">#{</span> user <span class="token punctuation">.</span> values <span class="token punctuation">.</span> <span class="token function">join</span> <span class="token punctuation">(</span> <span class="token string">", "</span> <span class="token punctuation">)</span> <span class="token delimiter tag">}</span></span> )"</span> <span class="token keyword">end</span> sql <span class="token operator">+</span> <span class="token operator">=</span> sql_values <span class="token punctuation">.</span> <span class="token function">join</span> <span class="token punctuation">(</span> <span class="token string">", "</span> <span class="token punctuation">)</span> <span class="token constant">ActiveRecord</span> <span class="token punctuation">:</span> <span class="token punctuation">:</span> <span class="token constant">Base</span> <span class="token punctuation">.</span> connection <span class="token punctuation">.</span> <span class="token function">insert_sql</span> <span class="token punctuation">(</span> sql <span class="token punctuation">)</span> |

3. Using activerecord-import gem (faster)

1 2 3 4 5 6 | users <span class="token operator">=</span> <span class="token punctuation">[</span> <span class="token punctuation">]</span> <span class="token number">10.</span> times <span class="token keyword">do</span> <span class="token operator">|</span> i <span class="token operator">|</span> users <span class="token operator"><</span> <span class="token operator"><</span> <span class="token constant">User</span> <span class="token punctuation">.</span> <span class="token keyword">new</span> <span class="token punctuation">(</span> name <span class="token punctuation">:</span> <span class="token string">"user <span class="token interpolation"><span class="token delimiter tag">#{</span> i <span class="token delimiter tag">}</span></span> "</span> <span class="token punctuation">)</span> <span class="token keyword">end</span> <span class="token constant">User</span> <span class="token punctuation">.</span> import users |

ActiveRecord-import is a ruby gem written by Mr. Zach Dennis. It is much faster than the usual insert row by row and is also very easy to implement.

To be able to use it you need to import the gem "activerecord-import" into Gemfile and remember to type bundle install . The mechanism of this gem’s operation is to reduce the number of your queries into a single query. Instead of having millions of queries, now there is only 1 query.

In addition, you can also apply to insert a new column, add all new data in the table, update the entire data type, … Here is the example code of large data update from csv file:

In addition, you can also apply to insert a new column, add all new data in the table, update the entire data type, … Here is the example code of large data update from csv file:

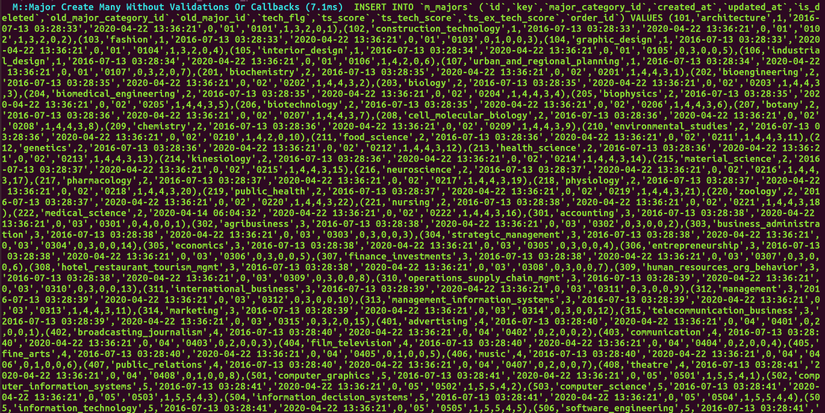

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 | <span class="token keyword">def</span> update <span class="token keyword">return</span> <span class="token keyword">if</span> is_empty_db <span class="token operator">?</span> file_path <span class="token operator">=</span> <span class="token string">"product.csv"</span> instances <span class="token operator">=</span> <span class="token punctuation">[</span> <span class="token punctuation">]</span> update_keys <span class="token operator">=</span> <span class="token punctuation">[</span> <span class="token punctuation">]</span> <span class="token constant">CSV</span> <span class="token punctuation">.</span> <span class="token function">foreach</span> <span class="token punctuation">(</span> file_path <span class="token punctuation">,</span> headers <span class="token punctuation">:</span> <span class="token keyword">true</span> <span class="token punctuation">)</span> <span class="token keyword">do</span> <span class="token operator">|</span> row <span class="token operator">|</span> object <span class="token operator">=</span> <span class="token constant">Product</span> <span class="token punctuation">.</span> find_by id <span class="token punctuation">:</span> row <span class="token punctuation">[</span> <span class="token string">"id"</span> <span class="token punctuation">]</span> update_keys <span class="token operator">=</span> row <span class="token punctuation">.</span> to_h <span class="token punctuation">.</span> <span class="token function">except</span> <span class="token punctuation">(</span> <span class="token string">"id"</span> <span class="token punctuation">)</span> <span class="token punctuation">.</span> keys <span class="token keyword">if</span> update_keys <span class="token punctuation">.</span> blank <span class="token operator">?</span> <span class="token keyword">next</span> <span class="token keyword">unless</span> object object <span class="token punctuation">.</span> assign_attributes row <span class="token punctuation">.</span> to_h instances <span class="token operator"><</span> <span class="token operator"><</span> object <span class="token keyword">if</span> object <span class="token keyword">end</span> <span class="token constant">Product</span> <span class="token punctuation">.</span> import instances <span class="token punctuation">,</span> on_duplicate_key_update <span class="token punctuation">:</span> update_keys <span class="token punctuation">,</span> validate <span class="token punctuation">:</span> <span class="token keyword">false</span> <span class="token keyword">end</span> |

By using activerecord-import, when importing about 500,000 records from over 1 hour to less than 3 minutes, it’s too fast, isn’t it?

Understand more about manipulating databases

At this point, I am quite happy to import large data, but do you understand why many small queries are much slower than one large query?

I simply understand that with a query, the time to call it is quite time consuming, and the implementation is relatively fast.

Yes, when ActiveRecord performs the operation of insert row by row, it will access the database with an insert statement, and of course running sql for one insert does not take much time but the number of hits enter csdl to open a transaction, then complete it, it will take a lot of time. This leads to frequent hits on the database that have no effect on perfomance.

4. Bulk insert with rails 6

If you are using rails 6 then congratulations, the problem of inserting large amounts of data has been supported already.

Starting with rails 6, I have added insert_all , insert_all! and upsert_all into ActiveRecord :: Persistence

insert_all

Using insert_all makes it possible to perform bulk inserts like the example below

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 | result <span class="token operator">=</span> <span class="token constant">User</span> <span class="token punctuation">.</span> <span class="token function">insert_all</span> <span class="token punctuation">(</span> <span class="token punctuation">[</span> <span class="token punctuation">{</span> name <span class="token punctuation">:</span> <span class="token string">"Sam"</span> <span class="token punctuation">,</span> email <span class="token punctuation">:</span> <span class="token string">" <a class="__cf_email__" href="/cdn-cgi/l/email-protection">[email protected]</a> "</span> <span class="token punctuation">}</span> <span class="token punctuation">,</span> <span class="token punctuation">{</span> name <span class="token punctuation">:</span> <span class="token string">"Sum"</span> <span class="token punctuation">,</span> email <span class="token punctuation">:</span> <span class="token string">" <a class="__cf_email__" href="/cdn-cgi/l/email-protection">[email protected]</a> "</span> <span class="token punctuation">}</span> <span class="token punctuation">]</span> <span class="token punctuation">)</span> <span class="token comment"># Bulk Insert (2.3ms) INSERT INTO "users"("name","email")</span> <span class="token comment"># VALUES("Sam", " <a class="__cf_email__" href="/cdn-cgi/l/email-protection">[email protected]</a> "...)</span> <span class="token comment"># ON CONFLICT DO NOTHING RETURNING "id"</span> puts result <span class="token punctuation">.</span> inspect <span class="token comment">#<ActiveRecord::Result:0x00007fb6612a1ad8 @columns=["id"], @rows=[[1], [2]],</span> <span class="token variable">@hash_rows</span> <span class="token operator">=</span> <span class="token keyword">nil</span> <span class="token punctuation">,</span> <span class="token variable">@column_types</span> <span class="token operator">=</span> <span class="token punctuation">{</span> <span class="token string">"id"</span> <span class="token operator">=</span> <span class="token operator">></span> <span class="token comment">#<ActiveModel::Type::Integer:0x00007fb65f420078 ....></span> puts <span class="token constant">User</span> <span class="token punctuation">.</span> count <span class="token operator">=</span> <span class="token operator">></span> <span class="token number">2</span> |

As mentioned above, notice ON CONFLICT DO NOTHING RETURNING "id" in the query. This is supported by SQLite and PostgreQuery databases. If there is a conflict or unique key violation during bulk insertion, it will skip the conflict record and proceed to insert the next record.

insert_all!

If we need to make sure all rows are inserted, we can use insert_all!

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 | result <span class="token operator">=</span> <span class="token constant">User</span> <span class="token punctuation">.</span> <span class="token function">insert_all</span> <span class="token punctuation">(</span> <span class="token punctuation">[</span> <span class="token punctuation">{</span> name <span class="token punctuation">:</span> <span class="token string">"Sam"</span> <span class="token punctuation">,</span> email <span class="token punctuation">:</span> <span class="token string">" <a class="__cf_email__" href="/cdn-cgi/l/email-protection">[email protected]</a> "</span> <span class="token punctuation">}</span> <span class="token punctuation">,</span> <span class="token punctuation">{</span> name <span class="token punctuation">:</span> <span class="token string">"Sum"</span> <span class="token punctuation">,</span> email <span class="token punctuation">:</span> <span class="token string">" <a class="__cf_email__" href="/cdn-cgi/l/email-protection">[email protected]</a> "</span> <span class="token punctuation">}</span> <span class="token punctuation">]</span> <span class="token punctuation">,</span> returning <span class="token punctuation">:</span> <span class="token string">%w[id name]</span> <span class="token punctuation">)</span> <span class="token comment"># Bulk Insert (2.3ms) INSERT INTO "users"("name","email")</span> <span class="token comment"># VALUES("Sam", " <a class="__cf_email__" href="/cdn-cgi/l/email-protection">[email protected]</a> "...)</span> <span class="token comment"># ON CONFLICT DO NOTHING RETURNING "id", "name"</span> puts result <span class="token punctuation">.</span> inspect <span class="token comment">#<ActiveRecord::Result:0x00007fb6612a1ad8 @columns=["id", "name"],</span> <span class="token variable">@rows</span> <span class="token operator">=</span> <span class="token punctuation">[</span> <span class="token punctuation">[</span> <span class="token number">1</span> <span class="token punctuation">,</span> <span class="token string">"Sam"</span> <span class="token punctuation">]</span> <span class="token punctuation">,</span> <span class="token punctuation">[</span> <span class="token number">2</span> <span class="token punctuation">,</span> <span class="token string">"Sum"</span> <span class="token punctuation">]</span> <span class="token punctuation">]</span> <span class="token punctuation">,</span> <span class="token variable">@hash_rows</span> <span class="token operator">=</span> <span class="token keyword">nil</span> <span class="token punctuation">,</span> <span class="token variable">@column_types</span> <span class="token operator">=</span> <span class="token punctuation">{</span> <span class="token string">"id"</span> <span class="token operator">=</span> <span class="token operator">></span> <span class="token comment">#<ActiveModel::Type::Integer:0x00007fb65f420078 ....></span> |

upsert_all

If a record exists, and we want to update it or otherwise create a new record, this is called upert.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 | result <span class="token operator">=</span> <span class="token constant">User</span> <span class="token punctuation">.</span> <span class="token function">upsert_all</span> <span class="token punctuation">(</span> <span class="token punctuation">[</span> <span class="token punctuation">{</span> id <span class="token punctuation">:</span> <span class="token number">1</span> <span class="token punctuation">,</span> name <span class="token punctuation">:</span> <span class="token string">"Sam new"</span> <span class="token punctuation">,</span> email <span class="token punctuation">:</span> <span class="token string">" <a class="__cf_email__" href="/cdn-cgi/l/email-protection">[email protected]</a> "</span> <span class="token punctuation">}</span> <span class="token punctuation">,</span> <span class="token punctuation">{</span> id <span class="token punctuation">:</span> <span class="token number">1</span> <span class="token punctuation">,</span> <span class="token comment"># trùng id</span> name <span class="token punctuation">:</span> <span class="token string">"Sam's new"</span> <span class="token punctuation">,</span> email <span class="token punctuation">:</span> <span class="token string">" <a class="__cf_email__" href="/cdn-cgi/l/email-protection">[email protected]</a> "</span> <span class="token punctuation">}</span> <span class="token punctuation">,</span> <span class="token punctuation">{</span> id <span class="token punctuation">:</span> <span class="token number">2</span> <span class="token punctuation">,</span> name <span class="token punctuation">:</span> <span class="token string">"Charles"</span> <span class="token punctuation">,</span> <span class="token comment"># cập nhật tên</span> email <span class="token punctuation">:</span> <span class="token string">" <a class="__cf_email__" href="/cdn-cgi/l/email-protection">[email protected]</a> "</span> <span class="token punctuation">}</span> <span class="token punctuation">,</span> <span class="token punctuation">{</span> id <span class="token punctuation">:</span> <span class="token number">3</span> <span class="token punctuation">,</span> <span class="token comment"># tạo mới một bản ghi chưa có</span> name <span class="token punctuation">:</span> <span class="token string">"David"</span> <span class="token punctuation">,</span> email <span class="token punctuation">:</span> <span class="token string">" <a class="__cf_email__" href="/cdn-cgi/l/email-protection">[email protected]</a> "</span> <span class="token punctuation">}</span> <span class="token punctuation">]</span> <span class="token punctuation">)</span> <span class="token comment"># Bulk Insert (26.3ms) INSERT INTO `users`(`id`,`name`,`email`)</span> <span class="token comment"># VALUES (1, 'Sam new', ' <a class="__cf_email__" href="/cdn-cgi/l/email-protection">[email protected]</a> ')...</span> <span class="token comment"># ON DUPLICATE KEY UPDATE `name`=VALUES(`name`)</span> puts <span class="token constant">User</span> <span class="token punctuation">.</span> count <span class="token operator">=</span> <span class="token operator">></span> <span class="token number">3</span> |

The second row in the input array has duplicate id = 1 so the user’s name will be Sam's new instead of Sam new .

The third row in the input array is not duplicated, so it only does updates.

The fourth row with id = 3 is not in the database so it will be created here.

Source

https://medium.com/@eric_lum/importing-large-datasets-in-ror-why-you-should-use-activerecord-import-26fc915e6fd0

https://blog.saeloun.com/2019/11/26/rails-6-insert-all.html