General knowledge about Docker and Docker Swarm (Part 2 – Concept, history of Docker)

- Tram Ho

I. Why Docker was created

- In the past, setting up and deploying an application to one or more servers was difficult: you had to install every tool and environment the application needed before you could run it, and the environments on different servers were rarely identical.

- Docker was created to solve this problem.

- Docker allows developers to create separate and independent environments for launching and developing applications, and these environments are called containers. When you need to deploy to any server, just run the Docker container and your application will be launched immediately.

For example:

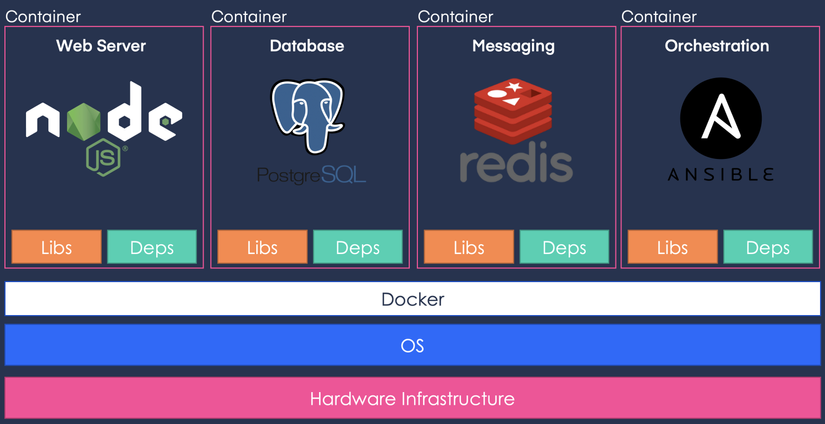

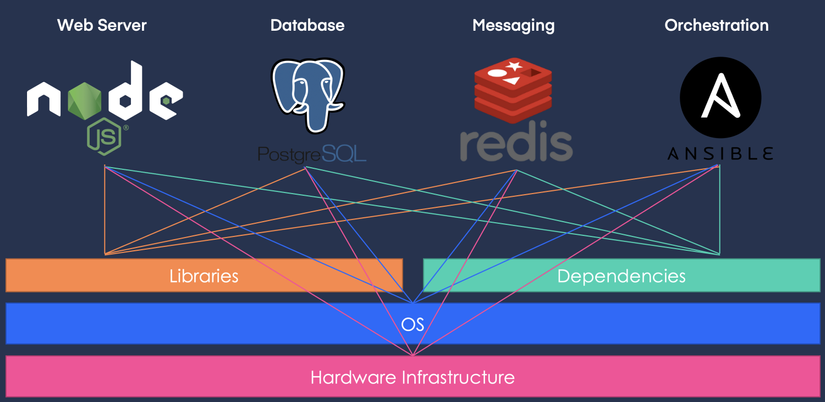

Imagine you are building an application with a web server written in Node.js, a PostgreSQL database, Redis for storing messages, and so on. Each of these requires installing many libraries and dependencies, along with their configuration. Once the code is finished, you have to deploy it so that users can use it.

In the deployment environment, you have to install all of these again, together with their libraries, configuration, and dependencies. If even one library or small dependency is missing, the application can crash and become unusable. Setting all of this up by hand also takes a lot of time.

So the problem is: how can you write code in one place and ship it to users, or to many different environments, while making sure the libraries, configuration, and dependencies are all installed correctly?
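To make the problem concrete, here is a minimal sketch in Python. It only models the situation described above: the package names mirror the example, but the version numbers and the two "machines" are hypothetical and chosen for illustration.

```python
# Hypothetical model: what the application needs vs. what a machine has installed.
# Version numbers are illustrative assumptions, not recommendations.
REQUIRED = {"nodejs": "18", "postgresql": "15", "redis": "7"}


def missing_or_mismatched(required, installed):
    """Return the dependencies that are absent or installed at the wrong version."""
    problems = {}
    for name, version in required.items():
        found = installed.get(name)  # None when the dependency is not installed
        if found != version:
            problems[name] = {"required": version, "installed": found}
    return problems


# The developer's machine has everything; the server has drifted.
dev_machine = {"nodejs": "18", "postgresql": "15", "redis": "7"}
prod_server = {"nodejs": "16", "postgresql": "15"}  # older Node.js, no Redis

if __name__ == "__main__":
    print("dev:", missing_or_mismatched(REQUIRED, dev_machine))
    print("prod:", missing_or_mismatched(REQUIRED, prod_server))
```

On the developer's machine nothing is reported, while the server is flagged for both Node.js and Redis. This kind of mismatch between environments is what has to be checked and repaired by hand on every server.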

This problem will be solved by Docker.

- Tools such as Node.js, PostgreSQL, Redis, and Ansible are complicated to install, and getting the right versions is hard. Docker packages them into containers.

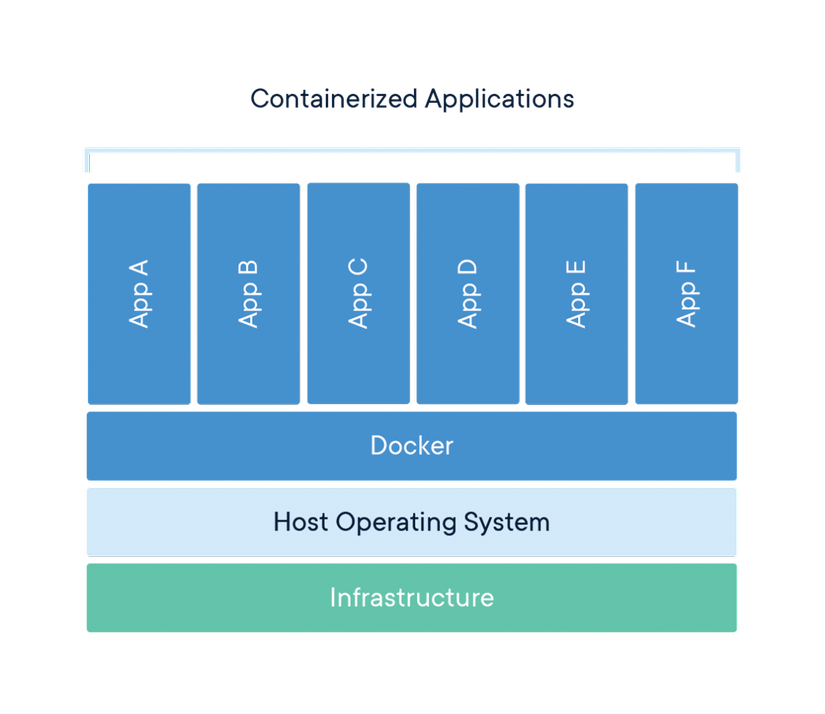

- Docker packages software into standardized units called containers. Containers allow a developer to package an application with all the parts the application needs, such as libraries and other dependencies, and ship it all out as a single package.

- A container works like a shipping container on a truck: the cargo is packed inside, the truck carries it somewhere else, and on arrival the goods are unloaded and used. Docker works the same way, packing each piece of software into a container.

- When you write code, the Node.js installation, libraries, dependencies, and your code are all packed into one container. To run the application, you only need to run this container; it already contains Node.js, the libraries, and the code, so you do not have to install anything yourself.

- Packaged applications can run quickly and efficiently across different computing environments.

- In short, Docker separates and packages software into standardized units called containers for easy management, portability, and sharing. From there, the application can work quickly and efficiently on different computing environments.
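As a rough illustration of "just run the container", the sketch below builds a `docker run` command in Python. The image name `my-node-app:1.0`, the container name `web`, and the port numbers are hypothetical; the flags used (`--rm`, `-d`, `--name`, `-p`) are standard `docker run` options.

```python
import shlex


def docker_run_command(image, name, host_port, container_port):
    """Build the argument list for starting a container in the background."""
    return [
        "docker", "run",
        "--rm",          # remove the container once it exits
        "-d",            # detached: run in the background
        "--name", name,  # a readable name instead of a random one
        "-p", f"{host_port}:{container_port}",  # publish host port -> container port
        image,           # hypothetical image holding Node.js, libraries, and code
    ]


cmd = docker_run_command("my-node-app:1.0", "web", 8080, 3000)
print(shlex.join(cmd))
# prints: docker run --rm -d --name web -p 8080:3000 my-node-app:1.0
```

On a machine with Docker installed and that image available, the list could be passed to `subprocess.run(cmd, check=True)`. No separate installation of Node.js or the libraries is needed, because they travel inside the image.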

Technology used

To achieve this speed and efficiency, Docker uses containerization: containers share the OS kernel of the host server.

A container behaves like a normal application: when it needs resources, it takes them directly from the host machine, just like any other software running on the host.
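One way to observe the shared kernel is to print the kernel release seen by a process. The sketch below uses only Python's standard `platform` module. Assuming a Linux host, running it on the host and again inside a container should report the same kernel release, because the container does not boot a kernel of its own. With Docker Desktop on macOS or Windows, the container reports the kernel of the Linux virtual machine that Docker runs in.

```python
import platform


def kernel_info():
    """Return the OS name and kernel release as seen by this process."""
    return {
        "system": platform.system(),           # e.g. "Linux"
        "kernel_release": platform.release(),  # kernel version string
    }


if __name__ == "__main__":
    print(kernel_info())
```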

II. History

To understand the benefits of containerization, let us go back to how applications were deployed before it existed.

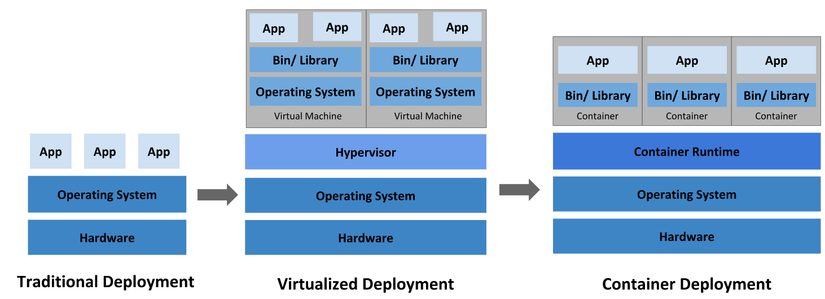

1. Traditional deployment era

- Initially, applications ran on physical servers. There was no way to define resource boundaries for applications on a physical server **=> causing resource allocation problems.**

- For example, if multiple applications run on the same physical server, there may be situations where one application will take up more resources and other applications will perform worse as a result.

- One solution to this would be to run each application on a different physical server. But this solution is not optimal because resources are underutilized and it is very expensive for organizations to be able to maintain so many physical servers.
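The trade-off above can be sketched with a toy model. The assumptions are loud ones: capacity and demand are expressed in made-up "CPU units", and an overloaded server is assumed to split its capacity in proportion to demand. Real schedulers behave differently, so treat this as an illustration only.

```python
def share_without_limits(capacity, demands):
    """Toy model: with no per-app limits, an overloaded server splits
    its capacity in proportion to each app's demand."""
    total = sum(demands.values())
    if total <= capacity:
        return dict(demands)  # enough for everyone
    return {app: capacity * demand / total for app, demand in demands.items()}


def utilization(capacity, demand):
    """Fraction of a dedicated server that one app actually uses."""
    return min(demand, capacity) / capacity


# Hypothetical demands, in the same made-up units as the capacity.
demands = {"greedy_app": 150, "small_app": 30}

# Both apps on one 100-unit server: the small app is starved.
shared = share_without_limits(100, demands)

# One 100-unit server per app: the small app's server sits mostly idle.
dedicated = {app: utilization(100, demand) for app, demand in demands.items()}

if __name__ == "__main__":
    print(shared)
    print(dedicated)
```

In the shared case `small_app` receives about 16.7 units although it needs 30. With dedicated servers it gets what it needs, but uses only 30% of a machine that still has to be bought and maintained.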

2. Virtualized deployment era

- Virtualization was introduced as a solution to the problems of the traditional approach.

- It allows you to run multiple Virtual Machines (VMs) on the CPU of a single physical server. Virtualization allows applications to be isolated between VMs and provides a level of security because one application’s information cannot be freely accessed by another application.

- Virtualization allows for better use of resources in a physical server and allows for better scalability as an application can be added or updated easily, reducing hardware costs and more.

- With virtualization, you can present a set of physical resources as a cluster of virtual machines.

- Each VM is a computer that runs all of its components, including its own operating system, on top of virtualized hardware.

3. Container deployment era

Containers are similar to VMs, but they have relaxed isolation properties so that applications can share the operating system (OS). Therefore, containers are considered lightweight. Like a VM, a container has its own filesystem, share of CPU, memory, process space, and so on. Because containers are decoupled from the underlying infrastructure, they are portable across clouds and OS distributions.

All in all, the main difference between a VM and a container is:

- Virtual machine: takes over part of the host machine's hardware right from the start => resource-consuming, time-consuming, cumbersome.

- Container: used like an ordinary application, it only takes resources when it needs them => fast, easy to set up, and avoids the resources a virtual machine wastes when it is not in use.
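The difference can be put into rough numbers with a deliberately simplified model. It assumes that every VM claims a fixed memory reservation at boot, while a container consumes only what its application currently uses. The reservation size and the per-application usage figures are hypothetical, and the model ignores many real-world details.

```python
VM_RESERVATION_MB = 2048  # hypothetical fixed allocation per VM


def memory_claimed_by_vms(app_usage_mb):
    """Each VM claims its full reservation at boot, whatever the app uses."""
    return VM_RESERVATION_MB * len(app_usage_mb)


def memory_claimed_by_containers(app_usage_mb):
    """Containers draw memory like ordinary processes: only what they use."""
    return sum(app_usage_mb.values())


# Hypothetical memory currently in use by each application, in MB.
usage = {"web": 300, "db": 900, "cache": 100}

if __name__ == "__main__":
    print("VMs:", memory_claimed_by_vms(usage), "MB")
    print("containers:", memory_claimed_by_containers(usage), "MB")
```

With these made-up figures, three VMs hold 6144 MB from the start, while three containers take 1300 MB. The gap is the memory that sits reserved but idle.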
