Future iPhones can better guide people with visual impairments to take photos

- Tram Ho

Although Apple's Camera app was overhauled for the iPhone 11, iPhone 11 Pro, and iPhone 11 Pro Max, it is still designed primarily for users with good vision. In the future, however, the app and new iPhone models may be adapted so that people with visual impairments (including those who have lost their sight) can make better use of the iPhone's camera.

An Apple patent titled "Devices, Methods, and Graphical User Interfaces for Assisted Photo-Taking" describes two approaches to this problem.

The patent, which runs to more than 30,000 words, details ways in which an iPhone, or another device with a camera, can talk to users: for example, while they are reviewing images or, more usefully, to help them line up the camera for a new photo. The device can detect when the camera is tilted or moving, and it can give sound or vibration feedback when the subject in the frame changes.

Camera shake and movement are not the only things that can ruin a picture; the subjects being photographed can move too. For example, the device will vibrate or play a sound when a person (or another object) steps into or out of the preview frame.

The iPhone can vibrate in a variety of patterns, and it can speak aloud to tell users what is happening. These features are intended to help people with a range of visual impairments, including complete loss of sight, take photos.

According to the patent, the iPhone can also give a spoken description of the scene being framed. This description includes information such as the number of subjects, for example "Two people in the lower right corner of the screen" or "Two faces near the camera."
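To make the idea of a spoken scene summary concrete, here is a minimal Python sketch. It assumes a hypothetical detector has already reported each subject's type and its position in normalized screen coordinates (0 to 1, origin at the top left). The three-by-three grid and the names `region_name` and `describe_scene` are illustrative assumptions, not Apple's implementation, and the sketch ignores distance from the camera.

```python
from collections import Counter

NUMBER_WORDS = ["zero", "one", "two", "three", "four", "five",
                "six", "seven", "eight", "nine", "ten"]
PLURALS = {"person": "people", "face": "faces"}


def region_name(x: float, y: float) -> str:
    """Map a normalized (x, y) position onto a coarse 3x3 screen grid."""
    col = "left" if x < 1 / 3 else ("right" if x > 2 / 3 else "center")
    row = "upper" if y < 1 / 3 else ("lower" if y > 2 / 3 else "middle")
    if row == "middle" and col == "center":
        return "the center of the screen"
    if row == "middle":
        return f"the {col} edge of the screen"
    if col == "center":
        return "the top of the screen" if row == "upper" else "the bottom of the screen"
    return f"the {row} {col} corner of the screen"


def describe_scene(subjects: list[tuple[str, float, float]]) -> str:
    """Group (kind, x, y) detections by kind and region, then phrase each group."""
    groups = Counter((kind, region_name(x, y)) for kind, x, y in subjects)
    phrases = []
    for (kind, region), n in groups.items():
        count = NUMBER_WORDS[n] if n < len(NUMBER_WORDS) else str(n)
        noun = kind if n == 1 else PLURALS.get(kind, kind + "s")
        phrases.append(f"{count} {noun} in {region}")
    sentence = "; ".join(phrases)
    return sentence[:1].upper() + sentence[1:]  # capitalize the first letter
```

For instance, `describe_scene([("person", 0.8, 0.85), ("person", 0.9, 0.75)])` yields "Two people in the lower right corner of the screen", matching the first example phrase quoted from the patent.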

The description can be more specific if desired. The Photos app can already detect faces in photos and let you tag them with names, as services such as Facebook do, and the new Camera app features could make use of those tags. The Camera app could also draw on other information on the phone, such as contact details or faces already identified in Photos.

"The device can access a multimedia collection of one or more photos and/or videos that have been tagged by someone," the patent continues. "The audio description of the scene then identifies the individuals in the scene by name (stating the name of the person as tagged in the multimedia collection, such as 'Samantha and Alex standing near the camera in the lower right corner of the screen')."

A problem arises, however, when a photo contains many people, or when the frame is simply crammed with too many things. The future Camera app could therefore assign priorities, for example treating only the three people closest to the camera as subjects in a crowd of dozens.

"The device can allow users to interact with subjects above a certain threshold," the patent goes on, "so that users are not overwhelmed by a scene with too many detected objects."
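The prioritization described above, a threshold followed by a cap such as "the three people closest to the camera", can be sketched in a few lines of Python. This is an illustration under stated assumptions, not Apple's method: the `Subject` fields (`distance_m`, `score`), the `prioritize` function, and the default `min_score` of 0.5 are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Subject:
    label: str
    distance_m: float  # hypothetical: estimated distance from the camera, in meters
    score: float       # hypothetical: detector confidence or importance, 0..1


def prioritize(subjects: list[Subject], max_subjects: int = 3,
               min_score: float = 0.5) -> list[Subject]:
    """Drop subjects below the threshold, then keep the closest few."""
    eligible = [s for s in subjects if s.score >= min_score]
    # sorted() is stable, so subjects at equal distance keep their detection order.
    return sorted(eligible, key=lambda s: s.distance_m)[:max_subjects]
```

Given subjects A (5.0 m), B (1.2 m), C (2.0 m, score 0.3), D (3.0 m), and E (1.5 m), `prioritize` returns B, E, and D: C falls below the threshold and A is the farthest of the remaining four. Filtering before sorting keeps a nearby but low-confidence detection from crowding out a real subject.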

Calculating those priorities, or simplifying the descriptions given to users, is one thing. Conveying that information is another, and that is where feedback combining sound and vibration becomes important.

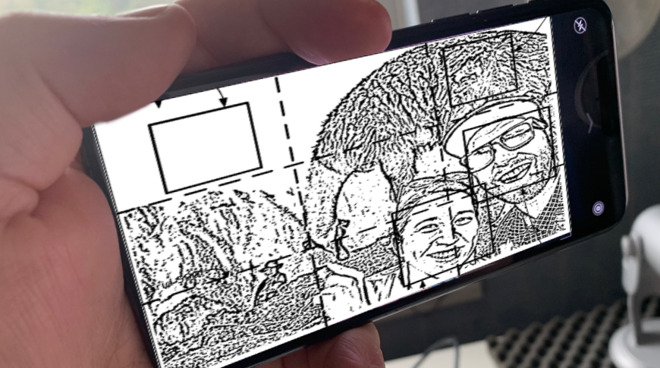

First, the software identifies which parts of the image are important, or "above a certain threshold," and marks them with what Apple calls a "bounding box." The device then has to tell users where those boxes are located on the screen.

A user with low or limited vision may still be able to find a bounding box and touch it. Touching the box produces a second, more detailed spoken description.

The patent gives examples of a voice describing a specific subject, such as "A bearded man, smiling, standing near the camera on the right edge of the photo," "A bearded man wearing sunglasses and a hat in the lower right corner of the screen," or "Alex is laughing at the lower right of the screen."

Where the first description gave the person's name, this second description would describe "one or more of the characteristics (such as gender, facial expressions, facial features, or whether the person is wearing glasses, a hat, or other accessories…) specific to a particular subject or individual."

For users who cannot see the bounding box at all, the iPhone or another device can use vibration feedback, for example vibrating when the user's finger, swiping across the screen, passes through a bounding box.
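The swipe-to-find behavior amounts to hit-testing the finger's path against the boxes that mark important subjects. Below is a minimal Python sketch, assuming the swipe arrives as a list of sampled touch points in normalized coordinates and that each box is an axis-aligned rectangle. `Box` and `haptic_events` are hypothetical names; on a real device each event would presumably trigger the system's haptics rather than be collected in a list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    label: str
    x: float  # left edge, normalized 0..1
    y: float  # top edge, normalized 0..1
    w: float  # width, normalized
    h: float  # height, normalized

    def contains(self, px: float, py: float) -> bool:
        return self.x <= px <= self.x + self.w and self.y <= py <= self.y + self.h


def haptic_events(path: list[tuple[float, float]],
                  boxes: list[Box]) -> list[tuple[int, str]]:
    """Return (sample index, box label) each time the finger enters a box."""
    events = []
    inside: set[int] = set()  # indices of boxes under the finger at the previous sample
    for i, (px, py) in enumerate(path):
        now = {j for j, box in enumerate(boxes) if box.contains(px, py)}
        for j in sorted(now - inside):  # only boxes entered at this sample
            events.append((i, boxes[j].label))
        inside = now
    return events
```

Firing only on entry, rather than on every sample inside a box, means a finger resting on a subject produces a single pulse, and sliding off and back on produces a new one.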

The patent suggests that when the camera is in "accessibility mode," common gestures such as tap-to-focus would be replaced by special gestures designed specifically for users with visual impairments.

"For example, allowing users to select objects by moving their finger on the preview screen before accessibility mode is activated…"

The patent lists four inventors: Christopher B. Fleizach, Darren C. Minifie, Eryn R. Wells, and Nandini Kannamangalam Sundara Raman. Fleizach is also named on related patents such as "Voice control to diagnose accidental activation of accessibility features," while Minifie is named on the related patent "Scan interface for people with disabilities."

Reference: AppleInsider

Source: Genk