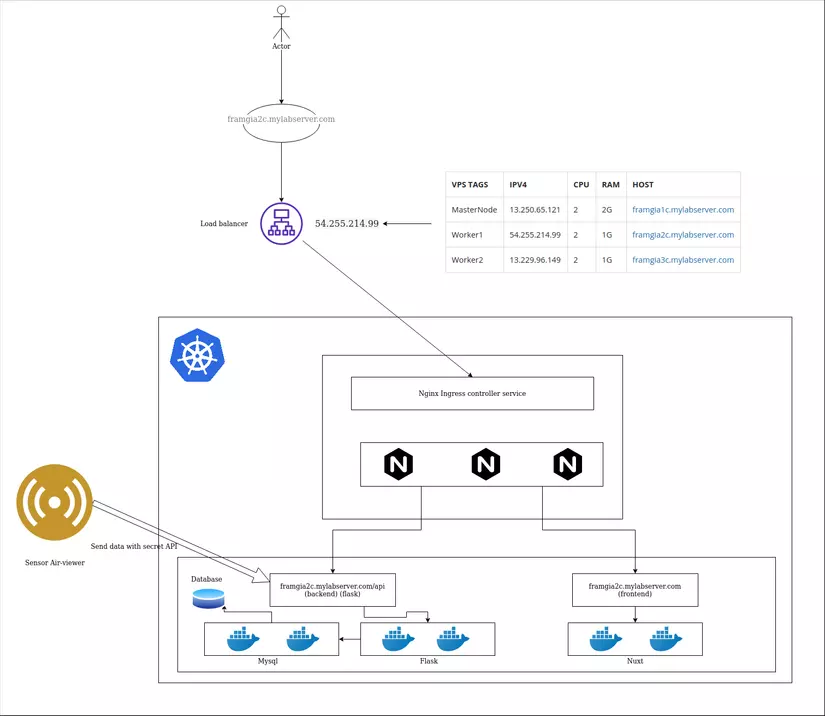

In the previous part I deployed nginx together with sample services such as tea and coffee. In this part I will deploy a web application with the architecture shown in the picture above. I will not go into detail on the sensor part; for that you can refer here or via GitHub. The application consists of Flask, MySQL and Nuxt.js services routed through nginx-ingress, with the worker node used as the domain and load balancer. The project, which measures the concentration of dust pollution in the air, is also built as microservices; you can find it at https://github.com/sun-asterisk-research/air-viewer

Clean up the previous section

```shell
# delete the services
kubectl delete service coffee-svc tea-svc
# delete the deployments
kubectl delete deployment coffee tea
# delete the ingress of the services
kubectl delete ingress cafe-ingress
# delete the secret of the ingress
kubectl delete secret cafe-secret
```

Clone Project Air-viewer

```shell
git clone https://github.com/sun-asterisk-research/air-viewer.git
```

Installation for those who have not read the previous post

I already installed kubernetes-ingress in the previous part, so I will go through this quickly for anyone who has not installed it yet. From now on, commands are run on the master node by default.

```shell
# go to the install directory
cd air-viewer/kubernetes/nginx-ingress/install
```

Create a Namespace, a SA, the Default Secret, the Customization Config Map, and Custom Resource Definitions

```shell
kubectl apply -f ns-and-sa.yaml
```

Create a secret with the TLS certificate and the key for the default server NGINX:

```shell
kubectl apply -f default-server-secret.yaml
```

Create a config map for customizing NGINX configuration

```shell
kubectl apply -f nginx-config.yaml
```

Create custom resource definitions for VirtualServer and VirtualServerRoute

```shell
kubectl apply -f custom-resource-definitions.yaml
```

Configure RBAC

```shell
kubectl apply -f rbac.yaml
```

Deploy the Ingress Controller

```shell
kubectl apply -f nginx-ingress.yaml
```

Config Air-viewer

Create Namespaces

```shell
kubectl create namespace air-viewer
```

Deploy Mysql

To deploy MySQL we need a persistent volume, so that the database is not lost, and Secrets that hold the root and user passwords. Keeping the passwords in Secrets hides them from people who manage other services and could otherwise read them through `kubectl describe deployment` on the MySQL deployment. Finally, there are the files that contain the service and the deployment.

```shell
cd air-viewer/kubernetes/mysql
```

Secret

```shell
echo -n "root" | base64
```

Output: cm9vdA==
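The second Secret in the manifest stores the non-root password in the same base64 form. As a small sketch, the following encodes `example` (the value behind `ZXhhbXBsZQ==`) and decodes `cm9vdA==` back to check it; the variable names are only illustrative. Note that base64 is an encoding, not encryption, so anyone who can read the Secret can recover the password.

```shell
# Encode the non-root password; printf avoids the trailing newline that a plain echo would add.
encoded=$(printf '%s' "example" | base64)
echo "$encoded"

# Decode a value copied from a Secret manifest to check what it contains.
decoded=$(printf '%s' "cm9vdA==" | base64 --decode)
echo "$decoded"
```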

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: mysql-secrets
  namespace: air-viewer
type: Opaque
data:
  ROOT_PASSWORD: cm9vdA==
---
apiVersion: v1
kind: Secret
metadata:
  name: mysql-pass-non-root
  namespace: air-viewer
type: Opaque
data:
  ROOT_PASSWORD: ZXhhbXBsZQ==
```

```shell
kubectl apply -f secret.yaml
```

We can list the secrets in the air-viewer namespace:

```shell
kubectl get secret -n air-viewer
```

Output:

```text
NAME                  TYPE                                  DATA   AGE
default-token-p6j5h   kubernetes.io/service-account-token   3      105m
mysql-pass-non-root   Opaque                                1      3m54s
mysql-secrets         Opaque                                1      3m54s
```

Describe mysql-secrets to show its details (mysql-pass-non-root looks similar):

```shell
kubectl describe secret mysql-secrets -n air-viewer
```

Output:

```text
Name:         mysql-secrets
Namespace:    air-viewer
Labels:       <none>
Annotations:

Type:  Opaque

Data
====
ROOT_PASSWORD:  4 bytes
```
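`kubectl describe` only reports the size of each key, which is why the passwords are moved out of the Deployment. Anyone with read access to the Secret can still recover the value, though. A minimal sketch, assuming `kubectl` is configured for this cluster; `read_secret_key` is a hypothetical helper name, not part of the project:

```shell
# Hypothetical helper: print the decoded value of one key of a Secret.
# Usage: read_secret_key <secret-name> <key> [namespace]
read_secret_key() {
  kubectl get secret "$1" -n "${3:-air-viewer}" \
    -o jsonpath="{.data.$2}" | base64 --decode
}
```

Calling `read_secret_key mysql-secrets ROOT_PASSWORD` should print the password that was encoded earlier.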

Persistent Storage

A container is ephemeral: any changes made inside a running container are lost when the container stops. Containers on their own are therefore not suitable for storing a database, so we must mount a persistent volume into the MySQL pod.

```yaml
# create an empty 2Gi volume
apiVersion: v1
kind: PersistentVolume
metadata:
  name: mysql-pv-volume
  namespace: air-viewer
  labels:
    type: local
spec:
  storageClassName: manual
  capacity:
    storage: 2Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "/mnt/data"
---
# mysql will use mysql-pv-claim through the deployment
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mysql-pv-claim
  namespace: air-viewer
spec:
  storageClassName: manual
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 2Gi
```

```shell
kubectl apply -f persistentVolume.yaml
```
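Before creating the Deployment it can help to confirm that the claim actually bound to the volume, since a pod that references an unbound claim stays in `Pending`. A small sketch, assuming `kubectl` access to the cluster; `pvc_phase` is a hypothetical helper name:

```shell
# Hypothetical helper: print the phase of a PersistentVolumeClaim (Pending, Bound or Lost).
# Usage: pvc_phase <claim-name> [namespace]
pvc_phase() {
  kubectl get pvc "$1" -n "${2:-air-viewer}" -o jsonpath='{.status.phase}'
}
```

`pvc_phase mysql-pv-claim` should print `Bound` once the claim has been matched to `mysql-pv-volume`.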

Deployment

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysql
  labels:
    app: mysql
  namespace: air-viewer
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mysql
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
        - image: mysql:5.7
          name: mysql
          env:
            - name: MYSQL_ROOT_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: mysql-secrets
                  key: ROOT_PASSWORD
            - name: TZ
              value: Asia/Ho_Chi_Minh
            - name: MYSQL_DATABASE
              value: air_viewer
            - name: MYSQL_USER
              value: example
            - name: MYSQL_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: mysql-pass-non-root
                  key: ROOT_PASSWORD
          ports:
            - containerPort: 3306
              name: mysql
          volumeMounts:
            - name: mysql-persistent-storage
              mountPath: /var/lib/mysql
      volumes:
        - name: mysql-persistent-storage
          persistentVolumeClaim:
            claimName: mysql-pv-claim
```

As shown above, the deployment references both Secrets through `secretKeyRef` and mounts the volume through `mysql-pv-claim`.

```shell
kubectl apply -f deployment.yaml
```
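The apply command returns as soon as the object is accepted, not when the pod is ready. One way to block until the rollout finishes is `kubectl rollout status`; the wrapper name `wait_for_deployment` and the 120s default timeout are assumptions made for illustration:

```shell
# Hypothetical helper: wait until a Deployment has finished rolling out.
# Usage: wait_for_deployment <deployment-name> [namespace] [timeout]
wait_for_deployment() {
  kubectl rollout status "deployment/$1" -n "${2:-air-viewer}" --timeout="${3:-120s}"
}
```

`wait_for_deployment mysql` exits with a non-zero status if the rollout does not complete within the timeout, which makes it easy to stop a script before later steps run against a database that is not up yet.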

Check the status of the mysql deployment

```shell
kubectl describe deployment mysql -n air-viewer
```

Output:

```text
Name:               mysql
Namespace:          air-viewer
CreationTimestamp:  Mon, 17 Feb 2020 06:19:55 +0000
Labels:             app=mysql
Annotations:        deployment.kubernetes.io/revision: 1
                    kubectl.kubernetes.io/last-applied-configuration:
                      {"apiVersion":"apps/v1","kind":"Deployment","metadata":{"annotations":{},"labels":{"app":"mysql"},"name":"mysql","namespace":"air-viewer"}...
Selector:           app=mysql
Replicas:           1 desired | 1 updated | 1 total | 1 available | 0 unavailable
StrategyType:       Recreate
MinReadySeconds:    0
Pod Template:
  Labels:  app=mysql
  Containers:
   mysql:
    Image:      mysql:5.7
    Port:       3306/TCP
    Host Port:  0/TCP
    Environment:
      MYSQL_ROOT_PASSWORD:  <set to the key 'ROOT_PASSWORD' in secret 'mysql-secrets'>  Optional: false
      TZ:                   Asia/Ho_Chi_Minh
      MYSQL_DATABASE:       air_viewer
      MYSQL_USER:           example
      MYSQL_PASSWORD:       <set to the key 'ROOT_PASSWORD' in secret 'mysql-pass-non-root'>  Optional: false
    Mounts:
      /var/lib/mysql from mysql-persistent-storage (rw)
  Volumes:
   mysql-persistent-storage:
    Type:       PersistentVolumeClaim (a reference to a PersistentVolumeClaim in the same namespace)
    ClaimName:  mysql-pv-claim
    ReadOnly:   false
Conditions:
  Type           Status  Reason
  ----           ------  ------
  Available      True    MinimumReplicasAvailable
  Progressing    True    NewReplicaSetAvailable
OldReplicaSets:  <none>
NewReplicaSet:   mysql-6454669767 (1/1 replicas created)
Events:
  Type    Reason             Age   From                   Message
  ----    ------             ----  ----                   -------
  Normal  ScalingReplicaSet  99s   deployment-controller  Scaled up replica set mysql-6454669767 to 1
```

Open a shell inside the created pod

```shell
kubectl get pods -n air-viewer
```

Output:

```text
NAME                     READY   STATUS    RESTARTS   AGE
mysql-6454669767-7md6f   1/1     Running   0          6m18s
```

```shell
kubectl exec -it mysql-6454669767-7md6f -n air-viewer -- bash
```

Output:

```text
/# mysql -u root -p
password: root (the value that was base64-encoded above) gives access to MySQL
```

Service

Build a service for the pod(s)

```yaml
apiVersion: v1
kind: Service
metadata:
  name: mysql
  namespace: air-viewer
  labels:
    app: mysql
spec:
  type: ClusterIP
  ports:
    - port: 3306
  selector:
    app: mysql
```

```shell
kubectl apply -f service.yaml
```

Review:

```shell
kubectl describe svc mysql -n air-viewer
```

Output:

```text
Name:              mysql
Namespace:         air-viewer
Labels:            app=mysql
Annotations:       kubectl.kubernetes.io/last-applied-configuration:
                     {"apiVersion":"v1","kind":"Service","metadata":{"annotations":{},"labels":{"app":"mysql"},"name":"mysql","namespace":"air-viewer"},"spec":...
Selector:          app=mysql
Type:              ClusterIP
IP:                10.108.61.223
Port:              <unset>  3306/TCP
TargetPort:        3306/TCP
Endpoints:         10.244.1.3:3306
Session Affinity:  None
Events:            <none>
```
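Because the Service is of type ClusterIP, MySQL is only reachable from inside the cluster, where the Service name resolves through the cluster DNS. Pods in the same namespace can use the short name `mysql`; from another namespace the fully qualified name is needed. A sketch of how that name is composed, assuming the default cluster domain `cluster.local` (your cluster may use a different one); `service_fqdn` is a hypothetical helper:

```shell
# Hypothetical helper: build the in-cluster DNS name of a Service.
# Usage: service_fqdn <service> <namespace> [cluster-domain]
service_fqdn() {
  printf '%s.%s.svc.%s\n' "$1" "$2" "${3:-cluster.local}"
}

service_fqdn mysql air-viewer
```

This prints `mysql.air-viewer.svc.cluster.local`.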

Deploy Backend (Flask, UWSGI)

Deployment

```shell
cd air-viewer/kubernetes/backend
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: backend-air-viewer
  labels:
    app: backend-air-viewer
  namespace: air-viewer
spec:
  replicas: 2
  selector:
    matchLabels:
      app: backend-air-viewer
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: backend-air-viewer
    spec:
      containers:
        - image: quanghung97/backend-air-viewer:v1
          imagePullPolicy: Always
          name: backend-air-viewer
          env:
            - name: DB_HOST
              value: mysql:3306
            - name: DB_DATABASE
              value: air_viewer
            - name: DB_USERNAME
              value: example
            - name: DB_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: mysql-pass-non-root
                  key: ROOT_PASSWORD
          ports:
            - containerPort: 80
              protocol: TCP
```

This deployment reuses the database user and the password Secret created earlier.

```shell
kubectl apply -f deployment.yaml
```

Check:

```shell
kubectl describe deployment backend-air-viewer -n air-viewer
```

Output:

```text
Name:               backend-air-viewer
Namespace:          air-viewer
CreationTimestamp:  Mon, 17 Feb 2020 06:53:19 +0000
Labels:             app=backend-air-viewer
Annotations:        deployment.kubernetes.io/revision: 1
                    kubectl.kubernetes.io/last-applied-configuration:
                      {"apiVersion":"apps/v1","kind":"Deployment","metadata":{"annotations":{},"labels":{"app":"backend-air-viewer"},"name":"backend-air-viewer"...
Selector:           app=backend-air-viewer
Replicas:           2 desired | 2 updated | 2 total | 2 available | 0 unavailable
StrategyType:       Recreate
MinReadySeconds:    0
Pod Template:
  Labels:  app=backend-air-viewer
  Containers:
   backend-air-viewer:
    Image:      quanghung97/backend-air-viewer:v1
    Port:       80/TCP
    Host Port:  0/TCP
    Environment:
      DB_HOST:      mysql:3306
      DB_DATABASE:  air_viewer
      DB_USERNAME:  example
      DB_PASSWORD:  <set to the key 'ROOT_PASSWORD' in secret 'mysql-pass-non-root'>  Optional: false
    Mounts:         <none>
  Volumes:          <none>
Conditions:
  Type           Status  Reason
  ----           ------  ------
  Available      True    MinimumReplicasAvailable
  Progressing    True    NewReplicaSetAvailable
OldReplicaSets:  <none>
NewReplicaSet:   backend-air-viewer-69dd45dfbd (2/2 replicas created)
Events:
  Type    Reason             Age   From                   Message
  ----    ------             ----  ----                   -------
  Normal  ScalingReplicaSet  2m3s  deployment-controller  Scaled up replica set backend-air-viewer-69dd45dfbd to 2
```

Service

```yaml
apiVersion: v1
kind: Service
metadata:
  name: backend-air-viewer
  namespace: air-viewer
  labels:
    app: backend-air-viewer
spec:
  selector:
    app: backend-air-viewer
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
```

```shell
kubectl apply -f service.yaml
```

Deploy Frontend (Nuxt.js)

```shell
cd air-viewer/kubernetes/frontend
```

Deployment

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: frontend-air-viewer
  labels:
    app: frontend-air-viewer
  namespace: air-viewer
spec:
  replicas: 3
  selector:
    matchLabels:
      app: frontend-air-viewer
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: frontend-air-viewer
    spec:
      containers:
        - image: quanghung97/frontend-air-viewer:framgia2c
          imagePullPolicy: Always
          name: frontend-air-viewer
          ports:
            - containerPort: 3000
              protocol: TCP
          env:
            - name: TZ
              value: Asia/Ho_Chi_Minh
```

```shell
kubectl apply -f deployment.yaml
```

Service

```yaml
apiVersion: v1
kind: Service
metadata:
  name: frontend-air-viewer
  namespace: air-viewer
  labels:
    app: frontend-air-viewer
spec:
  selector:
    app: frontend-air-viewer
  type: ClusterIP
  ports:
    - protocol: TCP
      port: 80
      targetPort: 3000
```

```shell
kubectl apply -f service.yaml
```

Check:

1 2 | kubectl describe deployment frontend-air-viewer -n air-viewer |

Output:

```
Name:                   frontend-air-viewer
Namespace:              air-viewer
CreationTimestamp:      Mon, 17 Feb 2020 06:59:19 +0000
Labels:                 app=frontend-air-viewer
Annotations:            deployment.kubernetes.io/revision: 1
                        kubectl.kubernetes.io/last-applied-configuration:
                          {"apiVersion":"apps/v1","kind":"Deployment","metadata":{"annotations":{},"labels":{"app":"frontend-air-viewer"},"name":"frontend-air-viewe...
Selector:               app=frontend-air-viewer
Replicas:               3 desired | 3 updated | 3 total | 3 available | 0 unavailable
StrategyType:           Recreate
MinReadySeconds:        0
Pod Template:
  Labels:  app=frontend-air-viewer
  Containers:
   frontend-air-viewer:
    Image:        quanghung97/frontend-air-viewer:framgia2c
    Port:         3000/TCP
    Host Port:    0/TCP
    Environment:
      TZ:  Asia/Ho_Chi_Minh
    Mounts:       <none>
  Volumes:        <none>
Conditions:
  Type           Status  Reason
  ----           ------  ------
  Available      True    MinimumReplicasAvailable
  Progressing    True    NewReplicaSetAvailable
OldReplicaSets:  <none>
NewReplicaSet:   frontend-air-viewer-67d5dd75c7 (3/3 replicas created)
Events:
  Type    Reason             Age   From                   Message
  ----    ------             ----  ----                   -------
  Normal  ScalingReplicaSet  88s   deployment-controller  Scaled up replica set frontend-air-viewer-67d5dd75c7 to 3
```
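The line to look at in this output is `Replicas:`. If you want to check it from a script instead of by eye, a minimal sketch could look like the following. The helper name `parse_replicas` is my own, and it assumes the line has exactly the "N name | N name" layout shown above.

```python
import re


def parse_replicas(line):
    """Turn 'Replicas: 3 desired | 3 updated | ...' into a dict of counts."""
    return {name: int(count) for count, name in re.findall(r"(\d+) (\w+)", line)}


line = "Replicas: 3 desired | 3 updated | 3 total | 3 available | 0 unavailable"
counts = parse_replicas(line)

# The deployment is healthy when every desired replica is available.
ready = counts["available"] == counts["desired"] and counts["unavailable"] == 0
print(counts, ready)
```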

Ingress controller config

VirtualServer resource: a VirtualServer configures load balancing for a domain name.

This part uses the VirtualServer and VirtualServerRoute resources; you can learn more about them here

```bash
cd air-viewer/kubernetes/nginx-ingress
```

TLS secret for the domain framgia2c.mylabserver.com

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: air-viewer-secret
  namespace: air-viewer
type: Opaque
data:
  # change this to your crt and key with your DNS
  tls.crt: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURMakNDQWhZQ0NRREFPRjl0THNhWFdqQU5CZ2txaGtpRzl3MEJBUXNGQURCYU1Rc3dDUVlEVlFRR0V3SlYKVXpFTE1Ba0dBMVVFQ0F3Q1EwRXhJVEFmQmdOVkJBb01HRWx1ZEdWeWJtVjBJRmRwWkdkcGRITWdVSFI1SUV4MApaREViTUJrR0ExVUVBd3dTWTJGbVpTNWxlR0Z0Y0d4bExtTnZiU0FnTUI0WERURTRNRGt4TWpFMk1UVXpOVm9YCkRUSXpNRGt4TVRFMk1UVXpOVm93V0RFTE1Ba0dBMVVFQmhNQ1ZWTXhDekFKQmdOVkJBZ01Ba05CTVNFd0h3WUQKVlFRS0RCaEpiblJsY201bGRDQlhhV1JuYVhSeklGQjBlU0JNZEdReEdUQVhCZ05WQkFNTUVHTmhabVV1WlhoaApiWEJzWlM1amIyMHdnZ0VpTUEwR0NTcUdTSWIzRFFFQkFRVUFBNElCRHdBd2dnRUtBb0lCQVFDcDZLbjdzeTgxCnAwanVKL2N5ayt2Q0FtbHNmanRGTTJtdVpOSzBLdGVjcUcyZmpXUWI1NXhRMVlGQTJYT1N3SEFZdlNkd0kyaloKcnVXOHFYWENMMnJiNENaQ0Z4d3BWRUNyY3hkam0zdGVWaVJYVnNZSW1tSkhQUFN5UWdwaW9iczl4N0RsTGM2SQpCQTBaalVPeWwwUHFHOVNKZXhNVjczV0lJYTVyRFZTRjJyNGtTa2JBajREY2o3TFhlRmxWWEgySTVYd1hDcHRDCm42N0pDZzQyZitrOHdnemNSVnA4WFprWldaVmp3cTlSVUtEWG1GQjJZeU4xWEVXZFowZXdSdUtZVUpsc202OTIKc2tPcktRajB2a29QbjQxRUUvK1RhVkVwcUxUUm9VWTNyemc3RGtkemZkQml6Rk8yZHNQTkZ4MkNXMGpYa05MdgpLbzI1Q1pyT2hYQUhBZ01CQUFFd0RRWUpLb1pJaHZjTkFRRUxCUUFEZ2dFQkFLSEZDY3lPalp2b0hzd1VCTWRMClJkSEliMzgzcFdGeW5acS9MdVVvdnNWQTU4QjBDZzdCRWZ5NXZXVlZycTVSSWt2NGxaODFOMjl4MjFkMUpINnIKalNuUXgrRFhDTy9USkVWNWxTQ1VwSUd6RVVZYVVQZ1J5anNNL05VZENKOHVIVmhaSitTNkZBK0NuT0Q5cm4yaQpaQmVQQ0k1ckh3RVh3bm5sOHl3aWozdnZRNXpISXV5QmdsV3IvUXl1aTlmalBwd1dVdlVtNG52NVNNRzl6Q1Y3ClBwdXd2dWF0cWpPMTIwOEJqZkUvY1pISWc4SHc5bXZXOXg5QytJUU1JTURFN2IvZzZPY0s3TEdUTHdsRnh2QTgKN1dqRWVxdW5heUlwaE1oS1JYVmYxTjM0OWVOOThFejM4Zk9USFRQYmRKakZBL1BjQytHeW1lK2lHdDVPUWRGaAp5UkU9Ci0tLS0tRU5EIENFUlRJRklDQVRFLS0tLS0K
  tls.key: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFb3dJQkFBS0NBUUVBcWVpcCs3TXZOYWRJN2lmM01wUHJ3Z0pwYkg0N1JUTnBybVRTdENyWG5LaHRuNDFrCkcrZWNVTldCUU5semtzQndHTDBuY0NObzJhN2x2S2wxd2k5cTIrQW1RaGNjS1ZSQXEzTVhZNXQ3WGxZa1YxYkcKQ0pwaVJ6ejBza0lLWXFHN1BjZXc1UzNPaUFRTkdZMURzcGRENmh2VWlYc1RGZTkxaUNHdWF3MVVoZHErSkVwRwp3SStBM0kreTEzaFpWVng5aU9WOEZ3cWJRcCt1eVFvT05uL3BQTUlNM0VWYWZGMlpHVm1WWThLdlVWQ2cxNWhRCmRtTWpkVnhGbldkSHNFYmltRkNaYkp1dmRySkRxeWtJOUw1S0Q1K05SQlAvazJsUkthaTAwYUZHTjY4NE93NUgKYzMzUVlzeFR0bmJEelJjZGdsdEkxNURTN3lxTnVRbWF6b1Z3QndJREFRQUJBb0lCQVFDUFNkU1luUXRTUHlxbApGZlZGcFRPc29PWVJoZjhzSStpYkZ4SU91UmF1V2VoaEp4ZG01Uk9ScEF6bUNMeUw1VmhqdEptZTIyM2dMcncyCk45OUVqVUtiL1ZPbVp1RHNCYzZvQ0Y2UU5SNThkejhjbk9SVGV3Y290c0pSMXBuMWhobG5SNUhxSkpCSmFzazEKWkVuVVFmY1hackw5NGxvOUpIM0UrVXFqbzFGRnM4eHhFOHdvUEJxalpzVjdwUlVaZ0MzTGh4bndMU0V4eUZvNApjeGI5U09HNU9tQUpvelN0Rm9RMkdKT2VzOHJKNXFmZHZ5dGdnOXhiTGFRTC94MGtwUTYyQm9GTUJEZHFPZVBXCktmUDV6WjYvMDcvdnBqNDh5QTFRMzJQem9idWJzQkxkM0tjbjMyamZtMUU3cHJ0V2wrSmVPRmlPem5CUUZKYk4KNHFQVlJ6NWhBb0dCQU50V3l4aE5DU0x1NFArWGdLeWNrbGpKNkY1NjY4Zk5qNUN6Z0ZScUowOXpuMFRsc05ybwpGVExaY3hEcW5SM0hQWU00MkpFUmgySi9xREZaeW5SUW8zY2czb2VpdlVkQlZHWTgrRkkxVzBxZHViL0w5K3l1CmVkT1pUUTVYbUdHcDZyNmpleHltY0ppbS9Pc0IzWm5ZT3BPcmxEN1NQbUJ2ek5MazRNRjZneGJYQW9HQkFNWk8KMHA2SGJCbWNQMHRqRlhmY0tFNzdJbUxtMHNBRzR1SG9VeDBlUGovMnFyblRuT0JCTkU0TXZnRHVUSnp5K2NhVQprOFJxbWRIQ2JIelRlNmZ6WXEvOWl0OHNaNzdLVk4xcWtiSWN1YytSVHhBOW5OaDFUanNSbmU3NFowajFGQ0xrCmhIY3FIMHJpN1BZU0tIVEU4RnZGQ3haWWRidUI4NENtWmlodnhicFJBb0dBSWJqcWFNWVBUWXVrbENkYTVTNzkKWVNGSjFKelplMUtqYS8vdER3MXpGY2dWQ0thMzFqQXdjaXowZi9sU1JxM0hTMUdHR21lemhQVlRpcUxmZVpxYwpSMGlLYmhnYk9jVlZrSkozSzB5QXlLd1BUdW14S0haNnpJbVpTMGMwYW0rUlk5WUdxNVQ3WXJ6cHpjZnZwaU9VCmZmZTNSeUZUN2NmQ21mb09oREN0enVrQ2dZQjMwb0xDMVJMRk9ycW40M3ZDUzUxemM1em9ZNDR1QnpzcHd3WU4KVHd2UC9FeFdNZjNWSnJEakJDSCtULzZzeXNlUGJKRUltbHpNK0l3eXRGcEFOZmlJWEV0LzQ4WGY2ME54OGdXTQp1SHl4Wlp4L05LdER3MFY4dlgxUE9ucTJBNWVpS2ErOGpSQVJZS0pMWU5kZkR1d29seHZHNmJaaGtQaS80RXRUCjNZMThzUUtCZ0h0S2JrKzdsTkpWZXN3WEU1Y1VHNkVEVXNEZS8yVWE3ZlhwN0ZjanFCRW9hcDFMU3crNlRYcDAKWmdybUtFOEFSek00NytFSkhVdmlpcS9udXBFMTVnMGtKVzNzeWhwVTl6WkxPN2x0QjBLSWtPOVpSY21Vam84UQpjcExsSE1BcWJMSjhXWUdKQ2toaVd4eWFsNmhZVHlXWTRjVmtDMHh0VGwvaFVFOUllTktvCi0tLS0tRU5EIFJTQSBQUklWQVRFIEtFWS0tLS0tCg==
```

```bash
kubectl apply -f air-viewer-secret.yaml
```
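The `tls.crt` and `tls.key` values in a Secret's `data` field are the PEM files encoded as base64 on a single line, which is why both values start with `LS0tLS1CRUdJTi` (base64 for `-----BEGIN`). When you swap in your own certificate, a small sketch like this produces the value to paste. The helper name is my own and the PEM text is a placeholder, not a real certificate.

```python
import base64


def encode_for_secret(pem_text):
    """Base64-encode PEM text as one line, as a Secret's `data` field expects."""
    return base64.b64encode(pem_text.encode("ascii")).decode("ascii")


# Placeholder PEM body for illustration; read your real .crt / .key file instead.
pem = "-----BEGIN CERTIFICATE-----\nPLACEHOLDER\n-----END CERTIFICATE-----\n"

encoded = encode_for_secret(pem)
print(encoded)

# Decoding gives back exactly the original PEM text.
decoded = base64.b64decode(encoded).decode("ascii")
```

In practice you would pass the contents of your certificate file, for example `encode_for_secret(open("your.crt").read())`, where `your.crt` is whatever file your certificate lives in.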

Backend Virtual Server Route

Configure the backend route with prefix /api

```yaml
apiVersion: k8s.nginx.org/v1
kind: VirtualServerRoute
metadata:
  name: backend-air-viewer
  namespace: air-viewer
spec:
  # change this to your DNS
  host: framgia2c.mylabserver.com
  upstreams:
    - name: backend-air-viewer
      service: backend-air-viewer
      port: 80
  subroutes:
    - path: /api
      action:
        pass: backend-air-viewer
```

```bash
kubectl apply -f backend-virtual-server-route.yaml
```

Frontend Virtual Server Route

Configure the frontend’s route with the prefix /

```yaml
apiVersion: k8s.nginx.org/v1
kind: VirtualServerRoute
metadata:
  name: frontend-air-viewer
  namespace: air-viewer
spec:
  # change this to your DNS
  host: framgia2c.mylabserver.com
  upstreams:
    - name: frontend-air-viewer
      service: frontend-air-viewer
      port: 80
  subroutes:
    - path: /
      action:
        pass: frontend-air-viewer
```

```bash
kubectl apply -f frontend-virtual-server-route.yaml
```

Air-viewer Virtual Server

Map the path rules for the NGINX Ingress Controller

```yaml
apiVersion: k8s.nginx.org/v1
kind: VirtualServer
metadata:
  name: air-viewer
  namespace: air-viewer
spec:
  # change this to your DNS
  host: framgia2c.mylabserver.com
  tls:
    secret: air-viewer-secret
  routes:
    - path: /api
      route: air-viewer/backend-air-viewer
    - path: /
      route: air-viewer/frontend-air-viewer
```
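To see why a request to `/api/...` goes to the backend while everything else goes to the frontend, here is a simplified Python model of the two path rules. It assumes the paths behave like NGINX prefix locations, where the longest matching prefix wins. The function name is my own, and the real controller generates NGINX configuration rather than running anything like this.

```python
# Path prefix -> VirtualServerRoute, copied from the VirtualServer manifest.
ROUTES = {
    "/api": "air-viewer/backend-air-viewer",
    "/": "air-viewer/frontend-air-viewer",
}


def pick_route(path, routes=ROUTES):
    """Return the route whose prefix is the longest one matching the request path."""
    matches = [prefix for prefix in routes if path.startswith(prefix)]
    if not matches:
        return None
    return routes[max(matches, key=len)]


print(pick_route("/api/"))    # backend route
print(pick_route("/en/faq"))  # frontend route
```

Both prefixes match `/api/`, but `/api` is longer than `/`, so the backend route is chosen; `/en/faq` only matches `/`.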

Check the frontend-air-viewer route (the check for backend-air-viewer is similar)

```bash
kubectl describe virtualserverroute frontend-air-viewer -n air-viewer
```

output:

```
Name:         frontend-air-viewer
Namespace:    air-viewer
Labels:       <none>
Annotations:  kubectl.kubernetes.io/last-applied-configuration:
                {"apiVersion":"k8s.nginx.org/v1","kind":"VirtualServerRoute","metadata":{"annotations":{},"name":"frontend-air-viewer","namespace":"air-vi...
API Version:  k8s.nginx.org/v1
Kind:         VirtualServerRoute
Metadata:
  Creation Timestamp:  2020-02-17T07:10:40Z
  Generation:          1
  Resource Version:    30179
  Self Link:           /apis/k8s.nginx.org/v1/namespaces/air-viewer/virtualserverroutes/frontend-air-viewer
  UID:                 4a2a194a-1303-42c3-812a-1d35299a1bd0
Spec:
  Host:  framgia2c.mylabserver.com
  Subroutes:
    Action:
      Pass:  frontend-air-viewer
    Path:    /
  Upstreams:
    Name:     frontend-air-viewer
    Port:     80
    Service:  frontend-air-viewer
Events:
  Type     Reason                 Age    From                      Message
  ----     ------                 ----   ----                      -------
  Warning  NoVirtualServersFound  2m31s  nginx-ingress-controller  No VirtualServer references VirtualServerRoute air-viewer/frontend-air-viewer
  Warning  NoVirtualServersFound  2m30s  nginx-ingress-controller  No VirtualServer references VirtualServerRoute air-viewer/frontend-air-viewer
  Normal   AddedOrUpdated         31s    nginx-ingress-controller  Configuration for air-viewer/frontend-air-viewer was added or updated
  Normal   AddedOrUpdated         30s    nginx-ingress-controller  Configuration for air-viewer/frontend-air-viewer was added or updated
```

Check virtualserver air-viewer

```bash
kubectl describe virtualserver air-viewer -n air-viewer
```

output:

```
Name:         air-viewer
Namespace:    air-viewer
Labels:       <none>
Annotations:  kubectl.kubernetes.io/last-applied-configuration:
                {"apiVersion":"k8s.nginx.org/v1","kind":"VirtualServer","metadata":{"annotations":{},"name":"air-viewer","namespace":"air-viewer"},"spec":...
API Version:  k8s.nginx.org/v1
Kind:         VirtualServer
Metadata:
  Creation Timestamp:  2020-02-17T07:12:38Z
  Generation:          1
  Resource Version:    30469
  Self Link:           /apis/k8s.nginx.org/v1/namespaces/air-viewer/virtualservers/air-viewer
  UID:                 7867f15d-4b5e-4fad-b279-25217c7cc18c
Spec:
  Host:  framgia2c.mylabserver.com
  Routes:
    Path:   /api
    Route:  air-viewer/backend-air-viewer
    Path:   /
    Route:  air-viewer/frontend-air-viewer
  Tls:
    Secret:  air-viewer-secret
Events:
  Type    Reason          Age    From                      Message
  ----    ------          ----   ----                      -------
  Normal  AddedOrUpdated  3m12s  nginx-ingress-controller  Configuration for air-viewer/air-viewer was added or updated
  Normal  AddedOrUpdated  3m11s  nginx-ingress-controller  Configuration for air-viewer/air-viewer was added or updated
```

Check access from the browser

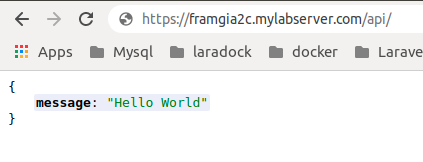

Visiting https://framgia2c.mylabserver.com/api/ reaches the backend service because of the /api prefix rule

output:

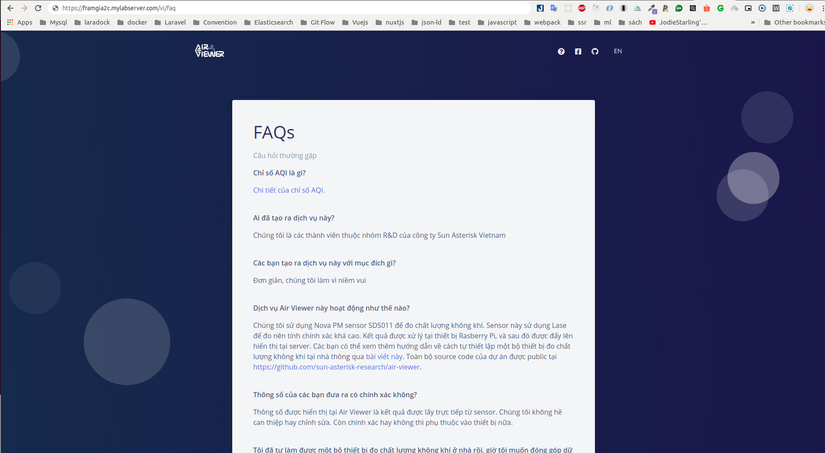

Visiting https://framgia2c.mylabserver.com/en/faq leads to the air-viewer FAQ page

output:

We do not currently have a Pi + sensor client collecting air data and sending it to this domain, so the data will be empty, like http://airviewer.sun-asterisk.vn/

Because this server is for learning purposes, it may only run for a few hours before being shut down, after which the domain name will no longer be accessible. Thank you for understanding.

A few small experiments

Experiment 1 (eliminating 1 worker node)

We shut down worker node 2 (domain framgia3c.mylabserver.com), which affects neither the current domain nor the master node. The app keeps running normally, and checking the pods shows that they have been moved to worker node 1 to replace the pods that were shut down.

Experiment 2 (completely removing the master node)

We have three nodes running, one master and two workers, and this time we shut down the master node. The app keeps running as usual. Only the calls that Nuxt.js makes to the Flask API on the server side in nuxtServerInit fail; the other services and APIs still work. It is like an organization whose leader takes a day off: the members keep working as usual, but if the schedule needs to change or a member runs into a problem (for example, a worker node shuts down at that moment), our website will certainly go down, because with the master node gone nothing coordinates the pods any more.

These two experiments give a picture of the zero-downtime behavior of Kubernetes
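To make the reasoning behind the two experiments concrete, here is a toy Python model. It assumes one simple rule: pods on a failed worker get replaced on a healthy worker only while the master (control plane) is running. The pod and node names are made up, and real Kubernetes does not literally move pods; its controllers create replacement pods and the scheduler places them.

```python
def reschedule(pods, nodes_up, control_plane_up):
    """Toy model: map each pod to the node it runs on after a failure.

    pods maps pod name -> node name. Pods on failed nodes are reassigned
    when the control plane is up, otherwise they are marked None (dead).
    """
    healthy = sorted(nodes_up)
    result = {}
    for index, (pod, node) in enumerate(sorted(pods.items())):
        if node in nodes_up:
            result[pod] = node  # untouched, keeps running
        elif control_plane_up and healthy:
            result[pod] = healthy[index % len(healthy)]  # replaced elsewhere
        else:
            result[pod] = None  # nothing reschedules it
    return result


# Hypothetical placement, for illustration only.
pods = {"frontend-a": "worker1", "frontend-b": "worker2", "backend-a": "worker2"}

# Experiment 1: worker2 goes down while the master is up.
exp1 = reschedule(pods, {"worker1"}, control_plane_up=True)

# Experiment 2: the master is down; workers alone keep serving...
exp2_workers_ok = reschedule(pods, {"worker1", "worker2"}, control_plane_up=False)
# ...but if worker2 also fails, its pods are lost.
exp2_worker_fails = reschedule(pods, {"worker1"}, control_plane_up=False)

print(exp1, exp2_workers_ok, exp2_worker_fails, sep="\n")
```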

End

This implementation is still incomplete. We also need to combine other DevOps tools for monitoring and logging, and to collect and analyze reports on the system's status. Many of these things are still quite vague to me, so I promise to come back to them in a future article.