Deepfake videos and fake calls keep scamming victims out of billions of dong with increasingly sophisticated tricks: how can you protect yourself?

- Tram Ho

What is Deepfake?

Even if you’re not a big fan of tech news, you have probably come across the word “deepfake”, because the concept has been covered heavily in the media recently.

According to Kaspersky (a Russian security company), “deepfake” combines two words: “deep”, from deep learning, and “fake”. Deepfake can be understood as an advanced artificial intelligence (AI) method that uses multiple layers of machine-learning algorithms to progressively extract higher-level features from raw input data, which lets it learn from unstructured data such as human faces. It can even collect data on people’s physical movements.

The image data is then processed further to create deepfake videos using a GAN (Generative Adversarial Network), a specialized kind of machine-learning system. In a GAN, two neural networks compete with each other: one learns the features of the training data (for example, face snapshots) and generates new data with the same characteristics (a new “photo”), while the other tries to tell the generated data apart from the real data.

The AI’s output is constantly tested and compared against the original training data, so the “fake” images it produces become more and more convincing. This makes deepfakes a bigger threat than ever. AI can spoof more than images: some deepfake technologies can also be used to fake voices.
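To make the adversarial training described above more concrete, here is a deliberately tiny sketch in Python with NumPy. It is an illustration of the GAN idea, not how real deepfake tools are built. Assumptions (none of these come from the article): the “real data” are single numbers drawn from a normal distribution with mean 4 instead of face photos; the generator has one parameter `b` and simply outputs `b` plus random noise; the discriminator is a one-feature logistic classifier; and the function name `train_toy_gan`, the learning rate and the `decay` penalty on the discriminator weight (added to damp the oscillation that simple GANs are prone to) are hypothetical choices. In each round, the discriminator first learns to tell real from fake, then the generator shifts its output toward whatever the discriminator currently judges to be “real”.

```python
import numpy as np


def sigmoid(s):
    """Logistic function: maps a score to a probability in (0, 1)."""
    return 1.0 / (1.0 + np.exp(-s))


def train_toy_gan(steps=3000, batch=128, lr=0.05, decay=1.0, seed=0):
    """Hypothetical toy 1-D GAN, for illustration only.

    Real data:      x ~ N(4, 1)
    Generator:      G(z) = b + z,  z ~ N(0, 1)   (a single parameter, b)
    Discriminator:  D(x) = sigmoid(w * x + c)  = estimated P(x is real)
    Returns the learned generator offset b.
    """
    rng = np.random.default_rng(seed)
    b = 0.0          # the generator starts far from the real mean of 4
    w, c = 0.0, 0.0  # discriminator parameters

    for _ in range(steps):
        # 1) Discriminator step: minimise -[log D(real) + log(1 - D(fake))]
        #    plus (decay / 2) * w**2, an assumed penalty that damps oscillation.
        real = rng.normal(4.0, 1.0, batch)
        fake = b + rng.normal(0.0, 1.0, batch)
        d_real = sigmoid(w * real + c)
        d_fake = sigmoid(w * fake + c)
        grad_w = np.mean((d_real - 1.0) * real) + np.mean(d_fake * fake) + decay * w
        grad_c = np.mean(d_real - 1.0) + np.mean(d_fake)
        w -= lr * grad_w
        c -= lr * grad_c

        # 2) Generator step: minimise -log D(fake), i.e. move the fakes
        #    toward the region the discriminator currently calls "real".
        fake = b + rng.normal(0.0, 1.0, batch)
        d_fake = sigmoid(w * fake + c)
        grad_b = np.mean((d_fake - 1.0) * w)
        b -= lr * grad_b

    return float(b)


if __name__ == "__main__":
    print(f"learned generator offset b = {train_toy_gan():.2f} (real mean is 4)")
```

Over many rounds the fake numbers drift toward the real ones until the discriminator can no longer separate them. Real systems replace these two one-line models with deep neural networks working on images or audio, but the alternating “discriminate, then fool” loop is the same basic idea.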

Using deepfakes to spread fake news

It is difficult to explain in technical detail how deepfakes work. Put simply, because a deepfake system learns from data on human faces, images and voices, it can attach person A’s face to person B’s body so realistically that it is hard to tell the difference just by looking.

In 2017, an AI startup called Lyrebird released voice copies of US leaders such as Mr. Trump, Mr. Obama and Mrs. Hillary Clinton. Since then, the quality has improved considerably, and the Lyrebird app has even been opened to the public.

Another example of the danger of deepfakes is a video made by actor Jordan Peele: his team of technicians combined real footage of Barack Obama with video editing and deepfake techniques to release a fake speech in which Mr. Obama used bad language to talk about Mr. Trump.

Shortly afterwards, Jordan Peele posted the two halves of the merged video side by side to warn people about the sophisticated fake-news tricks they may encounter on the internet. The actor’s team is said to have taken about 60 hours to create this fake but very realistic video.

Mr. Obama is not the only public figure to be faked with deepfakes. Even Mark Zuckerberg, founder of the social network Facebook, has been “faked”: starting from a simple speech, a deepfake video was created in which he appears to admit that Facebook steals user data and is controlling the future. Although the AI imitation of Zuckerberg’s voice in this video is not very close, the fake is still hard to spot.

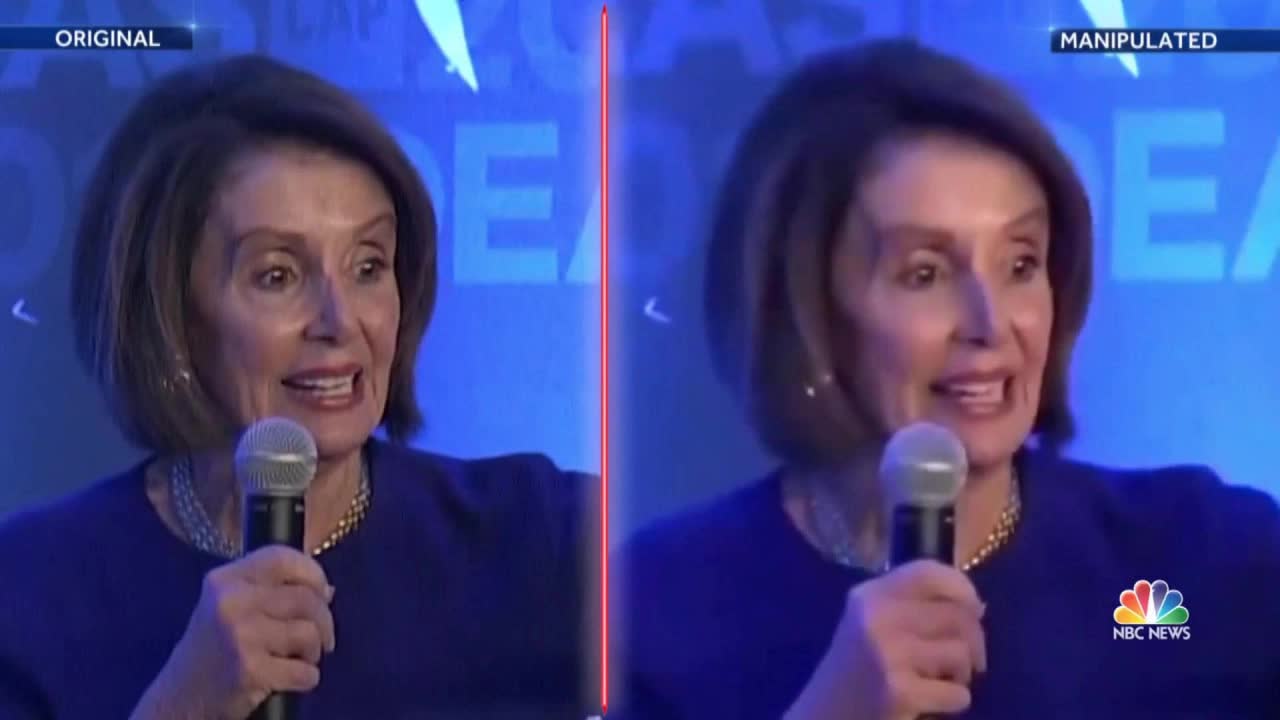

NBC News photo

All of the examples above are high-resolution, detailed videos, but if you think a fake has to be sharp to fool people, you are probably wrong. Nancy Pelosi was once the victim of a fake video that made her sound drunk. The video was of extremely poor quality, yet it quickly attracted millions of views on social networks, forcing Nancy Pelosi to issue an immediate correction.

Deepfake – Scam and Blackmail

Deepfakes have not been limited to political fake news or personal revenge. Fraudsters also use the technology to extort money and misappropriate assets.

The CEO of an energy company was scammed out of $243,000 using nothing more than a deepfake of his boss’s voice, the head of the parent company, making an urgent money-transfer request. The forgery was so convincing that the CEO had no time to become suspicious and did not think to cross-check; the money went not to headquarters but to a third-party bank account. The CEO only became suspicious when his “boss” requested another round of transfers. The fraud was uncovered, but it was too late to recover the $243,000 he had already transferred.

In France, a man named Gilbert Chikli impersonated Foreign Minister Jean-Yves Le Drian, meticulously recreating the minister’s office and its furniture, to defraud senior executives of millions of euros. Chikli was later accused of posing as the minister to demand ransom from wealthy individuals and corporate executives, supposedly to free French hostages in Syria, and was put on trial for his actions.

Facebook’s boss has also had both his face and his voice faked with deepfakes.

Blackmail with pornographic videos is a scenario many people foresaw as soon as deepfakes appeared. This technique has in fact been used to blackmail female reporters and journalists: in India, reporter Rana Ayyub had her face transplanted into pornographic videos, which the crooks then used to blackmail her.

As the technology advances and deepfakes become simpler and cheaper to produce, the danger grows every day. Without enough knowledge and information, it is difficult to recognize deepfakes.

How to protect yourself against deepfake?

Faced with increasingly sophisticated tricks, not every user has enough IT knowledge to recognize AI-generated fake videos and audio. Most security experts therefore offer advice on avoiding risky situations, above all by improving the security of online accounts.

Trust, but verify: If you receive a voice message, especially one asking to borrow money or personal property, call back on a phone number you know to be correct to confirm that your colleague or relative really sent the request. Do this even if the voice sounds very familiar and extremely lifelike.

Don’t rush to click links: When a loved one sends you a link with a strange structure, don’t rush to click that link or button. It may well be a trap set up so scammers can take over your online account. Check with the sender first.

Pay attention to the smallest details: If you receive a call or video call asking you to transfer money, even from someone close to you, carefully check whether the phone number, email or account actually matches the person making the request. Scammers often ask for funds to be sent to a third-party account or to an account with a similar name.

Restrict access to your voice and images: To create fake images and sounds, scammers need your recordings, photos or footage. To keep your image and audio data from being copied, limit your presence on social networks or make your account private, accepting requests only from friends you trust.

In addition, some security experts list the following signs that can help you spot a deepfake quickly:

– Jerky movement, like a glitchy video

– Lighting that changes from one frame to the next

– Skin tone that keeps changing

– Strange flickering

– Lips out of sync with the speech

– Digital artifacts in the image

– Low-quality audio and/or video

– The person talks continuously without blinking

Deepfake – Will this dangerous form of fraud become more common in the future?

As of June 2019, IBM had detected only 3,000 deepfake videos. By January 2020, that number had risen to 100,000, and by March 2020 more than a million deepfake videos were circulating on the internet.

According to another study, from Deeptrace, 15,000 deepfake videos had been created as of December 2018. The number rose to 558,000 by June 2019 and skyrocketed to over one million in February 2020.

CNBC photo

Deeptrace’s research also found that up to 96% of deepfake videos are created for illegal purposes. These numbers don’t lie: they point to a worrying outlook. As the technology develops and deepfakes become simpler and cheaper to access, fake news, scams and other deepfake abuses are likely to become rampant on the internet.

Therefore, be very careful on the internet, especially on social networking platforms, where we readily share a great deal of personal information that bad actors can easily exploit to commit fraud.

Source : Genk