Decoding the power of ChatGPT: the chatbot that scared Google turns out to be built on research by Google's own researchers

- Tram Ho

ChatGPT launched only at the end of November 2022, yet it has already sent shockwaves through artificial intelligence research in particular and the entire technology industry in general. Because it can present answers coherently and thoroughly, much like a real person, ChatGPT has surprised users worldwide and completely changed how they view this new AI technology.

Many investors see the rise of ChatGPT as the start of a technological revolution similar to that of the internet or the iPhone. This helps explain why, despite the industry-wide downturn, OpenAI is still attracting investment at a valuation of up to $29 billion. Capital is also pouring into generative AI startups.

In fact, AI and chatbots have been talked about for a long time, but only with the arrival of ChatGPT did people realize how much potential and influence these technologies could have on the world. So what sets ChatGPT apart from its predecessors, enough to make even a tech giant like Google tremble?

Few people know, however, that the heart of ChatGPT's ability to converse like a human was developed by Google researchers and published openly: the Transformer deep learning architecture.

This architecture is the foundation of well-known natural language processing models such as Google's BERT and OpenAI's GPT (short for Generative Pre-trained Transformer) family, which includes GPT-2 and GPT-3. GPT-3, in turn, is the foundation of today's famous ChatGPT chatbot.

Because the Transformer is not well known to the public, few people realize that it marked a turning point for natural language processing models, one that made a chatbot like ChatGPT possible. To understand why it matters, it helps to know how machines processed human language before the Transformer architecture was born.

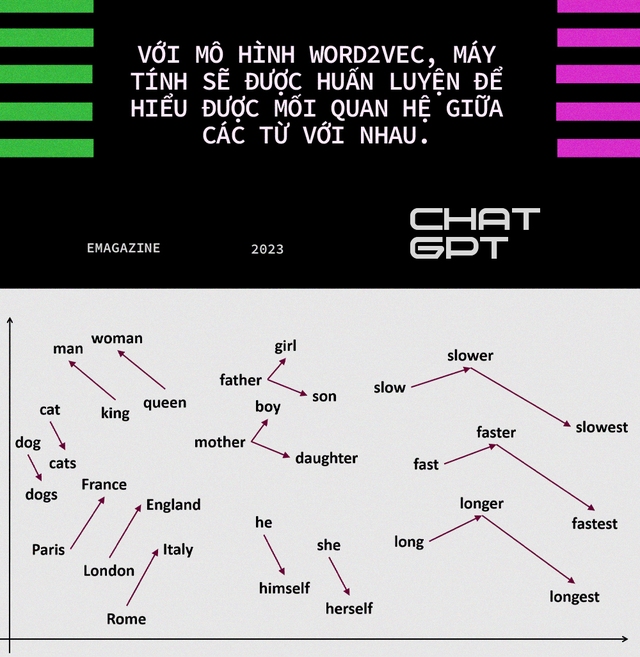

Earlier natural language models typically processed words sequentially. For example, the Word2Vec model learns which words are related, so that when you say "phone", the computer "associates" it with words such as "mobile phone", "iPhone", "Android" or "touch screen". Recurrent neural networks (RNNs) were then widely used to process the sentences of a text sequentially, one word at a time.
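Word2Vec represents each word as a vector of numbers, and words used in similar contexts end up with vectors that point in similar directions. The "association" step can be sketched as a cosine-similarity lookup. This is a minimal illustration, assuming tiny hand-made 3-dimensional vectors in place of real trained Word2Vec embeddings; the `EMBEDDINGS` table and the `most_similar` helper are hypothetical.

```python
import math

# Hypothetical toy "embeddings". Real Word2Vec vectors are learned from text
# and usually have hundreds of dimensions; these numbers are made up.
EMBEDDINGS = {
    "phone":        [0.90, 0.80, 0.10],
    "mobile phone": [0.85, 0.75, 0.15],
    "iphone":       [0.80, 0.90, 0.05],
    "banana":       [0.05, 0.10, 0.95],
}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def most_similar(word, k=2):
    """Return the k words whose vectors point most nearly the same way as `word`."""
    target = EMBEDDINGS[word]
    scores = [
        (other, cosine_similarity(target, vec))
        for other, vec in EMBEDDINGS.items()
        if other != word
    ]
    scores.sort(key=lambda pair: pair[1], reverse=True)
    return scores[:k]

print(most_similar("phone"))
```

With these toy vectors, "mobile phone" and "iphone" come out as the closest matches to "phone", while "banana" scores far lower.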

But training this way is very slow and limits how much data can be used, which makes it hard for the computer to connect the meaning of each word to the context of the whole passage or article.
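The bottleneck shows up clearly in code: each hidden state is computed from the one before it, so the words of a sentence must be processed one after another. A minimal sketch, assuming a toy RNN with a single scalar state and made-up weights `w_h` and `w_x` (real RNNs use learned weight matrices and vector states):

```python
import math

def rnn_step(hidden, x, w_h=0.5, w_x=1.0):
    """One step of a toy scalar RNN: the new state depends on the previous state."""
    return math.tanh(w_h * hidden + w_x * x)

def run_rnn(inputs):
    """Process inputs strictly in order; step t cannot start until step t-1 is done."""
    hidden = 0.0
    states = []
    for x in inputs:
        hidden = rnn_step(hidden, x)
        states.append(hidden)
    return states

# Hypothetical numeric "words"; a real model would feed in embedding vectors.
sentence = [0.2, -0.1, 0.4, 0.3]
states = run_rnn(sentence)
print(len(states))  # one hidden state per word
```

Because the loop carries `hidden` from one iteration to the next, the steps cannot run in parallel, which is why training slows down as texts get longer.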

In 2018, a new model called ELMo (Embeddings from Language Models) was introduced. It was one of the first models to move beyond strictly one-directional processing, in an attempt to mimic how humans read and process text.

Built on RNNs, ELMo processes text in two directions, reading from beginning to end and from end to beginning, and then combines the two passes to understand its meaning. Although this was a big step forward in language processing, ELMo was still hard to train on increasingly long sentences and paragraphs.
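The two-direction idea can be sketched by running one pass forward, one pass over the reversed text, and pairing the results word by word. This is a simplified illustration, assuming toy scalar RNNs instead of ELMo's actual stacked LSTM layers; ELMo also learns separate weights for each direction, whereas here both passes share the same made-up weights.

```python
import math

def run_rnn(inputs, w_h=0.5, w_x=1.0):
    """Toy scalar RNN that returns one hidden state per input, left to right."""
    hidden, states = 0.0, []
    for x in inputs:
        hidden = math.tanh(w_h * hidden + w_x * x)
        states.append(hidden)
    return states

def bidirectional(inputs):
    """Run a forward and a backward pass, then pair the two states for each word."""
    forward = run_rnn(inputs)
    # Reverse the input, run the RNN, then flip the result back
    # so that backward[i] lines up with word i.
    backward = run_rnn(inputs[::-1])[::-1]
    return [(f, b) for f, b in zip(forward, backward)]

sentence = [0.2, -0.1, 0.4, 0.3]  # hypothetical numeric "words"
for i, (f, b) in enumerate(bidirectional(sentence)):
    print(i, round(f, 3), round(b, 3))
```

Each word ends up with one state summarizing what came before it and one summarizing what comes after it, but both passes are still sequential loops.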

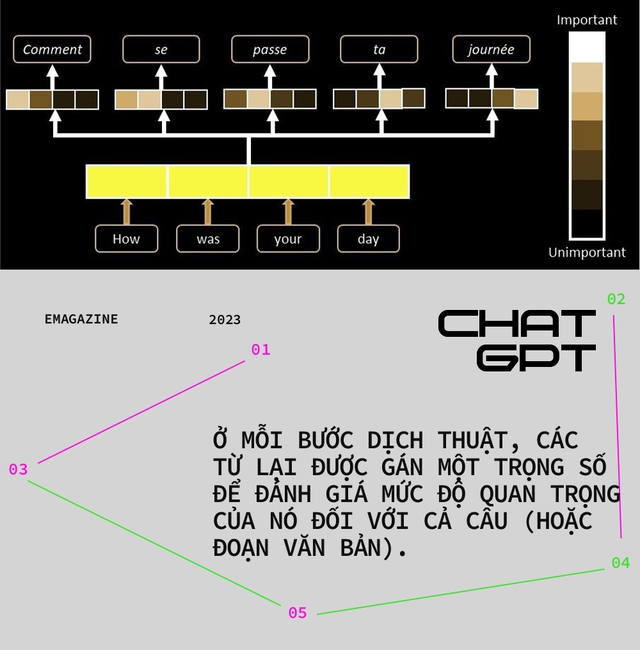

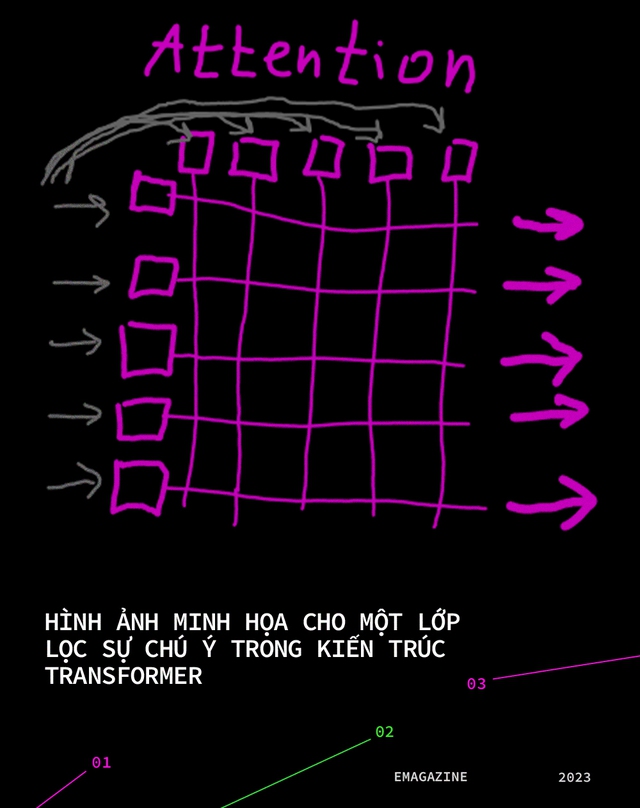

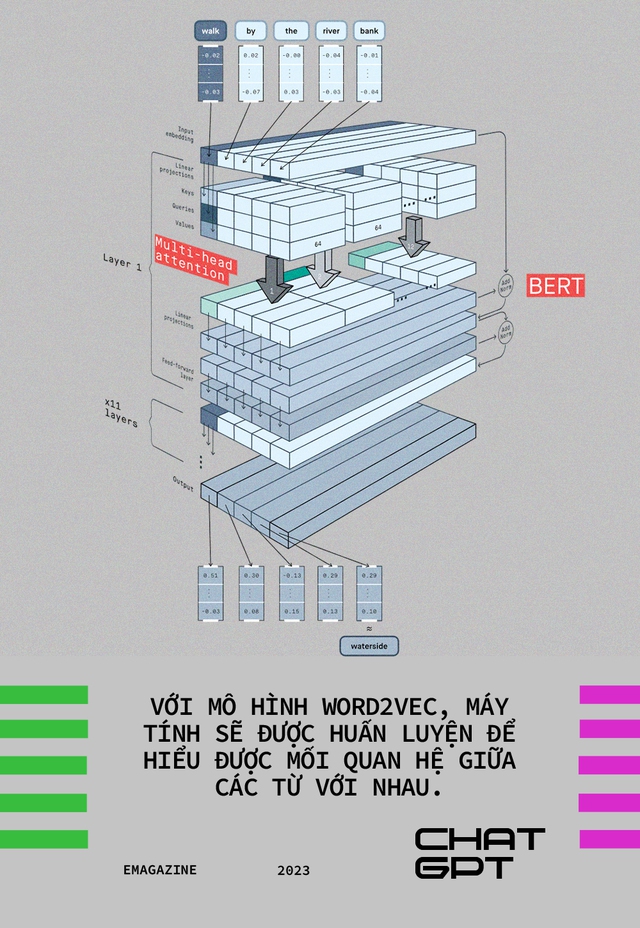

Unlike earlier models, which processed text word by word and sentence by sentence, Google's Transformer architecture is based on an attention mechanism. Attention layers let a language model weigh how important each word in a piece of text is, based on how relevant it is to the other words in the text.
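The standard formulation used in Transformers is scaled dot-product attention, softmax(QKᵀ/√d)·V: each word's query is scored against every word's key, the scores become weights, and the output is a weighted average of the values. A minimal single-head sketch in plain Python, assuming toy 2-dimensional vectors and omitting the learned projection matrices a real Transformer applies to produce Q, K and V:

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors.

    For each query, score every key, normalize the scores with softmax,
    and return the weighted average of the values.
    """
    d = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs

# Three hypothetical word vectors. In self-attention the queries, keys and
# values all come from the same sentence.
words = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out = attention(words, words, words)
print(len(out), len(out[0]))
```

Unlike the RNN loop, no output here depends on a previous output, so all positions can be computed in parallel; real implementations do this with a few matrix multiplications.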

Each attention layer can be thought of as one more pass of learning over the input, looking at different parts of the sentence to uncover additional semantic or syntactic information. Because these layers examine all the words at once instead of one at a time, the model does not have to work through a sentence word by word, so training does not slow down the way sequential models do as sentences get longer. To learn more from new data, a model can simply add more layers. Thanks to these layers, models can scan the entire text simultaneously and grasp its meaning.
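Adding layers amounts to applying the same kind of layer to the whole sequence again and again. A heavily simplified sketch, assuming no learned weights and omitting the feed-forward sublayer that real Transformer layers also contain; each hypothetical layer here keeps only self-attention, a residual connection and layer normalization:

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(xs):
    """Every position attends to every position of the same sequence."""
    d = len(xs[0])
    out = []
    for q in xs:
        weights = softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in xs])
        out.append([sum(w * x[j] for w, x in zip(weights, xs)) for j in range(d)])
    return out

def layer_norm(x, eps=1e-5):
    """Rescale one vector to zero mean and unit variance (no learned gain or bias)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]

def attention_layer(xs):
    """One simplified layer: self-attention, residual add, then layer norm."""
    attended = self_attention(xs)
    return [layer_norm([a + b for a, b in zip(x, y)]) for x, y in zip(xs, attended)]

def encoder(xs, num_layers):
    """Stack `num_layers` layers; each one re-reads the whole sequence at once."""
    for _ in range(num_layers):
        xs = attention_layer(xs)
    return xs

# Three hypothetical 4-dimensional word vectors.
sentence = [[1.0, 0.0, 0.5, 0.2], [0.0, 1.0, 0.3, 0.9], [0.4, 0.4, 1.0, 0.0]]
for depth in (1, 12):
    print(depth, [round(v, 2) for v in encoder(sentence, depth)[0]])
```

Depth is just the `num_layers` argument: going from 12 layers to 96 changes how many times the loop runs, not how each layer works.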

For comparison, the well-known BERT language model (in its large version) has 24 attention layers, the smallest version of GPT-2, released by OpenAI in early 2019, has 12, and GPT-3, the model behind ChatGPT today, has as many as 96.
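Layer count and layer width together largely determine model size. A common rough estimate for the weights inside the stacked layers is 12 · L · d², where L is the number of layers and d the hidden size. It counts about 4d² per layer for the attention projections and 8d² for a feed-forward block four times as wide, and it ignores embeddings, biases and normalization parameters. A minimal sketch of that back-of-the-envelope arithmetic, using the published hidden sizes of each model:

```python
def approx_transformer_params(num_layers, d_model):
    """Rough weight count of the stacked layers: about 12 * layers * d_model**2.

    Per layer: 4 * d_model**2 for the attention projections (Q, K, V, output)
    plus 8 * d_model**2 for a feed-forward block with hidden size 4 * d_model.
    Embeddings, biases and layer-norm parameters are ignored.
    """
    return 12 * num_layers * d_model ** 2

# (layers, hidden size) for each model
models = {
    "BERT-large": (24, 1024),
    "GPT-2 (smallest)": (12, 768),
    "GPT-3 (175B)": (96, 12288),
}
for name, (layers, d_model) in models.items():
    print(f"{name}: ~{approx_transformer_params(layers, d_model) / 1e6:,.0f}M")
```

This prints roughly 302M, 85M and 173,946M. The GPT-3 estimate (about 174 billion) lands close to its reported 175 billion parameters. The published totals for the two smaller models are higher, largely because of the embedding tables the formula leaves out.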

Another benefit of the Transformer architecture is that it works well with pre-trained models, something that only emerged with ELMo. The idea is similar to ImageNet, a huge database of pre-labeled images: models pre-trained on it mean that image recognition systems don't have to be trained from scratch for every new task.

Language data, however, is more complex and harder to process than image recognition data, so language models need more data to learn the relationships between words and phrases. Without pre-trained models, only tech giants would have the resources to process that data and train models on it; for small startups, it would be impossible or painfully slow.

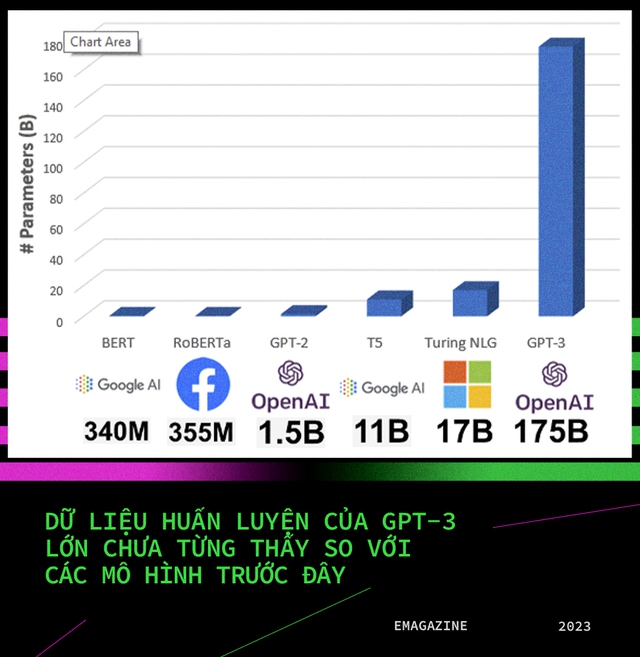

GPT-3 was trained on 45TB of data and has 175 billion parameters, far more than earlier well-known models such as Google's BERT, Facebook's RoBERTa or Microsoft's Turing NLG. GPT-3's training data consists of more than 8 million documents with 10 billion words. This is the foundation of ChatGPT's ability to hold conversations that have surprised so many people.

Another benefit of pre-trained models is that they can be fine-tuned for specific tasks without much data. For example, a BERT model needs only a few thousand samples to be adapted to a new task. A model trained on as much data as GPT-3 needs only a small amount of additional training data for ChatGPT to take on a new task, such as programming.
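Why can a handful of labelled examples be enough? A simplified sketch: the expensive pre-trained part is kept fixed and only a small new output layer is trained on top of it. This is the "linear probe" variant of transfer learning rather than full fine-tuning (which also updates the pre-trained weights). The `FEATURES` below are hypothetical stand-ins for what a model like BERT would produce, and the sentiment labels are made up.

```python
import math

# Hypothetical frozen "pre-trained" features for six labelled examples
# (1 = positive review, 0 = negative review). Real features would come
# from a pre-trained model and have hundreds of dimensions.
FEATURES = [[2.0, 0.5], [1.5, 1.0], [1.8, 0.2], [-1.0, 0.3], [-1.5, -0.5], [-2.0, 0.1]]
LABELS = [1, 1, 1, 0, 0, 0]

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train_head(features, labels, lr=0.5, epochs=200):
    """Train only a small logistic-regression head; the features stay fixed."""
    w = [0.0] * len(features[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(features, labels):
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            error = p - y  # gradient of the log-loss with respect to the logit
            w = [wi - lr * error * xi for wi, xi in zip(w, x)]
            b -= lr * error
    return w, b

def predict(w, b, x):
    return 1 if sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) >= 0.5 else 0

w, b = train_head(FEATURES, LABELS)
print([predict(w, b, x) for x in FEATURES])
```

Only three numbers (two weights and a bias) are learned here, which is why so little data suffices; the heavy lifting was done when the features were pre-trained.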

In fact, these factors are only the technological advantages over earlier chatbots and AI systems. Creating ChatGPT, with its potential to change the entire technology industry and even threaten Google's current position, also took the team of AI researchers inside OpenAI. They are the ones behind the core of the difference ChatGPT brings.

More than a chatbot that communicates like a real person, ChatGPT opens a new door to the potential of artificial intelligence and to changes across many economic sectors in the future. That is why OpenAI's $29 billion valuation is not hard to understand, even without a specific business model.

Source: Genk