What is autoscaling?

Autoscaling automatically increases or decreases the computing resources allocated to an app whenever needed. This makes it possible to build cloud systems that scale 24/7 to match actual usage.

What is HPA?

HPA stands for Horizontal Pod Autoscaler: a controller that scales Pods horizontally, i.e. it changes the number of Pod replicas of a workload such as a Deployment.

HPA is economical and automatic: capacity is increased and decreased to follow the load, which suits systems whose load (e.g. the number of end users) is highly variable and unpredictable.

Compared with the “traditional” model of a fixed number of pods, autoscaling adapts to demand. For example, when traffic drops at night, the number of pods can be reduced, and pods are added again when traffic rises unexpectedly.

Setting up the HPA requires:

1. Metrics Server

Metrics Server aggregates resource usage metrics (CPU and memory) of containers and pods for the autoscaling pipelines built into Kubernetes (K8S).

As the diagram above shows, the flow is:

- Metrics (RAM and CPU usage) are collected from the pods

- These metrics are exposed to the kubelet

- Metrics Server collects the metrics through the kubelet

- Metrics Server exposes the metrics through the API server (the Metrics API); HPA calls this API to get the metrics and compute how many pods to scale to.
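HPA reads these numbers from the Metrics API (`metrics.k8s.io`) served through the API server; you can see the raw data with `kubectl get --raw /apis/metrics.k8s.io/v1beta1/namespaces/default/pods`. The Python sketch below sums per-pod CPU usage from such a response. The sample payload and pod names are hypothetical and trimmed (real responses also carry fields such as `timestamp` and `window`), and the quantity parser only covers the common CPU suffixes.

```python
import json
from typing import Dict


def cpu_to_millicores(quantity: str) -> float:
    """Convert a Kubernetes CPU quantity string to millicores.

    Covers the common suffixes: "n" (nanocores), "u" (microcores),
    "m" (millicores) and no suffix (whole cores). Other forms are not handled.
    """
    if quantity.endswith("n"):
        return int(quantity[:-1]) / 1_000_000
    if quantity.endswith("u"):
        return int(quantity[:-1]) / 1_000
    if quantity.endswith("m"):
        return float(quantity[:-1])
    return float(quantity) * 1000


def cpu_per_pod(pod_metrics_list: dict) -> Dict[str, float]:
    """Sum the CPU usage of all containers in each pod (millicores)."""
    return {
        item["metadata"]["name"]: sum(
            cpu_to_millicores(c["usage"]["cpu"]) for c in item["containers"]
        )
        for item in pod_metrics_list["items"]
    }


# Hypothetical, trimmed response shaped like a metrics.k8s.io/v1beta1 PodMetricsList.
SAMPLE = json.loads("""
{
  "kind": "PodMetricsList",
  "apiVersion": "metrics.k8s.io/v1beta1",
  "items": [
    {
      "metadata": {"name": "php-apache-a"},
      "containers": [
        {"name": "php", "usage": {"cpu": "250m", "memory": "12Mi"}}
      ]
    },
    {
      "metadata": {"name": "php-apache-b"},
      "containers": [
        {"name": "php", "usage": {"cpu": "100m", "memory": "10Mi"}},
        {"name": "sidecar", "usage": {"cpu": "50000000n", "memory": "4Mi"}}
      ]
    }
  ]
}
""")

print(cpu_per_pod(SAMPLE))  # {'php-apache-a': 250.0, 'php-apache-b': 150.0}
```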

Note: Metrics Server is meant only for autoscaling. For example, don't use it as a monitoring solution for the system.

Install Metrics Server:

```shell
kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.3.6/components.yaml
```

For kops:

If you use kops to manage your cluster, you need to enable an additional configuration to allow Metrics Server to run:

https://github.com/kubernetes/kops/tree/master/addons/metrics-server

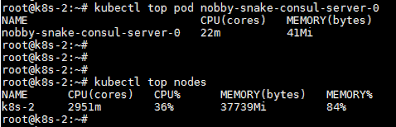

Check your Metrics Server installation:

Once Metrics Server is installed, the `kubectl top` command becomes available. It shows the current resource usage of nodes and pods. If the commands below return an error instead of metrics, the Metrics Server installation failed.

```shell
kubectl top node
kubectl top pod
```

2. Cluster Auto-Scaler

As HPA increases the number of pods, it becomes clear that the nodes also need to be scaled out to accommodate the new pods.

Cluster Auto-Scaler is a Kubernetes component responsible for increasing or decreasing the number of nodes to match the resource needs of the running pods.

Cluster Auto-Scaler automatically adjusts the size of the Kubernetes cluster (i.e. the number of nodes) when one of the following conditions is met:

- Some pods fail to run in the cluster due to insufficient resources.

- Some nodes in the cluster are underutilized for an extended period, and their pods can be moved to other existing nodes that have enough spare resources.
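The two conditions above can be sketched as a toy decision model in Python. This is a hypothetical simplification, not Cluster Auto-Scaler's code: it only looks at CPU requests, uses a first-fit placement check instead of simulating the real scheduler, and ignores memory, PodDisruptionBudgets and the waiting period before a node is removed (`--scale-down-unneeded-time`, 10 minutes by default). The `threshold=0.5` default mirrors Cluster Auto-Scaler's `--scale-down-utilization-threshold`, where utilization means requested resources divided by node allocatable, not actual usage. Node names and sizes are made up.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    name: str
    allocatable_m: int  # CPU available to pods on this node (millicores)
    pod_requests_m: List[int] = field(default_factory=list)  # CPU requests of its pods

    @property
    def free_m(self) -> int:
        return self.allocatable_m - sum(self.pod_requests_m)

    @property
    def utilization(self) -> float:
        # Requested CPU / allocatable CPU (based on requests, not live usage).
        return sum(self.pod_requests_m) / self.allocatable_m


def fits(requests: List[int], free: List[int]) -> bool:
    """First-fit-decreasing check: can every request be placed into the free capacity?"""
    free = list(free)  # work on a copy
    for request in sorted(requests, reverse=True):
        for i, capacity in enumerate(free):
            if capacity >= request:
                free[i] -= request
                break
        else:
            return False  # this pod fits nowhere
    return True


def needs_scale_up(pending_requests_m: List[int], nodes: List[Node]) -> bool:
    """Condition 1: some pending pod cannot be scheduled on any existing node."""
    return not fits(pending_requests_m, [n.free_m for n in nodes])


def scale_down_candidates(nodes: List[Node], threshold: float = 0.5) -> List[str]:
    """Condition 2: under-utilized nodes whose pods fit on the remaining nodes."""
    candidates = []
    for node in nodes:
        others = [n.free_m for n in nodes if n is not node]
        if node.utilization < threshold and fits(node.pod_requests_m, others):
            candidates.append(node.name)
    return candidates


nodes = [
    Node("node-a", 2000, [1500]),
    Node("node-b", 2000, [300]),
    Node("node-c", 2000, [1200, 500]),
]
print(needs_scale_up([1800], nodes))  # True: no node has 1800m free
print(needs_scale_up([400], nodes))   # False: node-a has 500m free
print(scale_down_candidates(nodes))   # ['node-b']
```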

https://github.com/kubernetes/autoscaler/tree/master/cluster-autoscaler

Install Cluster Auto-Scaler:

Instructions for installing Cluster Auto-Scaler on each platform are in the documents below:

- GCE https://kubernetes.io/docs/concepts/cluster-administration/cluster-management/

- GKE https://cloud.google.com/container-engine/docs/cluster-autoscaler

- AWS https://github.com/kubernetes/autoscaler/blob/master/cluster-autoscaler/cloudprovider/aws/README.md

- Azure https://github.com/kubernetes/autoscaler/blob/master/cluster-autoscaler/cloudprovider/azure/README.md

- Alibaba Cloud https://github.com/kubernetes/autoscaler/blob/master/cluster-autoscaler/cloudprovider/alicloud/README.md

- OpenStack Magnum https://github.com/kubernetes/autoscaler/blob/master/cluster-autoscaler/cloudprovider/magnum/README.md

- DigitalOcean https://github.com/kubernetes/autoscaler/blob/master/cluster-autoscaler/cloudprovider/digitalocean/README.md

Check the Cluster Auto-Scaler installation:

Deploy an application and scale it to more pods than the current nodes can hold. If Cluster Auto-Scaler adds a new node, and removes it again after you reduce the number of pods, the installation works.

3. Set resource requests/limits and define liveness/readiness probes

HPA calculates CPU utilization as a percentage of each pod's CPU request, so it is important to understand how to choose appropriate request/limit values for each pod. Refer to [this article](https://kubernetes.io/docs/concepts/configuration/manage-resources-containers/) for the background you need.
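To see why the request values matter, here is a minimal Python sketch of a request-relative utilization calculation, assuming utilization is the pods' summed CPU usage divided by their summed CPU requests. The function name and the usage numbers are hypothetical.

```python
from typing import Sequence


def average_cpu_utilization(usage_m: Sequence[float], request_m: Sequence[float]) -> float:
    """Average CPU utilization (%) of a set of pods, relative to their CPU requests.

    usage_m[i] and request_m[i] are pod i's CPU usage and CPU request in millicores
    (a pod's request is the sum of its containers' requests).
    """
    if len(usage_m) != len(request_m) or not usage_m:
        raise ValueError("need one usage and one request value per pod")
    if any(r <= 0 for r in request_m):
        # Without a CPU request there is nothing to take a percentage of.
        raise ValueError("every pod needs a CPU request to compute utilization")
    return 100.0 * sum(usage_m) / sum(request_m)


usage = [150, 100, 50]  # hypothetical measured usage of three pods (millicores)
print(average_cpu_utilization(usage, [200, 200, 200]))  # 50.0 with 200m requests
print(average_cpu_utilization(usage, [500, 500, 500]))  # 20.0 with 500m requests
```

The same load reads as 50% with 200m requests but only 20% with 500m requests. Against a 50% target, the first case sits on target while the second would lead HPA to remove pods, so the request values directly drive scaling decisions.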

A pod can be running while the app inside it is broken. If Kubernetes cannot detect this (through liveness and readiness probes), the HPA mechanism can be misled and have trouble determining how many pods to run. See more here.

4. The overall scaling cycle

- Metrics Server aggregates metrics from the running pods.

- HPA checks the metrics every 15 seconds (by default); if the value deviates from the target set in the HPA, it increases or decreases the number of pods accordingly.

- In the case of scale-up, the Kubernetes scheduler places the new pods onto nodes that have enough free resources for what each pod requests.

- If no node has enough resources, Cluster Auto-Scaler increases the number of nodes so the pending pods can be scheduled.

- In the case of scale-down, HPA reduces the number of pods.

- Cluster Auto-Scaler then looks for nodes that are mostly “free”: if the pods on a node X can be moved to other nodes with spare resources, they are rescheduled there and node X is removed from the cluster.
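The cycle above can be illustrated with a small Python simulation. Everything in it is a hypothetical simplification: the load is assumed to spread evenly over the pods, each step stands for one HPA sync period, the node count is simply "enough nodes to fit all pod requests", and all numbers (200m requests, 1000m nodes, 50% target, 1 to 10 replicas) are made up for the example.

```python
import math

# Hypothetical setup (all values are assumptions for illustration).
POD_CPU_REQUEST_M = 200    # each pod requests 200m CPU
NODE_ALLOCATABLE_M = 1000  # each node can host 1000m of pod requests
TARGET_UTILIZATION = 50    # HPA target: 50% of the request
MIN_REPLICAS, MAX_REPLICAS = 1, 10


def hpa_step(replicas: int, load_m: int) -> int:
    """One HPA evaluation: observe utilization, compute the new replica count."""
    utilization = 100.0 * load_m / (replicas * POD_CPU_REQUEST_M)
    desired = math.ceil(replicas * utilization / TARGET_UTILIZATION)
    return max(MIN_REPLICAS, min(MAX_REPLICAS, desired))


def nodes_needed(replicas: int) -> int:
    """Simplified Cluster Auto-Scaler view: enough nodes to fit every pod's request."""
    pods_per_node = NODE_ALLOCATABLE_M // POD_CPU_REQUEST_M
    return math.ceil(replicas / pods_per_node)


def simulate(load_series_m, replicas: int = 2):
    history = []
    for load_m in load_series_m:  # one entry per HPA sync period
        replicas = hpa_step(replicas, load_m)
        history.append((load_m, replicas, nodes_needed(replicas)))
    return history


for load_m, replicas, nodes in simulate([400, 1200, 1200, 300]):
    print(f"load={load_m}m -> pods={replicas}, nodes={nodes}")
```

The traffic spike pushes the pods to the 10-replica cap and the cluster to 2 nodes; when the load falls, both shrink again. Real clusters react more slowly than this sketch: HPA applies a scale-down stabilization window (5 minutes by default) and Cluster Auto-Scaler waits before removing an unneeded node.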

How does HPA calculate to scale?

https://kubernetes.io/docs/tasks/run-application/horizontal-pod-autoscale-walkthrough/

HPA automatically scales the number of pods up or down based on the collected CPU and memory usage metrics (or a custom metric that you define yourself, although CPU and memory are the most common).

By default, HPA checks the CPU/memory usage of the current pods every 15 seconds and compares it with the desired target set in the HPA.

The specific formula is as follows:

$$\text{desiredReplicas} = \left\lceil \text{currentReplicas} \times \frac{\text{currentMetricValue}}{\text{desiredMetricValue}} \right\rceil$$

where ceil ($\lceil \cdot \rceil$) rounds up to the nearest integer.

For example, suppose you want RAM usage to stay at 500 MB, demand rises to 700 MB, and there is currently 1 pod. Then:

$$\text{desiredReplicas} = \left\lceil 1 \times \frac{700}{500} \right\rceil = \lceil 1.4 \rceil = 2$$

In other words, the number of pods needed is proportional to the current metric value: when the measured value is above the target, the system is overloaded and needs to scale up; when it is below the target, it can scale down.
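The formula translates directly into Python. This is a sketch, not the controller's code: it adds the skip-if-close rule from the Kubernetes walkthrough (no scaling when the ratio is within a tolerance of 1.0, 0.1 by default) and clamps the result to `minReplicas`/`maxReplicas`, whose defaults of 1 and 10 here are borrowed from the example manifest in this article. It ignores stabilization windows and the special handling of not-ready pods or missing metrics.

```python
import math


def desired_replicas(current_replicas: int, current_value: float, desired_value: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)."""
    ratio = current_value / desired_value
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to the target: no change
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))  # respect min/max replicas


print(desired_replicas(1, 700, 500))  # 2: the memory example above, ceil(1.4)
print(desired_replicas(4, 520, 500))  # 4: ratio 1.04 is within the 10% tolerance
print(desired_replicas(4, 100, 500))  # 1: load dropped, ceil(0.8), scale down
```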

HPA setup file (HPA Manifest):

Finally, here is an example HPA manifest:

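```yaml
# autoscaling/v2 requires Kubernetes 1.23+; older clusters use autoscaling/v2beta2,
# which was removed in Kubernetes 1.26.
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: php-apache
spec:
  scaleTargetRef:          # the workload whose replica count HPA manages
    apiVersion: apps/v1
    kind: Deployment
    name: php-apache
  minReplicas: 1
  maxReplicas: 10
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 50   # target: average CPU at 50% of the pods' requests
```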

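In this example, HPA keeps the average CPU utilization of the php-apache pods (measured against their CPU requests) around 50%: it adds pods when the average rises above 50% and removes pods when it falls below, always staying between 1 and 10 replicas. Small deviations within the default 10% tolerance do not trigger scaling.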

Article Source: https://medium.com/devops-for-zombies/understanding-k8s-autoscale-f8f3f90938f4