Continuing the Guideline Settings series on the basic function modules of Amazon Web Services, this article introduces the settings for S3 (Simple Storage Service) on AWS, starting with the concept of S3.

1. Overview of S3 (Simple Storage Service)

1.1 Definition of S3

In the most general terms, S3 is an online storage service that can hold very large amounts of data, which users can access from almost any device.

- Amazon S3 has a simple web service interface through which users can store and retrieve any amount of data, at any time, from anywhere on the web.

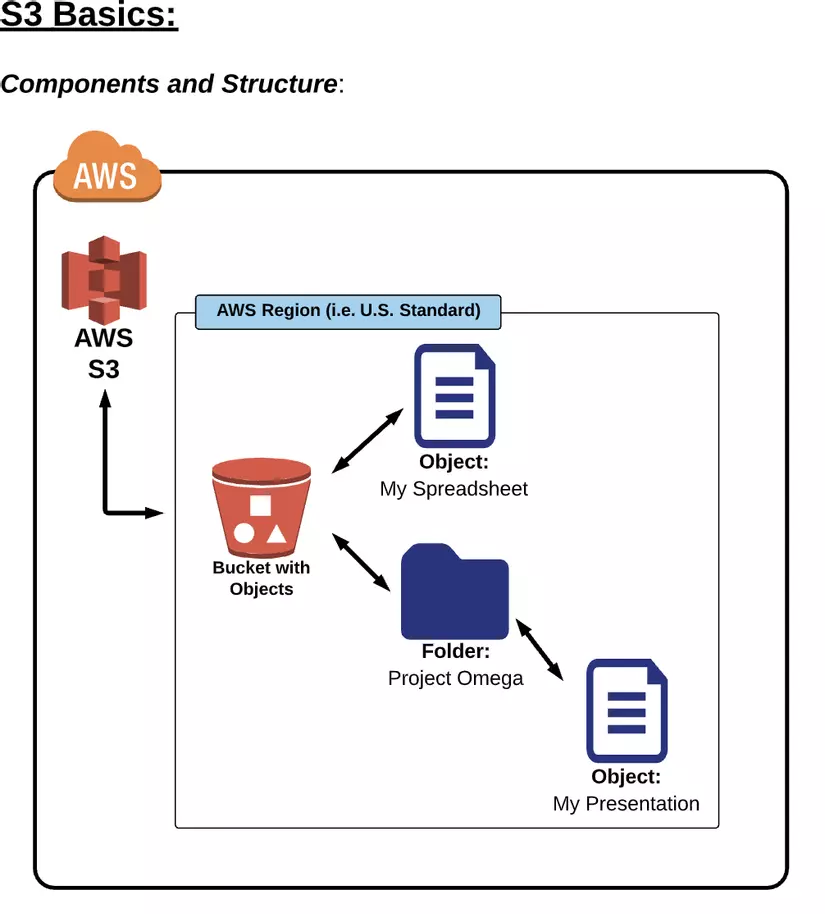

1.2 Components and Structures of S3

S3 Basics:

- S3: Simple Storage Service

- This is the primary storage service of AWS

- Users can store any file type on S3

S3 Buckets:

- The root-level “folders” that a user creates in S3 are called buckets

- Any “subfolder” that a user creates inside a bucket is called a folder

S3 Objects:

- Files that are stored in a bucket are called objects

S3 Regions:

- When creating a bucket, we have to choose a specific region for it. Any data we upload to the S3 bucket is then stored in the data centers of the region we selected.

- AWS recommends choosing the geographically closest region, to minimize data transfer time

- If you are serving files to customers in a particular part of the world, choose the region closest to those customers

1.3 Pricing / Cost Overview

The Free Tier applies to S3. How Amazon charges for using S3:

1.3.1. Storage Cost:

- Applies to data stored on S3

- Charge is based on GB used

- The price per GB may vary by region and storage class.

1.3.2. Request Pricing (moving data in / out of S3):

- PUT

- COPY

- POST

- LIST

- GET

- Lifecycle Transitions Request

- Data Retrieval

- Data Archival

- Data Restoration

S3 pricing details: https://aws.amazon.com/s3/pricing/
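The two cost components above can be combined into a rough monthly estimate. The sketch below is only an illustration: the function name and the rates passed to it are placeholders, not real AWS prices (the actual rates depend on region and storage class and are listed on the pricing page), and it assumes requests are billed per 1,000. Retrieval, archival and restoration charges are left out.

```python
def estimate_monthly_cost(stored_gb, price_per_gb, request_count, price_per_1000_requests):
    """Return a rough monthly cost: storage cost plus request cost."""
    storage_cost = stored_gb * price_per_gb
    request_cost = (request_count / 1000) * price_per_1000_requests
    return round(storage_cost + request_cost, 2)


# Placeholder rates for illustration only; look up the real ones on the pricing page.
print(estimate_monthly_cost(stored_gb=100, price_per_gb=0.02,
                            request_count=50_000, price_per_1000_requests=0.005))
```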

2. Buckets and Folders:

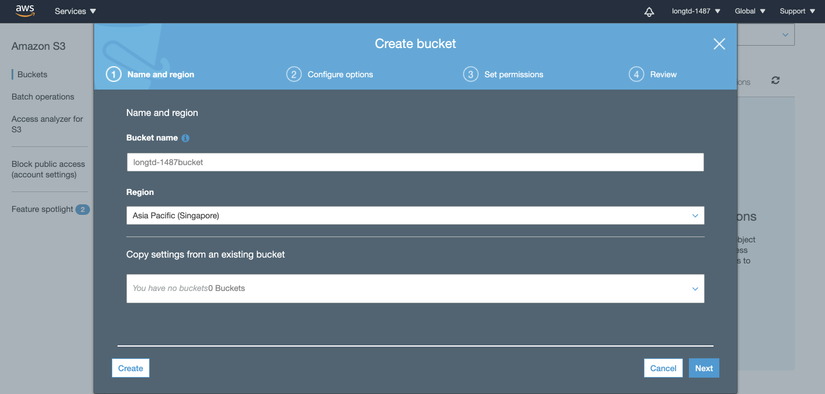

2.1 Initialize an S3 Bucket:

2.1.1. Select Bucket name: The bucket name must follow these rules:

- Bucket names must be globally unique across all AWS accounts

- The bucket name must be 3 to 63 characters long

- Bucket names can only contain lowercase letters, numbers, periods “.” and hyphens “-”

- The bucket name must not be formatted as an IP address (e.g., 192.168.5.4)
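The naming rules above can be checked locally before trying to create a bucket. The following is a minimal sketch, not an official validator: the function name is made up for illustration, it assumes periods are allowed in names (which is why the IP address rule matters), and it also assumes the name must start and end with a letter or number. Global uniqueness cannot be checked offline, so it is not covered.

```python
import re

# 3 to 63 characters: lowercase letters, digits, periods and hyphens,
# starting and ending with a letter or digit (assumed extra rule).
_BUCKET_NAME = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")
# Four groups of 1 to 3 digits separated by periods, e.g. 192.168.5.4
_IP_ADDRESS = re.compile(r"\d{1,3}(\.\d{1,3}){3}")


def is_valid_bucket_name(name: str) -> bool:
    """Check length, allowed characters and the IP-address rule."""
    if not _BUCKET_NAME.fullmatch(name):
        return False
    if _IP_ADDRESS.fullmatch(name):
        return False
    return True


for candidate in ["my-bucket-2020", "My-Bucket", "ab", "192.168.5.4"]:
    print(candidate, is_valid_bucket_name(candidate))
```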

2.1.2 Select Region:

- AWS recommends choosing the region closest to the majority of the website's and app's users

- Here, I choose the Singapore region, since it is the AWS region closest to Vietnam

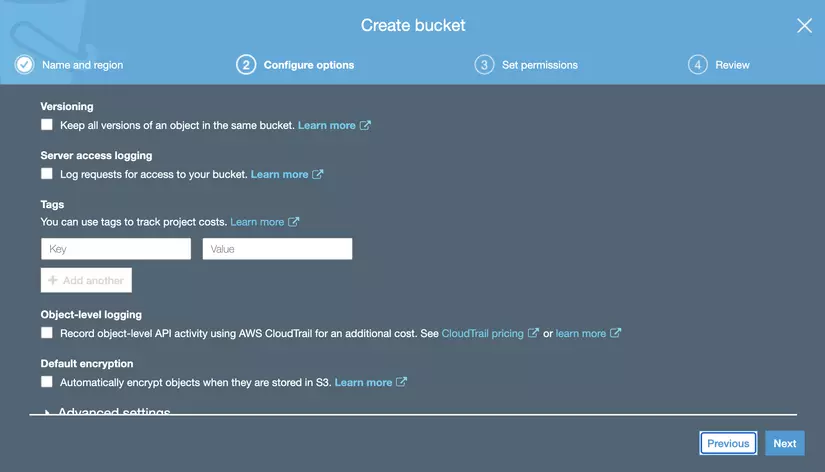

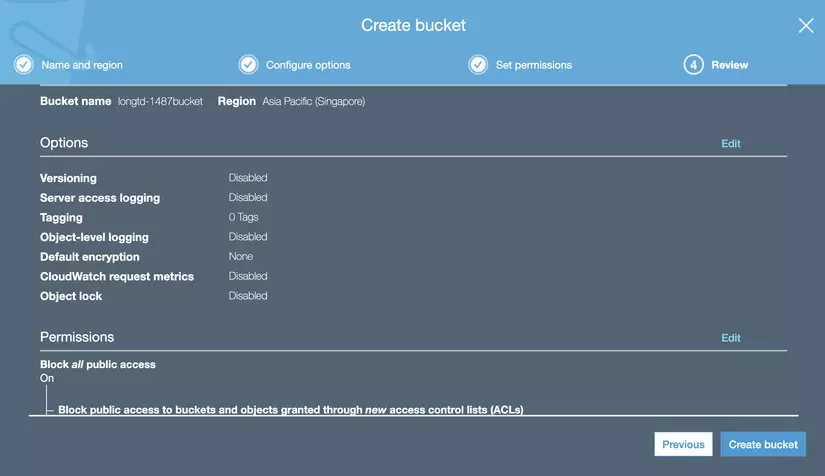

2.1.3 Properties:

- Versioning: Allows you to keep all versions of an object in the same bucket

- Server access logging: Logs every request made to the bucket (enabling it is recommended, because it makes it easier to trace the logs when an API call fails)

- Object-level logging: A paid option that records management events and data events, and can use the CloudTrail Insights feature of AWS.

- Default encryption:

For now we will leave these settings at their defaults and go ahead and create the S3 bucket; the advanced settings will be covered in detail later.

Step config options:

Step review:

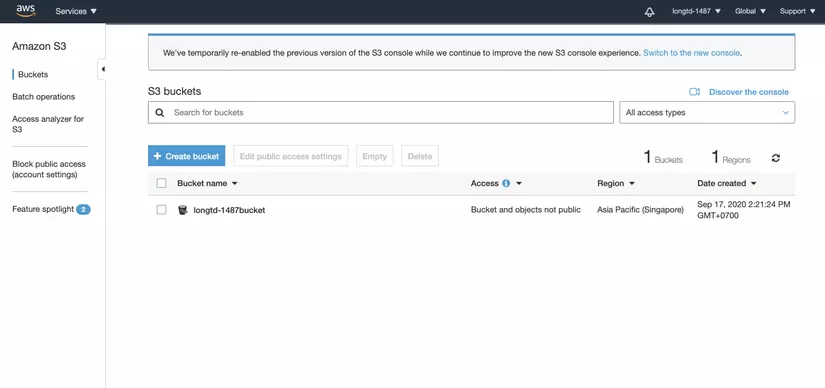

Result of creating bucket:

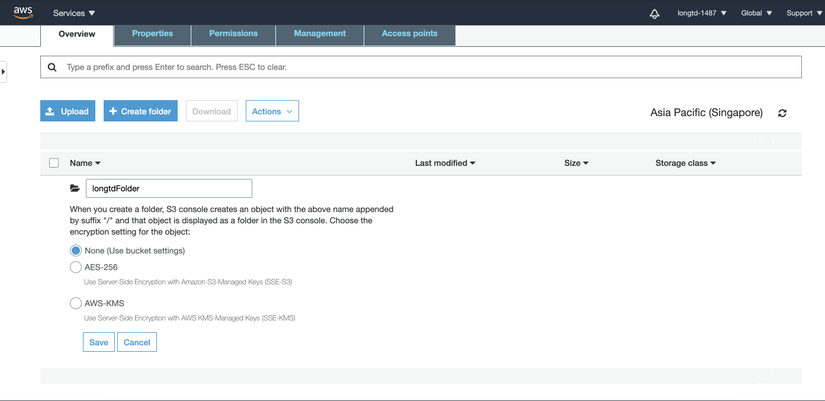

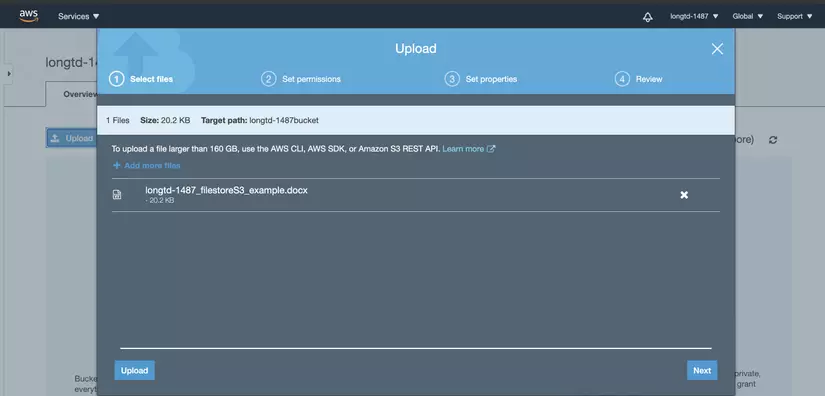

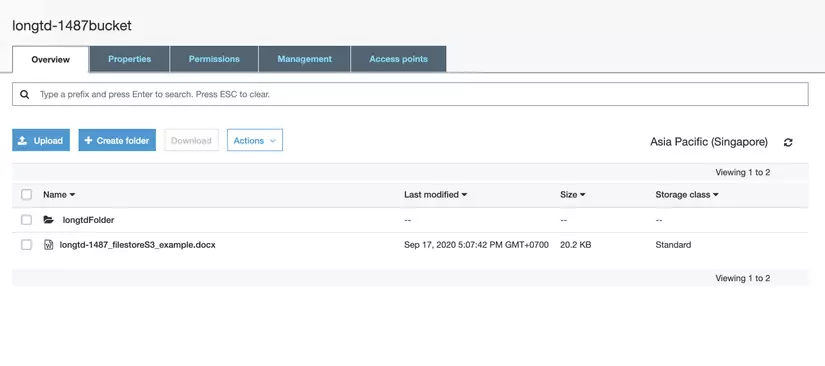

2.1.4 Upload Object (file) to bucket

2.1.4.1 Create subfolder (AWS calls a subfolder a folder)

- After successfully creating an S3 bucket, we can upload any kind of file (text, video, image, …) to the S3 folder

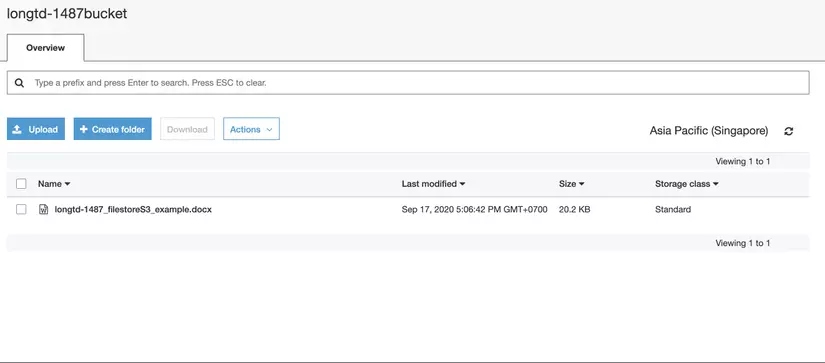

- The result after uploading the file to the subfolder:

- Next, upload the same file directly to the S3 bucket instead of the subfolder:

- At this point we have objects stored in both the subfolder and the S3 bucket itself. We do not have to create a folder before uploading; files can be uploaded directly to the S3 bucket, but that makes them harder to manage.

- Because we selected a region for the S3 bucket, any object uploaded to the bucket is stored in the data centers of that region.

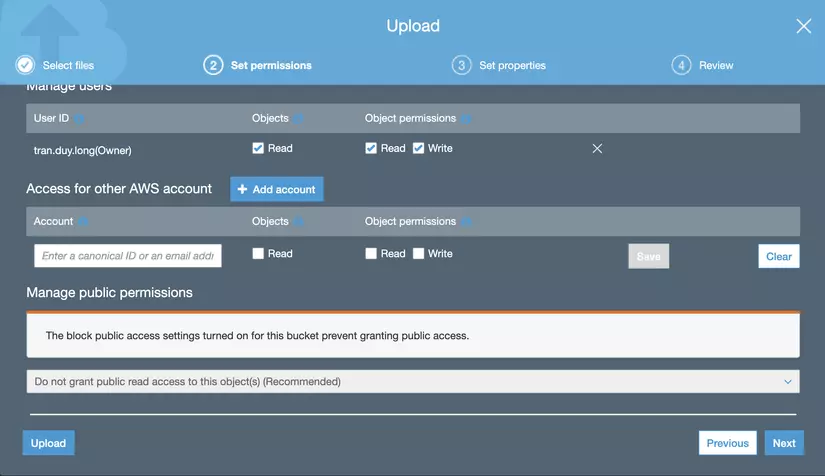

2.1.4.2 Set permissions for Objects

- We can set Read / Write permissions for other AWS accounts. This setting restricts access to the resources stored on S3

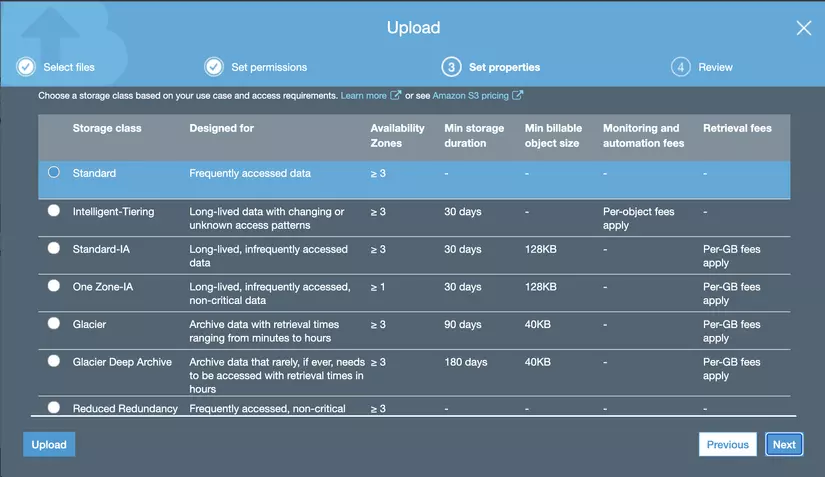

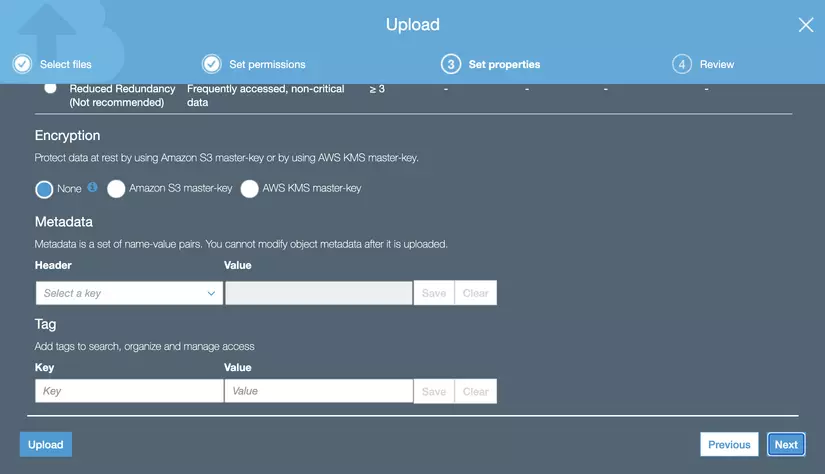

2.1.4.3 Set properties for Objects

- This setting allows us to limit the storage time of files on the S3 Bucket (30 days, 90 days, …), the size of the file as well as encrypt the data using the Amazon master-key.

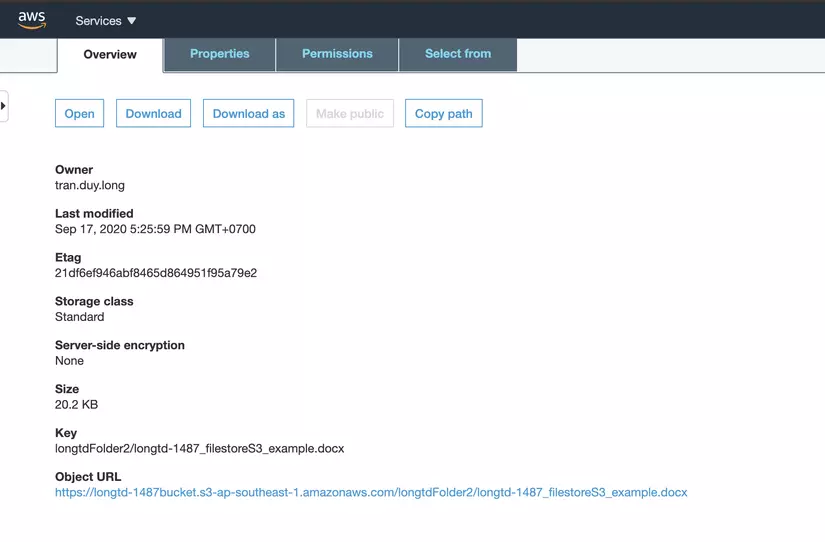

- After creating the file, by clicking directly on the file, AWS provides us with an Object URL to be able to download this file.

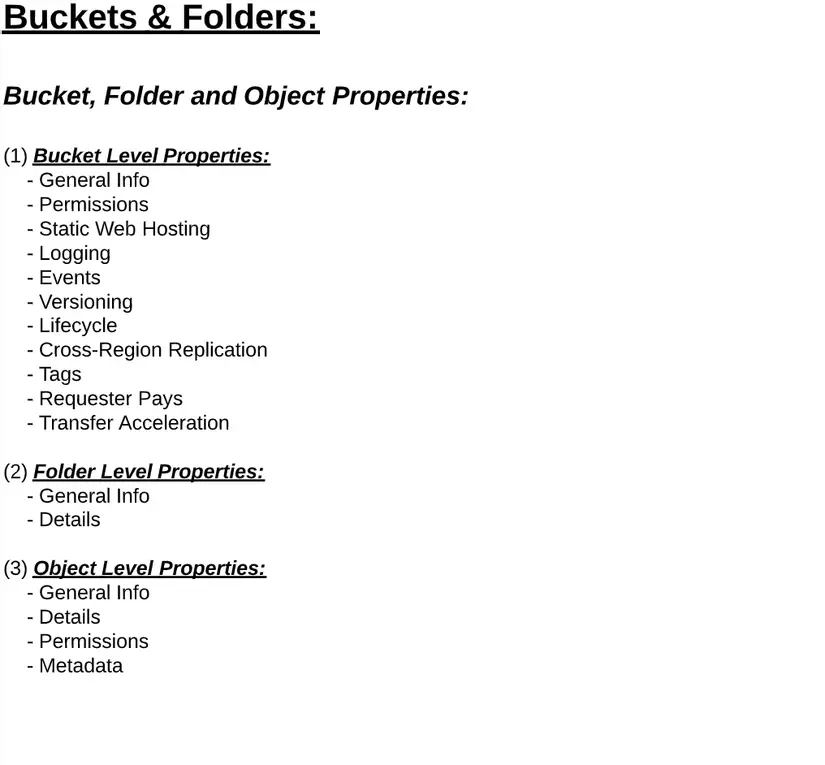
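To make the shape of an Object URL concrete, here is a small sketch that builds one from the bucket name, region and object key. It assumes the common virtual-hosted-style format; the function name, bucket name and key are hypothetical, and `ap-southeast-1` is the region code for Singapore. Note that opening the URL only downloads the file if the object's permissions allow it.

```python
from urllib.parse import quote


def object_url(bucket: str, region: str, key: str) -> str:
    """Build a virtual-hosted-style Object URL (assumed format)."""
    # quote() keeps "/" unescaped by default, so the folder separators
    # in the key survive while spaces and similar characters are encoded.
    return f"https://{bucket}.s3.{region}.amazonaws.com/{quote(key)}"


print(object_url("example-bucket", "ap-southeast-1", "subfolder/my photo.png"))
```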

2.2 Bucket, Folder and Object Properties:

The Bucket, Folder and Object Properties are divided into the following levels:

3. Storage Class

3.1 Storage Class Definition

One thing to keep in mind is that S3 pricing depends on the storage class:

https://aws.amazon.com/s3/pricing/

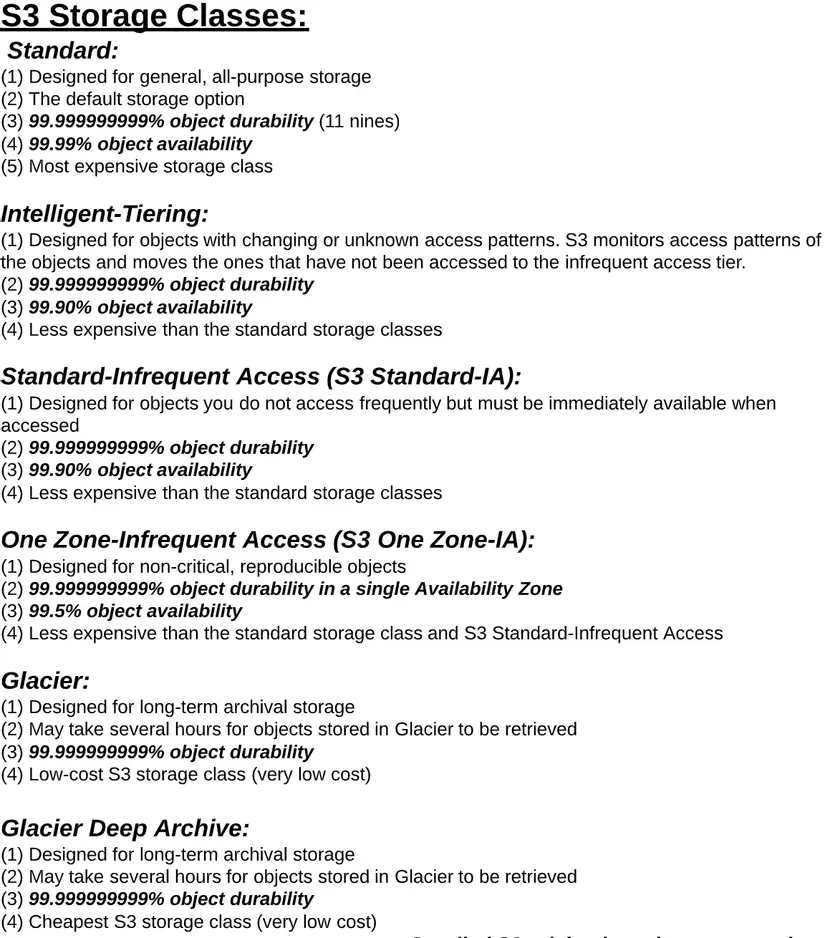

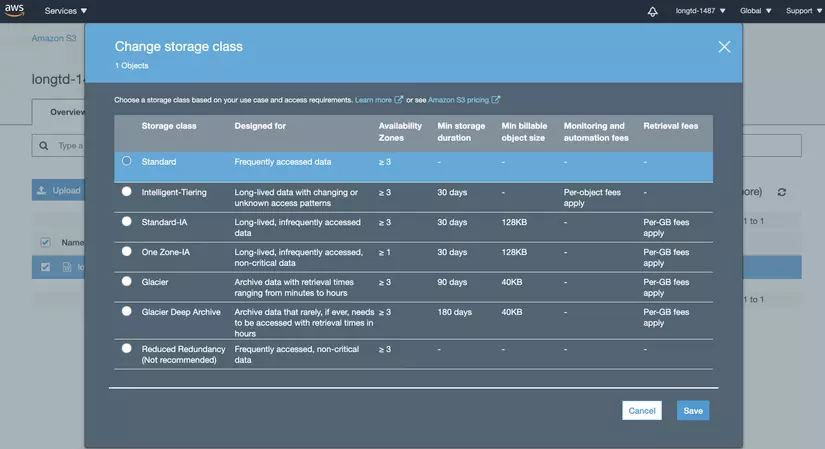

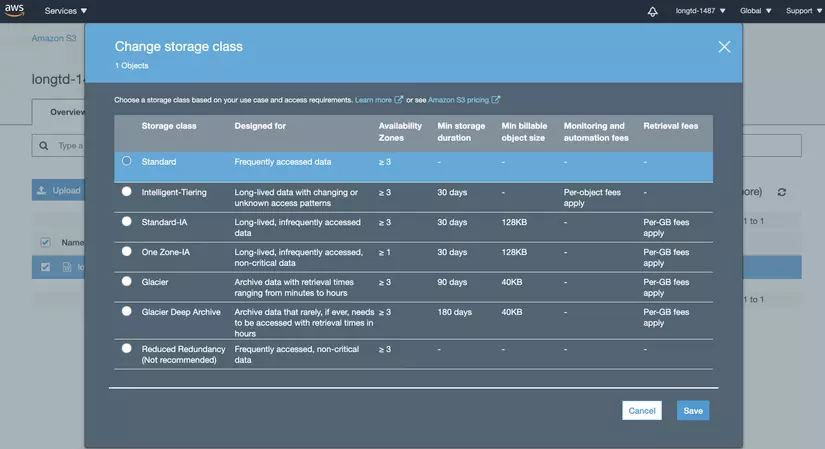

3.1.1 A storage class is a classification assigned to each object in S3

- Storage classes include:

- Standard

- Intelligent-Tiering

- Standard Infrequent Access

- One Zone-Infrequent Access (One Zone-IA)

- Glacier

- Glacier Deep Archive

- Reduced Redundancy (not recommended)

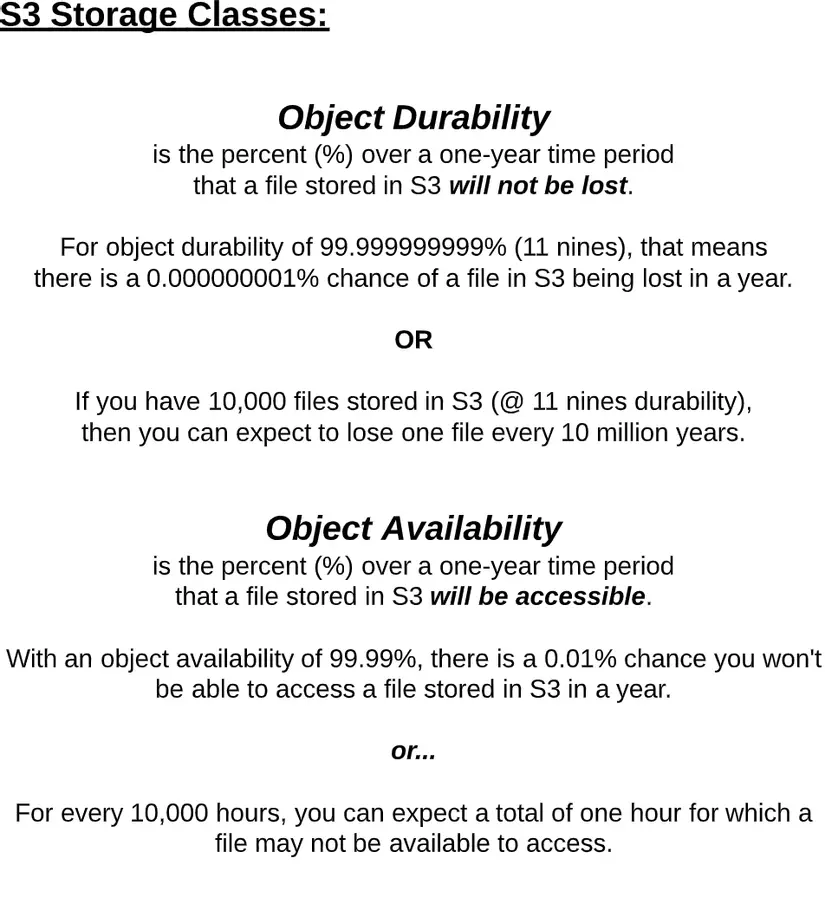

3.1.2 Each storage class has its own characteristics, such as:

- Storage cost

- Object availability

- Object durability

- Frequency of access (to object)

3.1.3 Each object must be assigned a storage class (Standard is the default class).

3.1.4 For certain storage classes, we can change the storage class of an object at any time:

- Standard

- Intelligent-Tiering

- Standard-Infrequent Access (S3 Standard-IA)

- One Zone-Infrequent Access (S3 One Zone-IA)
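When working with S3 from code rather than the console, each storage class is referred to by an API identifier instead of its display name. The sketch below maps the names used in this article to those identifiers and records which classes the list above says can be changed directly; the dictionary and function names are made up for illustration.

```python
# Display name used in this article -> identifier used by the S3 API
STORAGE_CLASS_API_NAMES = {
    "Standard": "STANDARD",
    "Intelligent-Tiering": "INTELLIGENT_TIERING",
    "Standard-Infrequent Access": "STANDARD_IA",
    "One Zone-Infrequent Access": "ONEZONE_IA",
    "Glacier": "GLACIER",
    "Glacier Deep Archive": "DEEP_ARCHIVE",
    "Reduced Redundancy": "REDUCED_REDUNDANCY",
}

# Classes that, per the list above, can be set on an object at any time.
DIRECTLY_CHANGEABLE = {"STANDARD", "INTELLIGENT_TIERING", "STANDARD_IA", "ONEZONE_IA"}


def can_change_directly(display_name: str) -> bool:
    """Return True if the class can be selected without a lifecycle rule."""
    return STORAGE_CLASS_API_NAMES[display_name] in DIRECTLY_CHANGEABLE


print(can_change_directly("Standard-Infrequent Access"))
print(can_change_directly("Glacier"))
```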

3.1.5 Details and characteristics of each Storage class

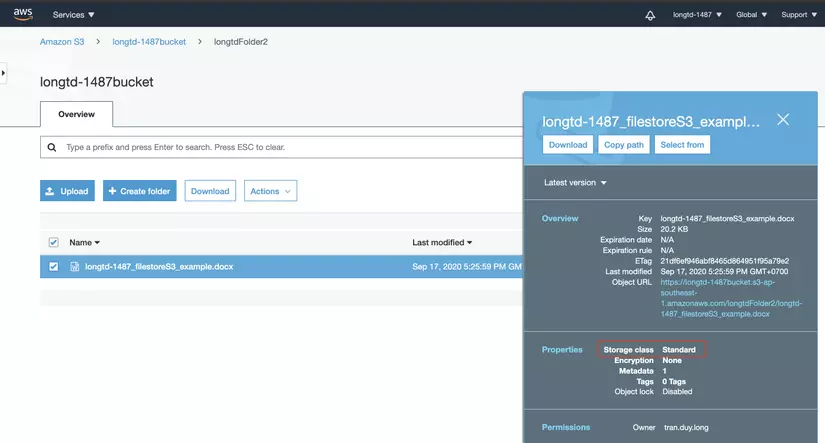

3.2 Setting / Changing Storage Class

3.2.1 By default, all new objects uploaded to S3 are set to the Standard storage class

When you click on the Storage Class text link, the settings popup is displayed

To give a new object a different storage class, we can set it in two ways:

- Select a different storage class during upload (set details)

- Use object lifecycle policies (covered in the following section).

Note: To move objects into the Glacier and Glacier Deep Archive storage classes, the following apply:

- An object lifecycle must be used.

- A change to Glacier can take 1 to 2 days to take effect.

4. Object Lifecycle

4.1 What is Object lifecycle?

An object lifecycle is a set of rules that automatically move an object from one storage class to another, or automatically delete it, based on a specified time period.

For example:

- We have a file that needs access every day for the next 30 days.

- After 30 days, we only need to access this file once a week for the next 60 days.

- After 90 days total, we will never need to access this file again, but still want to keep it just in case.

Solution to this problem:

Day 0-29

- Usage need = Very frequent

- Most suitable storage class = Standard

- Cost = Highest cost tier

Day 30-89

- Usage need = Infrequent

- Most suitable storage class = Standard Infrequent Access

- Cost = Middle cost tier

Day 90+

- Usage need = Almost never

- Most suitable storage class = Glacier

- Cost = Lowest cost tier
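The three-stage plan above can be written down as a lifecycle configuration. The sketch below expresses it as a Python dictionary in the shape that the S3 API accepts (with boto3 it would be passed as the `LifecycleConfiguration` argument of `put_bucket_lifecycle_configuration`), together with a small helper that reports which storage class an object would be in on a given day. The rule ID and the helper are hypothetical and only serve to check the plan; nothing here talks to AWS.

```python
LIFECYCLE_CONFIGURATION = {
    "Rules": [
        {
            "ID": "standard-to-ia-to-glacier",  # hypothetical rule name
            "Status": "Enabled",
            "Filter": {"Prefix": ""},  # empty prefix: applies to the whole bucket
            "Transitions": [
                {"Days": 30, "StorageClass": "STANDARD_IA"},
                {"Days": 90, "StorageClass": "GLACIER"},
            ],
        }
    ]
}


def storage_class_on_day(age_in_days, config=LIFECYCLE_CONFIGURATION):
    """Return the storage class an object has after the given number of days."""
    current = "STANDARD"  # new objects start in Standard by default
    transitions = config["Rules"][0]["Transitions"]
    for transition in sorted(transitions, key=lambda t: t["Days"]):
        if age_in_days >= transition["Days"]:
            current = transition["StorageClass"]
    return current


for day in (0, 29, 30, 89, 90):
    print(day, storage_class_on_day(day))
```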

4.2 Lifecycle Management

- The lifecycle function is configured at the bucket level

- However, a lifecycle policy can be applied to:

- The entire bucket (applies to all objects in the bucket)

- A certain folder in the bucket (applies to all objects in the folder)

- A certain object in the bucket.

- We can delete a lifecycle rule at any time, or change the storage class back to any desired setting

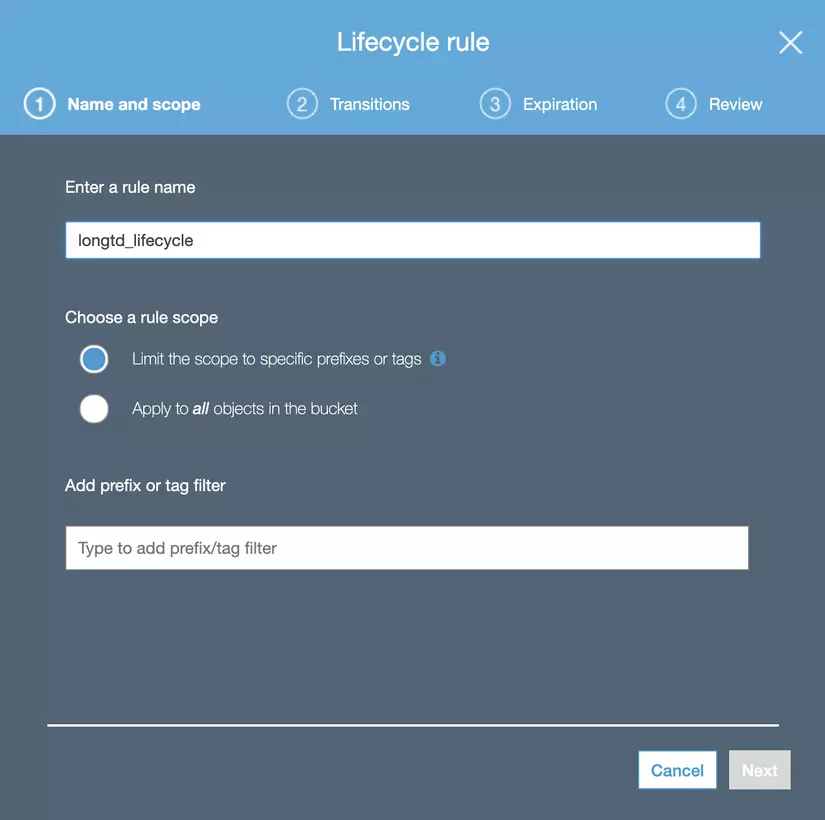

4.3 Setting up the Lifecycle

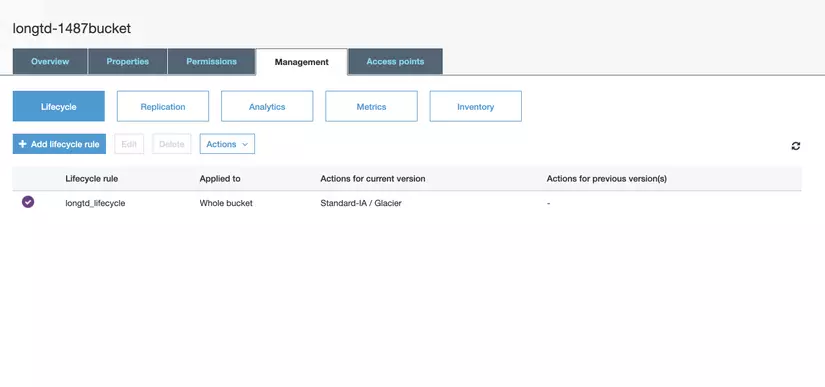

- In the bucket, select the Management tab and choose Add lifecycle rule

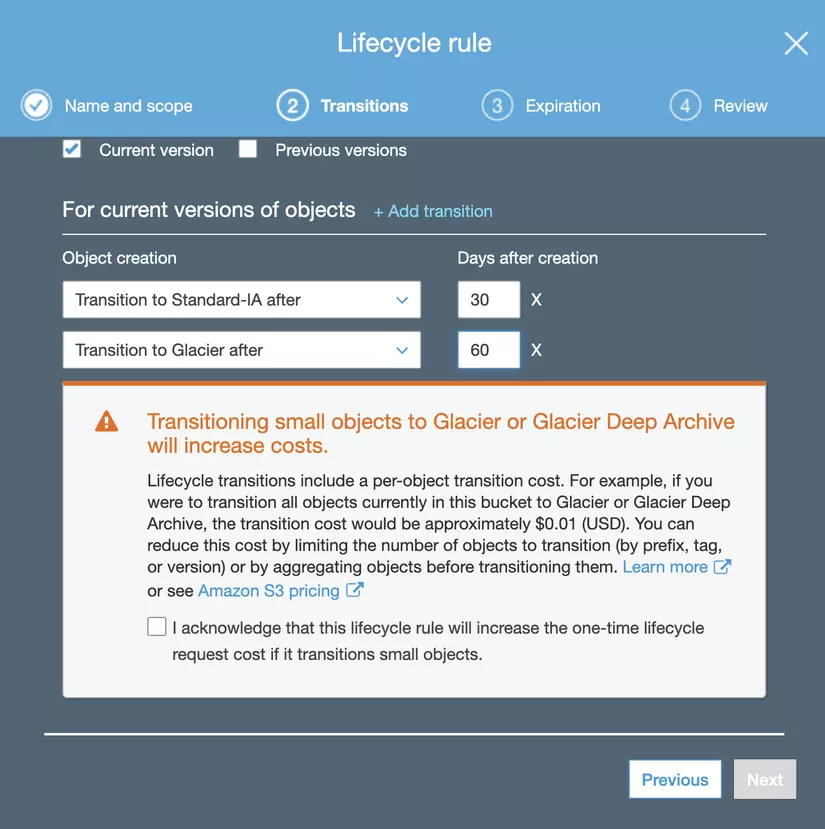

- On the Transition tab, we will proceed according to the above Solution: Note: Transitioning a small object to the Storage class Glacier will incur costs.

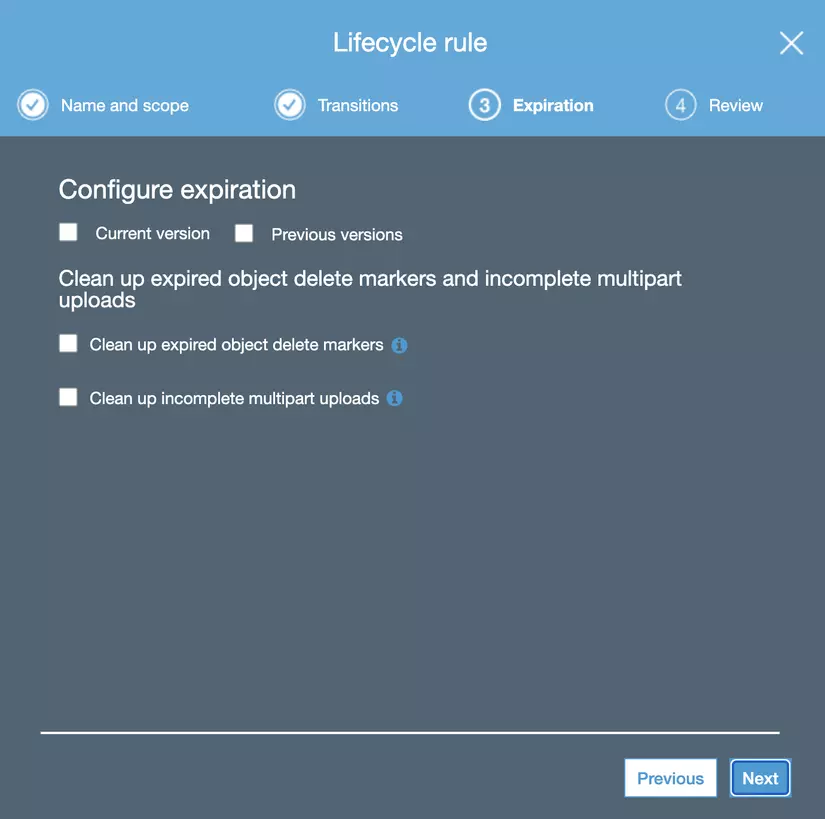

- Setting expired object / incomplete multiple part upload

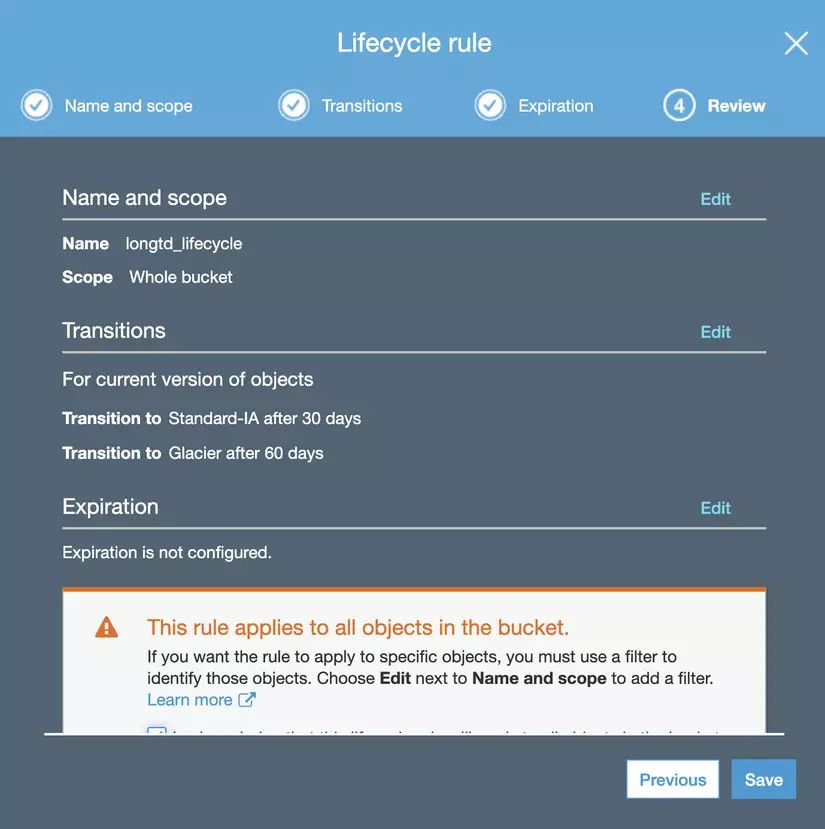

- Review step:

- Result:

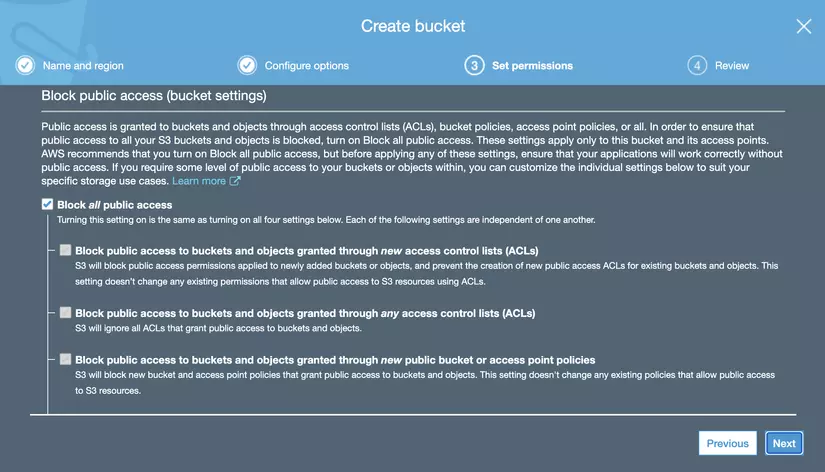

5. S3 Permission

5.1 What is S3 Permission?

S3 permissions let us control who can view, access, and use a bucket or object.

At the bucket level, we can control (for each bucket separately):

- List: Who can see the bucket name

- Upload / Delete

- Permissions: Add / Edit / Delete / View permissions

At the object level, we can control (for each object separately):

- Open / Download

- View Permission

- Edit Permission
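Besides the console settings listed above, access can also be described in a bucket policy, which is a JSON document attached to the bucket. This is a different mechanism from the per-bucket and per-object settings in the console, shown here only as a sketch: the function name, bucket name and 12-digit account ID are hypothetical, and the policy grants another AWS account permission to list the bucket and download its objects.

```python
import json


def read_only_bucket_policy(bucket: str, account_id: str) -> dict:
    """Build a policy letting another account list the bucket and read objects."""
    principal = {"AWS": f"arn:aws:iam::{account_id}:root"}
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowListBucket",
                "Effect": "Allow",
                "Principal": principal,
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",  # the bucket itself
            },
            {
                "Sid": "AllowGetObject",
                "Effect": "Allow",
                "Principal": principal,
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/*",  # every object in it
            },
        ],
    }


# Hypothetical bucket name and account ID, for illustration only.
print(json.dumps(read_only_bucket_policy("example-bucket", "123456789012"), indent=2))
```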

6. S3 Versioning

What is S3 Versioning?

S3 Versioning is a feature that tracks and stores every version of an object, so that we can access and use an older version if we want.

- Versioning can be ON or OFF

- Once turned ON, versioning can only be suspended; it cannot be turned OFF completely.

- Suspending versioning only stops new versions from being created; all existing object versions remain in storage

- Versioning can only be set at the bucket level, and applies to all objects in the bucket
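The rules above amount to a small state machine, which the following sketch models. It is a toy model of the bullets rather than an AWS client: "Enabled" and "Suspended" are the status values the S3 API uses, while "Off" is just a label chosen here for a bucket on which versioning has never been turned on, and the function name is hypothetical.

```python
# Which versioning states can be reached from each state, per the rules above.
ALLOWED_TRANSITIONS = {
    "Off": {"Enabled"},
    "Enabled": {"Suspended"},
    "Suspended": {"Enabled"},
}


def change_versioning(current: str, requested: str) -> str:
    """Return the new state, or raise if the change is not allowed."""
    if requested == current:
        return current
    if requested not in ALLOWED_TRANSITIONS[current]:
        raise ValueError(f"cannot change versioning from {current} to {requested}")
    return requested


state = change_versioning("Off", "Enabled")
state = change_versioning(state, "Suspended")
print(state)
```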

I would like to end this guideline on setting up S3 here. I hope everyone will continue with the next section, about RDS.