General introduction

In a small AI team, or in the early stage of building a POC (Proof of Concept) to convince customers or validate a business idea, most projects stop at the prototype step, with a monolithic codebase. The work moves quickly and stops after two steps:

- Data analysis and model training

- Preparing an interface for a real-time demo or a video recording

However, when moving from prototype to Production, we need more than that.

Human resources, for example:

To build an AI prototype, a few AI engineers are enough to handle these two tasks, but production also needs data labelers, AI deployment engineers, AI infrastructure engineers, and so on. (I will save the specifics of each role for another post.)

Technically:

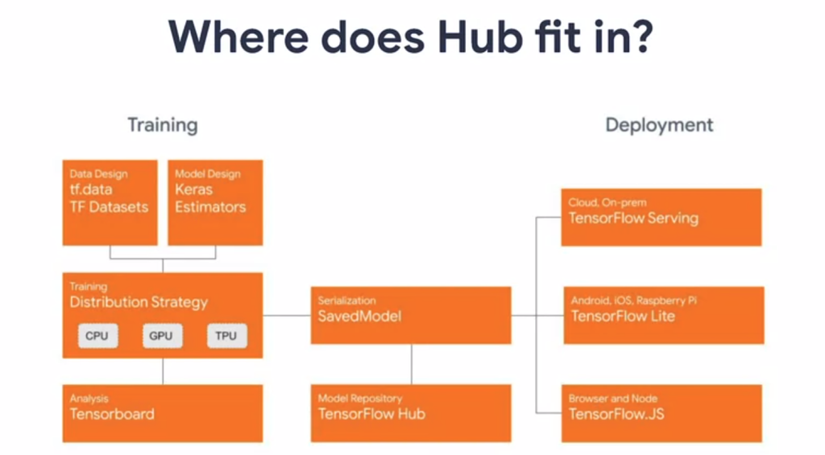

- An overview of the structure, the “ecosystem” that TensorFlow (specifically 2.0) has built to support production, and so on. (Just kidding, I have not run out of ideas; there is quite a lot in …, so I will fill in the details post by post to keep things easy to follow.)

I will start the series with a summary of the course “Advanced Deployment Scenarios with TensorFlow” on Coursera. If the overview leaves you interested, you can learn more here.

Analysis of the ecosystem TensorFlow has created

A quick summary of training:

This is the part most AI engineers do daily, and there are many frameworks to choose from:

- Preparing data: we can convert the data to the tf.data.Dataset format and then use the built-in preprocessing functions, or customize further.

- Model design: based on Keras.

- Understanding and evaluating the model (week 3 of the course): to understand the model and visualize the results and the data, TensorFlow provides TensorBoard. With TensorBoard we can visualize many things (histograms, accuracy, loss, data, …). All we need to do is write a callback (which can be customized with LambdaCallback), pass it to model.fit so it updates every epoch, and launch TensorBoard to view the results. Quite convenient.
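The three steps above can be sketched roughly as follows. This is only a minimal illustration, not the course's code: the random stand-in data, the layer sizes, and the helper names (`build_dataset`, `build_model`, `train_with_tensorboard`) are all assumptions made for the example.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf


def build_dataset(images, labels, batch_size=32):
    """Wrap in-memory arrays in a tf.data.Dataset with simple preprocessing."""
    ds = tf.data.Dataset.from_tensor_slices((images, labels))
    # Scale pixel values from [0, 255] to [0, 1].
    ds = ds.map(lambda x, y: (tf.cast(x, tf.float32) / 255.0, y))
    return ds.shuffle(len(images)).batch(batch_size)


def build_model(num_classes=10):
    """A tiny Keras classifier; the architecture is arbitrary."""
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(28, 28)),
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(32, activation="relu"),
        tf.keras.layers.Dense(num_classes, activation="softmax"),
    ])
    model.compile(
        optimizer="adam",
        loss="sparse_categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model


def train_with_tensorboard(model, dataset, log_dir, epochs=1):
    """Train while logging to TensorBoard and printing metrics each epoch."""
    callbacks = [
        # Writes scalars and weight histograms under log_dir.
        tf.keras.callbacks.TensorBoard(log_dir=log_dir, histogram_freq=1),
        # A custom hook built with LambdaCallback.
        tf.keras.callbacks.LambdaCallback(
            on_epoch_end=lambda epoch, logs: print(f"epoch {epoch}: {logs}")
        ),
    ]
    return model.fit(dataset, epochs=epochs, callbacks=callbacks, verbose=0)


# Random stand-in data (assumption): 64 fake 28x28 grayscale images.
images = np.random.randint(0, 256, size=(64, 28, 28), dtype=np.uint8)
labels = np.random.randint(0, 10, size=(64,))
log_dir = os.path.join(tempfile.mkdtemp(), "logs")
history = train_with_tensorboard(build_model(), build_dataset(images, labels), log_dir)
```

After training, running `tensorboard --logdir` with the chosen log directory in a terminal opens the dashboard.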

Saving the model

After the model has finished training, we usually move on to the next step: optimizing the model (quantization, pruning, graph optimization, converting to .pb, …; I will share this in another post) and saving it, either to prepare for deployment or for others to use.

Letting others use the model may seem like a minor thing, but it is extremely beneficial. One of the main uses is transfer learning (pre-trained models).

Week 2 covers how to use TensorFlow Hub to load pretrained models that have been uploaded by big companies such as Google, Facebook, Kaggle, … As in the sample code, these models can be downloaded and then trained further / fine-tuned, or used as a full model. For those who want to learn more about TensorFlow Hub: in my opinion it has quite a few models, so just go to the git repository, see what looks good, and customize it.

Deployment

As described above, after saving the model we can start a server to run it. When a client needs results, it sends a request to the server.
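One detail worth keeping in mind before starting the server: TensorFlow Serving looks for numbered version subdirectories under the model base path and, by default, serves the highest one. A minimal sketch of picking the export directory is below; the helper name `next_version_dir` and the temporary directory are assumptions for illustration only.

```python
import os
import tempfile


def next_version_dir(model_base_path):
    """Return the path of the next numbered version directory."""
    versions = [
        int(name)
        for name in os.listdir(model_base_path)
        if name.isdigit() and os.path.isdir(os.path.join(model_base_path, name))
    ]
    return os.path.join(model_base_path, str(max(versions, default=0) + 1))


model_base_path = tempfile.mkdtemp()  # stand-in for the real model directory
export_path = next_version_dir(model_base_path)  # ends in "1" on the first export
# With TF 2.x, the trained model would then be exported there, for example:
# tf.saved_model.save(model, export_path)
```

Exporting each retrained model into a new numbered directory lets the server pick up the newer version without changing the client.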

Week 1 introduces TensorFlow Serving, which accomplishes this in a few lines:

- The model server is launched with tensorflow_model_server from TensorFlow Serving.

- Send a request to this server with the appropriate input, and it returns the output.

Example code here:

```python
import os
import tempfile

# Define the model path
MODEL_DIR = tempfile.gettempdir()
version = 1
# .....
os.environ["MODEL_DIR"] = MODEL_DIR
# ...
```

```bash
# Server: run in a terminal
nohup tensorflow_model_server \
  --rest_api_port=8501 \
  --model_name=fashion_model \
  --model_base_path="${MODEL_DIR}" >server.log 2>&1
```

```python
# Client: run in another process
# ...
import json
import requests

# `data` is the JSON request body prepared in the elided code above.
headers = {"content-type": "application/json"}
# The model name in the URL must match --model_name on the server.
json_response = requests.post(
    "http://localhost:8501/v1/models/fashion_model:predict",
    data=data,
    headers=headers,
)
predictions = json.loads(json_response.text)["predictions"]
```
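The client code leaves out how the `data` request body is built. As a rough sketch, TensorFlow Serving's REST predict endpoint accepts a JSON object with an `instances` list and returns a JSON object with a `predictions` list. The helper names and the tiny fake batch below are assumptions for illustration, not part of the course code.

```python
import json


def build_predict_url(model_name, host="localhost", port=8501):
    """Return the REST predict endpoint for a served model."""
    return f"http://{host}:{port}/v1/models/{model_name}:predict"


def build_predict_payload(instances, signature_name="serving_default"):
    """Serialize a batch of inputs in the row ("instances") format."""
    return json.dumps({"signature_name": signature_name, "instances": instances})


def parse_predictions(response_text):
    """Extract the "predictions" list from the server's JSON response."""
    return json.loads(response_text)["predictions"]


# Two fake 2x2 "images" (assumption); real inputs must match the model's input shape.
batch = [[[0.0, 0.1], [0.2, 0.3]], [[0.4, 0.5], [0.6, 0.7]]]
url = build_predict_url("fashion_model")
data = build_predict_payload(batch)
```

The resulting `url` and `data` can be passed to `requests.post`, and the response text to `parse_predictions`.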

You can study the entire sample code here to speed up your own work.

I hope you found something useful in this post. Thank you for reading. If you found it interesting, continue with part 2.